Cloud and the shared responsibility model misconceptions

One of the most common concepts when working with cloud services is the “Shared responsibility model”.

The model aims to set the responsibility boundaries between the cloud service provider (CSP) and the cloud service consumer, depending on the cloud service model (IaaS, PaaS, SaaS).

In this post, I will review common misconceptions regarding the shared responsibility model.

Misconception #1 — My cloud provider’s certifications allow me to comply with regulations

This is a common misconception for companies (and new SaaS providers) who fail to understand the shared responsibility model while deploying their first workload.

Reviewing cloud providers’ compliance pages, we can see that the providers have already certified themselves for most regulations and local laws, and in some cases even offer customers special environments that are already in compliance with regulations such as PCI-DSS or HIPAA.

If you are planning to store sensitive customers’ data (from PII, healthcare, financial, or any other types of sensitive data) in a public cloud, keep in mind that according to the shared responsibility model, the cloud provider is responsible only for the lower layers of the architecture:

· IaaS — the CSP is responsible for all layers, from the physical layer to the virtualization layer

· PaaS — the CSP is responsible for all layers, from the physical layer to the guest operating system, middleware, and even runtime

· SaaS — the CSP is responsible for all layers, from the physical layer to the application layer
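
The split described in the list above can be sketched in a few lines of Python. This is only an illustration: the layer names and their ordering are a simplified assumption, not an official stack definition from any provider. Note that the data layer stays with the customer in every model.

```python
# Layers ordered from the bottom of the stack to the top.
# The names and ordering are a simplified assumption for illustration.
LAYERS = [
    "physical",
    "network",
    "virtualization",
    "guest_os",
    "middleware",
    "runtime",
    "application",
    "data",
]

# Highest layer the CSP manages under each service model, per the list above.
CSP_TOP_LAYER = {
    "IaaS": "virtualization",
    "PaaS": "runtime",
    "SaaS": "application",
}


def split_responsibility(model: str) -> tuple[list[str], list[str]]:
    """Return (csp_layers, customer_layers) for a service model."""
    top = LAYERS.index(CSP_TOP_LAYER[model])
    return LAYERS[: top + 1], LAYERS[top + 1 :]


for model in ("IaaS", "PaaS", "SaaS"):
    csp, customer = split_responsibility(model)
    print(f"{model}: customer is responsible for {', '.join(customer)}")
```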

Bottom line: the fact that a CSP holds all the relevant certifications means almost nothing when it comes to your own compliance with regulations or to protecting your customers’ data.

Each organization storing sensitive data in the cloud must conduct a risk assessment, review which data is stored in the cloud (before storing data in the cloud), and set the proper controls to protect customers’ data.

Misconception #2 — Who is responsible for protecting my data?

When customers (either organizations or personal customers) store their data in public cloud services, they sometimes mistakenly think that if they store their data in one of the major CSPs, their data is protected.

This is a misconception.

All major CSPs offer their customers a large variety of services and tools to protect their data (network access control lists, encryption in transit and at rest, authentication, authorization, auditing, and more). However, according to the shared responsibility model, it is up to the customer (mostly organizations storing their data in the cloud) to decide which security controls to implement.

In most cases, the CSPs don’t have access to customers’ data stored in the cloud, whether organizations decide to use managed storage services (from object storage to managed CIFS/NFS services), managed database services (from relational databases to NoSQL databases) and more.

The most obvious exception to the above is SaaS, where we grant CSP service accounts access to our data so that we can run queries, get insights about our data, or even perform regular backups. This access is mostly restricted to specific actions and to a specific role or service account, and usually shouldn’t be used by the CSP’s employees.

At the end of the day, the customer is always the data owner, and as a data owner, the customer must decide whether or not to store sensitive data in the cloud, who should have access to the data stored in the cloud, which access rights people get to read, update, or delete that data, and more.

Misconception #3 — Availability is not my concern since the cloud is highly available by design

The above headline is true, mainly for major SaaS services.

When looking at availability and building highly available architectures, specifically in IaaS and PaaS, it is up to us, as organizations, to use the services and the service capabilities that CSPs offer us, to build highly available solutions.

If we deploy our application on a single VM, or store our data in a managed database service, without placing everything behind a load balancer or in a cluster, we will not get the availability that our customers expect.

Even with managed object storage services, if we choose a low-redundancy tier that uses a single availability zone, the CSP does not guarantee high availability.
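
To make the effect of redundancy concrete, here is a rough back-of-the-envelope calculation in Python. The availability figures are assumptions chosen for illustration, not numbers from any provider's SLA, and the model assumes components fail independently, which real deployments only approximate.

```python
from math import prod


def serial(*availabilities: float) -> float:
    """Every component must be up, so availabilities multiply."""
    return prod(availabilities)


def parallel(*availabilities: float) -> float:
    """Redundant components: the group is down only if all of them are down."""
    return 1 - prod(1 - a for a in availabilities)


# Assumed figures for illustration only; not taken from any provider's SLA.
VM = 0.999
LOAD_BALANCER = 0.9999
DATABASE = 0.9995

single_vm = serial(VM, DATABASE)
two_vms_behind_lb = serial(LOAD_BALANCER, parallel(VM, VM), DATABASE)

HOURS_PER_YEAR = 24 * 365
for name, a in [("single VM", single_vm), ("two VMs + LB", two_vms_behind_lb)]:
    downtime = (1 - a) * HOURS_PER_YEAR
    print(f"{name}: {a:.4%} available, about {downtime:.1f} hours down per year")
```

Under these assumptions the redundant design is bounded by its least available non-redundant component (here the database), which is why each tier of a workload needs its own redundancy decision.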

To achieve high availability for our workloads, we need to review cloud providers’ documentation, such as the “Well-Architected” frameworks, and design our workloads to fit business needs.

Misconception #4 — Incident response in the cloud is an impossible mission

This part is a little bit tricky.

As AWS always mentions, the provider is responsible for the security of the cloud. That includes the incident response process for the cloud infrastructure: the physical data center, the host OS, the network equipment, the virtualization layer, and all the managed services.

We, as customers of cloud services, are responsible for security within our cloud environments.

In IaaS, everything within the guest OS is our responsibility as customers of the cloud.

It is our responsibility to enable auditing as much as possible and to send all logs to a central log repository, and from there to our SIEM system (whether it is located on-premises or in a managed cloud service).

There are also documented procedures for building a forensics environment, made out of snapshots of our VMs or databases, for further analysis.

It is not perfect; we still don’t control the entire flow of the packet from the lower network layers to the application layer, and on managed PaaS services we only have audit logs and we can’t perform memory analysis of managed services (such as databases).

In SaaS services it gets even worse. In the best case, the SaaS provider is mature enough to let us pull audit logs through an API and send them to our SIEM system for further analysis. Unfortunately, not all SaaS providers are mature enough to give us access to the audit logs.

Bottom line: challenging, but not completely impossible, depending on the cloud service model and the maturity of the cloud provider.

Summary

It is important to understand the shared responsibility model, but it is even more important to understand the cloud service model and the services and tools available to us, so that we can build secure and highly available cloud environments.

References

· AWS Compliance Programs

https://aws.amazon.com/compliance/programs

· Azure compliance documentation

https://docs.microsoft.com/en-us/azure/compliance

· GCP Compliance offerings

https://cloud.google.com/security/compliance/offerings

· AWS Well-Architected Framework

https://docs.aws.amazon.com/wellarchitected/latest/framework/welcome.html

· Forensic investigation environment strategies in the AWS Cloud

https://aws.amazon.com/blogs/security/forensic-investigation-environment-strategies-in-the-aws-cloud

· Computer forensics chain of custody in Azure

https://docs.microsoft.com/en-us/azure/architecture/example-scenario/forensics

Introduction to Policy as Code

Building our first environment in the cloud, or perhaps migrating our first couple of workloads to the cloud is fairly easy until we begin the ongoing maintenance of the environment.

Pretty soon we start to realize we are losing control over our environment – from configuration changes, forgetting to implement security best practices, and more.

At this stage, we wish we could go back, rebuild everything from scratch, and set much stricter rules for creating new resources and their configuration.

Manual configuration simply doesn’t scale.

Developers would like to focus on what they do best – developing new products or features, while security teams would like to enforce guard rails, allowing developers to do their work, while still enforcing security best practices.

In the past couple of years, one of the hottest topics has been Infrastructure as Code, a declarative way to deploy new environments using code (mostly in JSON or YAML format).

Infrastructure as Code is a good solution for deploying a new environment, or even for reusing some of the code to deploy several environments. However, it is meant for a specific task.

What happens when we would like to set guard rails on an entire cloud account or even on our entire cloud organization environment, containing multiple accounts, which may expand or change daily?

This is where Policy as Code comes into the picture.

Policy as Code allows you to write high-level rules and assign them to an entire cloud environment, so that they apply to any existing or new product or service we deploy or consume.

Policy as Code allows security teams to define security, governance, and compliance policies according to business needs and assign them at the organizational level.

The easiest way to explain it is – can user X perform action Y on resource Z?
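
As a minimal sketch of that question, the Python below evaluates a list of rules with two common conventions: an explicit deny always wins, and anything not explicitly allowed is denied. The `Rule` type, the rule set, and the action and resource names are all hypothetical, made up for illustration; real policy engines have far richer semantics.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Rule:
    effect: str    # "allow" or "deny"
    user: str      # glob pattern, e.g. "*" for everyone
    action: str    # glob pattern, e.g. "storage:*"
    resource: str  # glob pattern, e.g. "bucket/*"


def is_allowed(rules: list[Rule], user: str, action: str, resource: str) -> bool:
    """Can `user` perform `action` on `resource`?"""
    matched = [
        r
        for r in rules
        if fnmatchcase(user, r.user)
        and fnmatchcase(action, r.action)
        and fnmatchcase(resource, r.resource)
    ]
    if any(r.effect == "deny" for r in matched):
        return False  # an explicit deny overrides any allow
    return any(r.effect == "allow" for r in matched)  # default is deny


# Hypothetical rule set: alice may use storage, but nobody may make data public.
RULES = [
    Rule("allow", "alice", "storage:*", "bucket/*"),
    Rule("deny", "*", "storage:MakePublic", "*"),
]

print(is_allowed(RULES, "alice", "storage:Read", "bucket/reports"))
print(is_allowed(RULES, "alice", "storage:MakePublic", "bucket/reports"))
print(is_allowed(RULES, "bob", "storage:Read", "bucket/reports"))
```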

A more practical example from the AWS realm: block the ability to create a public S3 bucket. Once the policy is set and assigned, security teams no longer need to worry about whether someone made a mistake and left an S3 bucket publicly accessible, because the policy will simply block the action.

Looking for a code example to achieve the above goal? See:

https://aws-samples.github.io/aws-iam-permissions-guardrails/guardrails/scp-guardrails.html#scp-s3-1
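
For readers who prefer to see the shape of such a policy inline, here is a minimal sketch that builds a Service Control Policy as a Python dictionary and prints it as JSON. It is not a copy of the linked sample. It assumes S3 Block Public Access is already enabled, and it only stops member accounts from changing that setting; the statement ID is an arbitrary name chosen for this example.

```python
import json

# Sketch of an SCP that protects the S3 Block Public Access settings.
# Assumption: Block Public Access is already turned on for the accounts.
block_public_access_scp = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyChangesToS3BlockPublicAccess",  # arbitrary example name
            "Effect": "Deny",
            "Action": [
                "s3:PutAccountPublicAccessBlock",
                "s3:PutBucketPublicAccessBlock",
            ],
            "Resource": "*",
        }
    ],
}

# The JSON output is what would be attached to an organizational unit or account.
print(json.dumps(block_public_access_scp, indent=2))
```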

Policy as Code on AWS

When designing a multi-account environment based on the AWS platform, you should use AWS Control Tower.

AWS Control Tower aims to assist organizations deploying multiple AWS accounts under the same AWS organization, with the ability to deploy policies (Service Control Policies) from a central location, so that every newly created AWS account gets the same policies.

Examples of governance policies:

- Allowing resource creation only in specific regions. This lets European customers block resource creation in regions outside Europe, to help comply with the GDPR.

- Allow only specific EC2 instance types (to control cost).
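
The two governance examples above could look roughly like the following Service Control Policy, built here as a Python dictionary and printed as JSON. The region list, the instance types, and the list of global services exempted from the region rule are assumptions for illustration; a real policy needs a more complete exemption list.

```python
import json

# Assumed values for illustration; adjust to your own requirements.
ALLOWED_REGIONS = ["eu-west-1", "eu-central-1"]
ALLOWED_INSTANCE_TYPES = ["t3.micro", "t3.small"]
# Global services that are not tied to a region (deliberately incomplete).
GLOBAL_SERVICE_ACTIONS = ["iam:*", "organizations:*", "sts:*", "support:*"]

governance_scp = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # Deny everything except global services outside the allowed regions.
            "Sid": "DenyOutsideAllowedRegions",
            "Effect": "Deny",
            "NotAction": GLOBAL_SERVICE_ACTIONS,
            "Resource": "*",
            "Condition": {
                "StringNotEquals": {"aws:RequestedRegion": ALLOWED_REGIONS}
            },
        },
        {
            # Deny launching EC2 instances of any type not on the list.
            "Sid": "DenyOtherInstanceTypes",
            "Effect": "Deny",
            "Action": "ec2:RunInstances",
            "Resource": "arn:aws:ec2:*:*:instance/*",
            "Condition": {
                "StringNotEquals": {"ec2:InstanceType": ALLOWED_INSTANCE_TYPES}
            },
        },
    ],
}

print(json.dumps(governance_scp, indent=2))
```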

Examples of security policies:

- Prevent the upload of unencrypted objects to S3 buckets, to protect sensitive objects.

https://aws-samples.github.io/aws-iam-permissions-guardrails/guardrails/scp-guardrails.html#scp-s3-2

- Deny the use of the Root user account (least privilege best practice).
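
As a rough sketch, the two security examples above could be expressed as the following Service Control Policy, again built as a Python dictionary. The statement IDs are arbitrary names chosen for this example. The first statement rejects any upload that does not carry the server-side encryption header, so treat it as an illustration of the pattern and test it against your own upload tooling before relying on it.

```python
import json

security_scp = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # Reject uploads that do not request server-side encryption.
            "Sid": "DenyUnencryptedObjectUploads",
            "Effect": "Deny",
            "Action": "s3:PutObject",
            "Resource": "*",
            "Condition": {"Null": {"s3:x-amz-server-side-encryption": "true"}},
        },
        {
            # Reject every action performed with a member account's root user.
            "Sid": "DenyRootUser",
            "Effect": "Deny",
            "Action": "*",
            "Resource": "*",
            "Condition": {
                "StringLike": {"aws:PrincipalArn": "arn:aws:iam::*:root"}
            },
        },
    ],
}

print(json.dumps(security_scp, indent=2))
```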

AWS Control Tower allows you to configure baseline policies using CloudFormation templates, over an entire AWS organization, or on a specific AWS account.

To further assist in writing CloudFormation templates and service control policies at large scale, AWS offers some additional tools:

Customizations for AWS Control Tower (CfCT): the ability to customize AWS accounts and OUs, and to make sure governance and security policies remain in sync with security best practices.

AWS CloudFormation Guard: the ability to check CloudFormation templates for compliance against pre-defined policies.
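
To illustrate the idea of checking a template against a policy before deployment, here is a small Python sketch. It is not CloudFormation Guard and does not use Guard's rule language; it simply walks an already-parsed template (a dictionary) and reports S3 buckets that declare no `BucketEncryption` property. The template content and logical IDs are made up for the example.

```python
def unencrypted_buckets(template: dict) -> list[str]:
    """Return logical IDs of S3 buckets that declare no BucketEncryption."""
    offenders = []
    for logical_id, resource in template.get("Resources", {}).items():
        if resource.get("Type") != "AWS::S3::Bucket":
            continue  # this check only concerns S3 buckets
        if "BucketEncryption" not in resource.get("Properties", {}):
            offenders.append(logical_id)
    return offenders


# Hypothetical template, already parsed from JSON or YAML into a dict.
EXAMPLE_TEMPLATE = {
    "Resources": {
        "LogsBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                "BucketEncryption": {
                    "ServerSideEncryptionConfiguration": [
                        {"ServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
                    ]
                }
            },
        },
        "UploadsBucket": {"Type": "AWS::S3::Bucket"},
        "Queue": {"Type": "AWS::SQS::Queue"},
    }
}

print(unencrypted_buckets(EXAMPLE_TEMPLATE))
```

A check like this would typically run in a CI pipeline, failing the build when the returned list is not empty.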

Summary

Policy as Code allows an organization to automate the deployment of governance and security policies at large scale, keeping AWS organizations and accounts secure, while allowing developers to invest their time in developing new products, with minimal changes to their code required to comply with organizational policies.

References

- Best Practices for AWS Organizations Service Control Policies in a Multi-Account Environment

- AWS IAM Permissions Guardrails

https://aws-samples.github.io/aws-iam-permissions-guardrails/guardrails/scp-guardrails.html

- AWS Organizations – general examples

- Customizations for AWS Control Tower (CfCT) overview

https://docs.aws.amazon.com/controltower/latest/userguide/cfct-overview.html

- Policy-as-Code for Securing AWS and Third-Party Resource Types

https://aws.amazon.com/blogs/mt/policy-as-code-for-securing-aws-and-third-party-resource-types/

Not all cloud providers are built the same

When organizations debate workload migration to the cloud, they begin to realize the number of public cloud alternatives that exist, both U.S. hyper-scale cloud providers and several small to medium European and Asian providers.

The more we study the differences between cloud providers (both IaaS/PaaS and SaaS providers), the more we realize that not all cloud providers are built the same.

How can we select a mature cloud provider from all the alternatives?

Transparency

Mature cloud providers make sure you don’t have to search their website to locate their security compliance documents, and allow you to download their security controls documentation, such as SOC 2 Type II reports, CSA STAR, the CSA Cloud Controls Matrix (CCM), etc.

What happens if we wish to evaluate the cloud provider by ourselves?

Will the cloud provider (no matter which cloud service model) allow me to conduct a security assessment (or even a penetration test) to check the effectiveness of its security controls?

Global presence

When evaluating cloud providers, ask yourself the following questions:

- Does the cloud provider have a local presence near my customers?

- Will I be able to deploy my application in multiple countries around the world?

- In case of an outage, will I be able to continue serving my customers from a different location with minimal effort?

Scale

Deploying an application for the first time, we might not think about it, but what happens in the peak scenario?

Will the cloud provider allow me to deploy hundreds or even thousands of VMs (or even better, containers), in a short amount of time, for a short period, from the same location?

Will the cloud provider allow me infinite scale to store my data in cloud storage, without having to guess or estimate the storage size?

Multi-tenancy

As customers, we expect our cloud providers to offer us a fully private environment.

We never want to hear about a “noisy neighbor” (where one customer uses a lot of resources, which eventually affects other customers), and we never want to hear a provider admit that some or all of the resources (VMs, databases, storage, etc.) are being shared among customers.

Will the cloud provider be able to offer me a commitment to a multi-tenant environment?

Stability

One of the major reasons for migrating to the cloud is the ability to re-architect our services, whether we are still using VMs based on IaaS, databases based on PaaS, or fully managed CRM services based on SaaS.

In all scenarios, we would like to have a stable service with zero downtime.

Will the cloud provider allow me to deploy a service in a redundant architecture, that will survive data center outage or infrastructure availability issues (from authentication services, to compute, storage, or even network infrastructure) and return to business with minimal customer effect?

APIs

In the modern cloud era, everything is based on API (Application programming interface).

Will the cloud provider offer me various APIs?

From deploying an entire production environment in minutes using Infrastructure as Code, to monitoring the performance of our services, cost, and security auditing, everything should be possible through an API. Otherwise, the platform is simply not scalable, mature, automated, or standard, and it is prone to human error.

Data protection

Encrypting data in transit using TLS 1.2 is a common standard, but what about encryption at rest?

Will the cloud provider allow me to encrypt a database, object storage, or a simple NFS storage using my encryption keys, inside a secure key management service?

Will the cloud provider allow me to automatically rotate my encryption keys?

What happens if I need to store secrets (credentials, access keys, API keys, etc.)? Will the cloud provider allow me to store my secrets in a secured, managed, and audited location?

If I am about to store extremely sensitive data (PII, credit card details, healthcare data, or even military secrets), will the cloud provider offer me a confidential computing solution, where sensitive data stays protected even in memory (in use)?

Well architected

A mature cloud provider has a vast amount of expertise and shares that knowledge with you: how to build an architecture that is secure, reliable, performance efficient, and cost-optimized, and how to continually improve the processes you have built.

Will the cloud provider offer me rich documentation on how to achieve all the above-mentioned goals, to provide my customers the best experience?

Will the cloud provider offer me an automated solution for deploying an entire application stack within minutes from a large marketplace?

Cost management

The more we broaden our use of the IaaS / PaaS service, the more we realize that almost every service has its price tag.

We might not prepare for this in advance, but once we begin to receive the monthly bill, we begin to see that we pay a lot of money, sometimes for services we don’t need, or for an expensive tier of a specific service.

Unlike on-premises environments, most cloud providers offer us a way to lower the monthly bill or pay only for what we consume.

Regarding cost management, ask yourself the following questions:

Will the cloud provider charge me for services when I am not consuming them?

Will the cloud provider offer me detailed reports that will allow me to find out what I am paying for?

Will the cloud provider offer me documents and best practices for saving costs?

Summary

Answering the above questions with your preferred cloud provider will allow you to differentiate a mature cloud provider from the rest of the alternatives, and will assure you that you have made the right choice in selecting a cloud provider.

The answers will provide you with confidence, both when working with a single cloud provider, and when taking a step forward and working in a multi-cloud environment.

References

Security, Trust, Assurance, and Risk (STAR)

https://cloudsecurityalliance.org/star/

SOC 2 – SOC for Service Organizations: Trust Services Criteria

https://www.aicpa.org/interestareas/frc/assuranceadvisoryservices/aicpasoc2report.html

Confidential Computing and the Public Cloud

https://eyal-estrin.medium.com/confidential-computing-and-the-public-cloud-fa4de863df3

Confidential computing: an AWS perspective

https://aws.amazon.com/blogs/security/confidential-computing-an-aws-perspective/

AWS Well-Architected

https://aws.amazon.com/architecture/well-architected

Azure Well-Architected Framework

https://docs.microsoft.com/en-us/azure/architecture/framework/

Google Cloud’s Architecture Framework

https://cloud.google.com/architecture/framework

Oracle Architecture Center

https://docs.oracle.com/solutions/

Alibaba Cloud’s Well-Architectured Framework

Knowledge gap as a cloud security threat

According to a Gartner survey, “Through 2022, traditional infrastructure and operations skills will be insufficient for 58% of the operational tasks”. Combine this with a previous Gartner forecast predicting that organizations’ spending on public cloud services will grow to 397 billion dollars, and you begin to understand that we have a serious threat.

Covid-19 and the cloud era

The past year and a half with the Covid pandemic forced organizations to re-evaluate their IT services and as a result, more and more organizations began shifting to work from anywhere and began migrating part of their critical business applications from their on-premise environments to the public cloud.

The shift to the public cloud was sometimes quick, and in many cases it happened without proper evaluation of the security risk to customers’ data.

Where is my data?

Migrating to the public cloud began raising questions such as “where is my data located”?

The hyper-scale cloud providers (such as AWS, Azure, GCP) have a global presence, but the first question we should always ask is “where is my data located”, followed by whether we should build new environments in a specific country or continent to comply with data protection laws such as the GDPR in Europe, the CCPA in California, etc.

Hybrid cloud, multi-cloud, any cloud?

Almost every organization that begins using the public cloud hears the terms “hybrid cloud” and “multi-cloud”, and begins debating which future architecture suits the organization’s needs and business goals.

I often hear the question – should I choose AWS, Azure, GCP, or perhaps a smaller public cloud provider, that will allow me to migrate to the cloud and be able to support my business needs?

Security misconfiguration

Building new environments in the public cloud using “quick and dirty” methods often comes with misconfigurations, from allowing public access to cloud storage services to open access to databases containing customers’ data.
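
One of the misconfigurations mentioned above, public access to cloud storage, can often be spotted with a simple check. The Python sketch below assumes an AWS-style JSON bucket policy and flags `Allow` statements that grant access to everyone with no condition attached. It is deliberately simplistic: it ignores ACLs and treats any conditional statement as non-public, so it should be read as an illustration rather than a complete audit. The bucket name is hypothetical.

```python
def _as_list(value):
    """Policy fields may hold a single item or a list of items."""
    return value if isinstance(value, list) else [value]


def public_statements(policy: dict) -> list[dict]:
    """Return Allow statements that grant access to everyone, unconditionally."""
    found = []
    for statement in _as_list(policy.get("Statement", [])):
        if statement.get("Effect") != "Allow":
            continue
        principal = statement.get("Principal")
        if isinstance(principal, dict):
            principals = _as_list(principal.get("AWS", []))
        else:
            principals = _as_list(principal)
        if "*" in principals and "Condition" not in statement:
            found.append(statement)
    return found


# Hypothetical policy: the first statement exposes every object to the world.
EXAMPLE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::example-bucket/*",
        },
        {
            "Effect": "Allow",
            "Principal": {"AWS": "arn:aws:iam::111122223333:root"},
            "Action": "s3:PutObject",
            "Resource": "arn:aws:s3:::example-bucket/*",
        },
    ],
}

for statement in public_statements(EXAMPLE_POLICY):
    print("Publicly accessible:", statement["Action"], "on", statement["Resource"])
```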

Closing the knowledge gap

To prepare your organization for cloud adoption, top management should invest budget in employee training (for IT, support teams, development teams, and naturally the information security team).

The Internet is full of guidelines (from fundamental cloud services to security) and low-cost online courses.

Allow your employees to close the skills gap, and invest time in allowing your security teams to shift their mindset from the on-premises environment (and its attack surface) to the public cloud.

Allow your security teams to take full advantage of managed services and built-in security capabilities (auditing, encryption, DDoS protection, etc.) that are embedded as part of mature cloud services.

The Future of Data Security Lies in the Cloud

We have recently read a lot of posts about the SolarWinds hack, a vulnerability in a popular monitoring software used by many organizations around the world.

This is a good example of a supply chain attack, which can happen to any organization.

We have seen similar scenarios over the past decade, from the Heartbleed bug, Meltdown and Spectre, Apache Struts, and more.

Organizations all around the world were affected by the SolarWinds hack, including the cybersecurity company FireEye, and Microsoft.

Events like these make organizations rethink their cybersecurity and data protection strategies and ask important questions.

Recent changes in the European data protection laws and regulations (such as Schrems II) are trying to limit data transfer between Europe and the US.

Should such security breaches occur? Absolutely not.

Should we live with the fact that such large organizations have been breached? Absolutely not!

Should organizations that have already invested a lot of resources in cloud migration move workloads back on-premises? I don’t think so.

But no organization, not even major financial organizations like banks or insurance companies, or even the largest multinational enterprises, has enough manpower, knowledge, and budget to invest in proper protection of its own data or its customers’ data the way hyperscale cloud providers do.

There are several reasons for this:

- Hyperscale cloud providers invest billions of dollars improving security controls, including dedicated and highly trained personnel.

- A breach of customers’ data that resides at a hyperscale cloud provider can drive that provider out of business, due to the loss of customers’ trust.

- Security is important to most organizations; however, it is not their main line of expertise.

Organizations need to focus on the core business that brings them value, like manufacturing, banking, healthcare, education, etc., and rethink how to obtain services that support their business goals, such as IT services, but do not add direct value.

Recommendations for managing security

Security Monitoring

Security best practices often state: “document everything”.

There are two downsides to this recommendation: One, storage capacity is limited and two, most organizations do not have enough trained manpower to review the logs and find the top incidents to handle.

Switching security monitoring to cloud-based managed systems such as Azure Sentinel or Amazon GuardDuty will assist in detecting important incidents, while the service handles the huge volume of logs internally.

Encryption

Another security best practice states: “encrypt everything”.

A few years ago, encryption was quite a challenge. Will the service/application support the encryption? Where do we store the encryption key? How do we manage key rotation?

In the past, only banks could afford HSM (Hardware Security Module) for storing encryption keys, due to the high cost.

Today, encryption is standard for most cloud services, backed by key management services such as AWS KMS, Azure Key Vault, Google Cloud KMS and Oracle Key Management.

Most cloud providers not only support encryption at rest, but also support customer-managed keys, which allow customers to generate their own encryption key for each service instead of using the cloud provider’s generated encryption key.

Security Compliance

Most organizations struggle to handle security compliance over large on-premises environments, not to mention large IaaS environments.

This issue can be solved by using managed compliance services such as AWS Security Hub, Azure Security Center, Google Security Command Center or Oracle Cloud Access Security Broker (CASB).

DDoS Protection

Any organization exposing services to the Internet (from a public-facing website, through email or DNS services, to a VPN service) will eventually suffer from a volumetric denial-of-service attack.

Only large ISPs have enough bandwidth to handle such an attack before the border gateway (firewall, external router, etc.) will crash or stop handling incoming traffic.

The hyperscale cloud providers have infrastructure that can handle DDoS attacks against their customers, offered through services such as AWS Shield, Azure DDoS Protection, Google Cloud Armor or Oracle Layer 7 DDoS Mitigation.

Using SaaS Applications

In the past, organizations had to maintain their entire infrastructure, from messaging systems to CRM, ERP, etc.

They had to think about scale, resilience, security, and more.

Most breaches of cloud environments originate from misconfigurations at the customers’ side on IaaS / PaaS services.

Today, the preferred way is to consume managed services in SaaS form.

These are a few examples: Microsoft Office 365, Google Workspace (Formerly Google G Suite), Salesforce Sales Cloud, Oracle ERP Cloud, SAP HANA, etc.

Limit the Blast Radius

To limit the “blast radius”, where an outage or security breach on one service affects other services, we need to re-architect infrastructure.

Switching from applications deployed inside virtual servers to modern approaches such as microservices based on containers, or building new applications based on serverless (function as a service), will help organizations limit the attack surface and possible future breaches.

Examples of these services: Amazon ECS, Amazon EKS, Azure Kubernetes Service, Google Kubernetes Engine, Google Anthos, Oracle Container Engine for Kubernetes, AWS Lambda, Azure Functions, Google Cloud Functions, Google Cloud Run, Oracle Cloud Functions, etc.

Summary

The bottom line: organizations can improve their security posture by using the public cloud to better protect their data, use the expertise of cloud providers, and invest their time in their core business to maximize value.

Security breaches are inevitable. Shifting to cloud services does not shift an organization’s responsibility to secure their data. It simply does it better.

Why Millennials Are Blasé About Privacy

Millennials don’t seem to care that Facebook and other companies harvest their data for profit. At least that’s the premise of a recent opinion piece in the New York Post. It suggests that millennials are resigned to the fact that, in order to have the many advantages that the new tech world provides, there has to be a sacrifice. If you are a millennial, I would be interested in your reaction to this premise and others which follow.

Millennials seem more comfortable with the notion that if a product is free then you are the product, and allow themselves to be an “open book” for all to see. As will be revealed later, the opinion piece opines that this is not true of previous generations, who appear to be more guarded with their privacy. Of course, previous generations had fewer threats to their privacy to go along with markedly less access to information, entertainment, and communication (just to name a few).

So it is not necessarily fair to single out the millennials as if they were some alien outliers. Although, like aliens, they come from and live in different worlds to their predecessors. I mean, book burning was non-existent before Gutenberg’s printing press printed books, and there wasn’t a need for fallout shelters until the world went nuclear. In fact, you could make a case that the dangerous, crazy world that was passed on to millennials, and that they now inherit, may make the exposure of their personal information to the public seem tame by comparison. Not to mention that heavy engagement with social media and the like is a needed distraction from modern life!

Besides, no one would have guessed some fifteen years ago that Mark Zuckerberg’s dorm room doodle would morph into the behemoth of a business model it is today, replete with its invasive algorithms. Who could have imagined that social media companies could learn our political leanings, our likes and dislikes, our religious affiliations, and our sexual orientations and proclivities? If I, or some legal or law enforcement entity, want to retrace my activities on a given day, that information is easily and readily accessible from my smartphone.

As millennials blithely roll over to the tech gods when it comes to filleting themselves publicly, the article takes them (and others) to task for handwringing and breathlessly expressing surprise and outrage at Cambridge Analytica for just working with the leeway given to them. Of course, if the company had helped Hillary Clinton win the White House instead of purportedly boosting the prospects of the odious ogre, Trump, there likely wouldn’t have been the same angst, or so the piece posits.

Be that as it may, the question must be asked: what did Cambridge Analytica do that countless other companies haven’t done? I mean, why should it be treated any differently by Facebook because it’s a political firm and not an avaricious advertising scavenger? The other Silicon Valley savants – Google, Apple, and Microsoft – all monetize your information. They are eager to invite advertisers, researchers, and government agencies to discover your treasure trove of personal information through them.

And millennials, as well as those of other generations, are only too willing, it seems, to provide such information, and in massive amounts. Indeed, they seem to relish, in a race to the bottom, who can post the most content, photos, and the like. They seem to be ambivalent about the inevitable fallout. “So what?” they say, “I’ve got nothing to hide.”

The article questions if those of previous generations would be so forthcoming, citing the so-called Greatest Generation eschewing the telephone if it meant that the government could eavesdrop on their conversations with impunity. On the contrary, millennials, it would appear, view the lack of privacy and the co-opting of personal information as the price for the plethora of pleasures that the digital medium supplies.

As Wired magazine founder Kevin Kelly said in his 2016 book, The Inevitable: Understanding the 12 Technological Forces That Will Shape Our Future:

“If today’s social media has taught us anything about ourselves as a species, it is that the human impulse to share overwhelms the human impulse for privacy.”

What do you think? Is it a fair assessment of the current state of affairs?

This article was originally published at BestVPN.com.

Your Internet Privacy Is at Risk, But You Can Salvage It All

In what has to be the most ironic turn of events, companies collectively pay cybersecurity experts billions of dollars every year so that they can keep their business safe and away from prying eyes. Once they have attained the level of security and privacy they want, they turn around to infringe upon the privacy of the people.

This has been the model many of them have been operating for a while now, and they don’t seem to be slowing down anytime soon. We would have said the government should fight against this, but not when they have a hand in the mud pie too.

In this piece, we discuss the various ways these organizations have been shredding your privacy to bits, what the future of internet privacy is shaping up to be, and how to take back control of your own data privacy.

How Your Internet Privacy Is Being Violated

A lot of the simple operations you perform on the internet every day mean more to some data collectors than you know. In fact, they are what these collectors use to decide what to offer you, what to hold back from you and so much more.

Going by the available technology, here are some of the frameworks that allow the collection and use of your information.

- Big data analytics: When you hear big data, what is being referred to is a large body of data which is meant for later analysis. Via a combination of efforts from man, bot and algorithms, the large amount of data is sifted through with the sole aim of finding patterns, trends, and behaviors. These are mapped to certain individuals/demographics and used to build predictive models for the future.

- Internet of Things: Everyday things (such as your printer, refrigerator, light, AC unit and so much more) can now be connected to the internet. This enables them to work with one another with the sole aim of interoperability and remote access. Thus, you can access live video feeds of your home from anywhere in the world. You can even have your refrigerator tell you what supplies you are running low on.

What you don’t know is that as your IoT units collect this data, they are not just feeding it to you. In fact, they are sending back a lot more than you know to the companies that developed them.

- Machine learning: Machines were taught to do more than just be machines. Instead of being given a set of commands to run with, they have now been given specialized commands to aid their learning. These machines are then released into data sets to practice their newfound freedom.

Guess what they do? Mine for data from almost any source they can lay hands on, rigorously analyze the data and use that to diverse ends.

The Significance of the Data Collection

All of the above might look like normal procedures for the achievement of the intended purposes that these technologies bring. However, they cause more harm than good.

On the one hand, companies use the datasets to pigeonhole consumers.

As of the time of this writing, machine learning data is already being used by some credit card companies to determine who should be granted a credit card and who shouldn’t. It is even more ridiculous to think this decision is based on something as simple as what type of car accessory a customer would opt for.

As if that is not enough, machine learning is approaching a place where it would soon be able to diagnose diseases. That is not the scary part. This diagnosis will be based on social media data.

The companies don’t even need to see you physically before they know what diseases you might have. Talk about prodding into the most sensitive areas of your life that you might not even have shared with family and friends.

That, and we have not even talked about how marketers will chase you around with ads based on what you’ve searched, offer suggested content based on your patterns, and keep you from seeing anything outside the box they have put you in.

Putting an End to The Nonsense

You don’t have to put up with all of this. Technology is meant to be enjoyed, so you shouldn’t be punished by being exploited.

One helpful tip is to route all your connections through a VPN. This masks your IP address from the sites and services you use, making it harder for data collectors and monitors to tie your data stream to your computer.

Your IoT devices will also benefit from a VPN if you can share one over your router. This helps to make them more secure, since they cannot use a VPN otherwise.

Private browser networks such as Tor will do you a lot of good when browsing the web. If you prefer conventional browsers, though, don’t forget to install an ad blocker before you go online again. They help prevent marketers and companies from tracking you all around the web after looking at some content.

Don’t forget to switch from Google to other search engines that leave your data to you. DuckDuckGo and Qwant are some of the options on this list.

Combine all of that, and you have your shot back at decency and privacy on the internet.

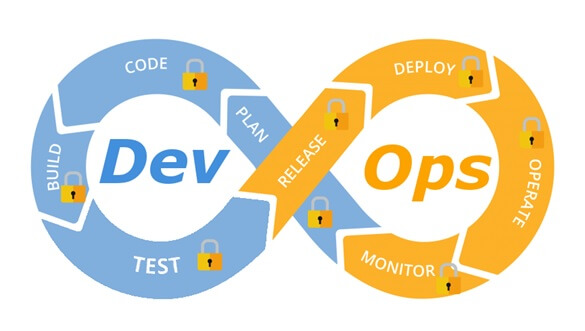

Integrate security aspects in a DevOps process

A diagram of a common DevOps lifecycle:

The DevOps world is meant to complement rapid development methodologies (such as Agile) and to serve cloud environments, where IT personnel become an integral part of the development process. In the DevOps world, managing a large number of development environments manually is practically infeasible. Monitoring mixed environments becomes complex, and the deployment of a large number of different builds becomes extremely fast and sensitive to changes.

The idea behind any DevOps solution is to provide a solution for deploying an entire CI/CD process, which means supporting constant changes and immediate deployment of builds/versions.

For the security department, this kind of process looks at first like a nightmare: dozens of builds, partial tests, no human control over any change, etc.

For this reason, it is crucial for the security department to embrace the DevOps attitude, which means embedding security in every part of the development lifecycle, software deployment or environment change.

It is important to understand that there are no fixed stages as there were in the waterfall development lifecycle, and most of the stages run in parallel. In the CI/CD world everything changes quickly and components can be part of different stages, so it is important to align the processes, methods and tools across all development and DevOps teams.

In order to better understand how to embed security into the DevOps lifecycle, we need to review the different stages in the development lifecycle:

Planning phase

This stage in the development process is about gathering business requirements.

At this stage, it is important to embed the following aspects:

- Gather information security requirements (such as authentication, authorization, auditing, encryptions, etc.)

- Conduct threat modeling in order to detect possible design and code weaknesses

- Training / awareness programs for developers and DevOps personnel about secure coding

Creation / Code writing phase

This stage in the development process is about the code writing itself.

At this stage, it is important to embed the following aspects:

- Connect the development environments (IDE) to static code analysis products

- Have a security expert, or a security champion acting on their behalf, review the solution architecture

- Review open source components embedded inside the code

Verification / Testing phase

This stage in the development process is about testing, conducted mostly by QA personnel.

At this stage, it is important to embed the following aspects:

- Run SAST (Static application security testing) tools on the code itself (pre-compiled stage)

- Run DAST (Dynamic application security testing) tools against the running application (post-compile stage)

- Run IAST (Interactive application security testing) tools against the application itself

- Run SCA (Software composition analysis) tools in order to detect known vulnerabilities in open source components or 3rd party components
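As a rough illustration of what the SCA step in the list above does, the sketch below compares pinned dependencies against a set of known-vulnerable versions. The package names, the `ADVISORIES` data and the helper functions are all hypothetical; a real SCA tool would pull advisories from a maintained vulnerability database and also resolve transitive dependencies.

```python
from typing import Dict, List, Set, Tuple


def parse_requirements(text: str) -> Dict[str, str]:
    """Parse 'name==version' lines, ignoring blank lines and comments."""
    pins: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[name.strip().lower()] = version.strip()
    return pins


def find_vulnerable(
    pins: Dict[str, str], advisories: Dict[str, Set[str]]
) -> List[Tuple[str, str]]:
    """Return (name, version) pairs whose pinned version appears in an advisory."""
    return sorted(
        (name, version)
        for name, version in pins.items()
        if version in advisories.get(name, set())
    )


# Hypothetical advisory data, for illustration only.
ADVISORIES: Dict[str, Set[str]] = {"examplelib": {"1.0.0", "1.0.1"}}

REQUIREMENTS = """
examplelib==1.0.1   # pinned long ago
otherlib==2.3.0
"""

if __name__ == "__main__":
    for name, version in find_vulnerable(parse_requirements(REQUIREMENTS), ADVISORIES):
        print(f"{name} {version} has a known vulnerability")
```

Running a check like this on every build, and failing the build when the list is not empty, is one way to keep the step automatic rather than dependent on a manual review.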

Software packaging and pre-production phase

This stage in the development process is about packaging the developed code before the deployment/distribution phase.

At this stage, it is important to embed the following aspects:

- Run IAST (Interactive application security testing) tools against the application itself

- Run fuzzing tools in order to detect buffer overflow vulnerabilities. This can be done automatically as part of the build environment by embedding security tests for functional testing / negative testing

- Perform code signing to detect future changes (such as malware)
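The fuzzing item above can be sketched as a small negative-testing harness that runs inside the build. This is a minimal sketch, not a replacement for a dedicated fuzzer: `parse_length_prefixed` is a hypothetical function under test, and the harness only feeds it random bytes and records any exception other than the documented `ValueError`.

```python
import random
from typing import Callable, List, Tuple


def parse_length_prefixed(data: bytes) -> bytes:
    """Hypothetical function under test: the first byte declares the payload length."""
    if not data:
        raise ValueError("empty input")
    length = data[0]
    payload = data[1:1 + length]
    if len(payload) != length:
        raise ValueError("declared length exceeds available data")
    return payload


def fuzz(
    target: Callable[[bytes], object], iterations: int = 1000, seed: int = 0
) -> List[Tuple[bytes, Exception]]:
    """Feed random byte strings to target and collect inputs that cause unexpected errors."""
    rng = random.Random(seed)  # fixed seed so a failing build can be reproduced
    crashes: List[Tuple[bytes, Exception]] = []
    for _ in range(iterations):
        data = bytes(rng.randrange(256) for _ in range(rng.randrange(0, 64)))
        try:
            target(data)
        except ValueError:
            pass  # documented rejection of malformed input
        except Exception as exc:  # anything else is treated as a finding
            crashes.append((data, exc))
    return crashes


if __name__ == "__main__":
    findings = fuzz(parse_length_prefixed)
    print(f"{len(findings)} unexpected failures")
```

In memory-safe languages a harness like this surfaces unhandled exceptions rather than buffer overflows; for native code the same idea is usually paired with a sanitizer-enabled build.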

Software packaging release phase

This stage is between the packaging and deployment stages.

At this stage, it is important to embed the following aspects:

- Compare code signature with the original signature from the software packaging stage

- Conduct integrity checks to the software package

- Deploy the software package to a development environment and conduct automated or stress tests

- Deploy the software package in a blue/green methodology for software quality and further security quality tests
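The signature comparison and integrity check from the list above could look roughly like the following. This is a simplified sketch under a stated assumption: it uses an HMAC with a shared key as a stand-in for a signature, whereas real code signing normally relies on asymmetric keys and certificates. The function names are hypothetical.

```python
import hashlib
import hmac


def package_digest(package: bytes) -> str:
    """SHA-256 digest of the package, recorded at the packaging stage."""
    return hashlib.sha256(package).hexdigest()


def sign_package(package: bytes, key: bytes) -> str:
    """Stand-in 'signature': an HMAC-SHA256 over the package contents."""
    return hmac.new(key, package, hashlib.sha256).hexdigest()


def verify_package(package: bytes, key: bytes, expected_signature: str) -> bool:
    """Recompute the signature at release time and compare in constant time."""
    return hmac.compare_digest(sign_package(package, key), expected_signature)


if __name__ == "__main__":
    key = b"demo-key-from-a-vault"      # assumption: fetched from a secrets vault
    build = b"contents of the packaged build"
    signature = sign_package(build, key)            # packaging stage
    print(verify_package(build, key, signature))    # release stage, untouched build
    print(verify_package(build + b"!", key, signature))  # tampered build
```

Any mismatch between the recorded and the recomputed value should stop the release, since it means the package changed after it was packaged.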

Software deployment phase

At this stage, the software package (such as mobile application code, docker container, etc.) is moving to the deployment stage.

At this stage, it is important to embed the following aspects:

- Review permissions on destination folder (in case of code deployment for web servers)

- Review permissions for Docker registry

- Review permissions for further services in a cloud environment (such as storage, database, application, etc.) and fine-tune the service role for running the code
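For the first item in the list above, a permissions review of a destination folder can be partly automated. The sketch below assumes a POSIX file system and flags anything under the deployment folder that is writable by all users; the function name and the demo paths are hypothetical, and a real review would also look at ownership and group permissions.

```python
import os
import stat
import tempfile
from typing import List


def find_world_writable(root: str) -> List[str]:
    """Return paths under root whose mode grants write access to 'others'."""
    findings: List[str] = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            mode = os.lstat(path).st_mode
            if stat.S_ISLNK(mode):
                continue  # a symlink's own mode bits are not meaningful here
            if mode & stat.S_IWOTH:
                findings.append(path)
    return sorted(findings)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as deploy_dir:
        config = os.path.join(deploy_dir, "app.conf")
        with open(config, "w") as handle:
            handle.write("debug=false\n")
        os.chmod(config, 0o666)  # deliberately too permissive for the demo
        print(find_world_writable(deploy_dir))
```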

Configure / operate / Tune phase

At this stage, the development is in the production phase and undergoes modifications (according to business requirements) and on-going maintenance.

At this stage, it is important to embed the following aspects:

- Patch management processes or configuration management processes using tools such as Chef, Ansible, etc.

- Scanning process for detecting vulnerabilities using vulnerability assessment tools

- Deleting vulnerable environments and re-deploying them as up-to-date environments (if possible)

On-going monitoring phase

At this stage, constant application monitoring is being conducted by the infrastructure or monitoring teams.

At this stage, it is important to embed the following aspects:

- Run RASP (Runtime application self-protection) tools

- Implement defense at the application layer using WAF (Web application firewall) products

- Implement products for defending the application from Botnet attacks

- Implement products for defending the application from DoS / DDoS attacks

- Conduct penetration testing

- Implement a monitoring solution that uses automated rules, such as automated recovery from sensitive changes (tools such as GuardRails)
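The idea of automated rules that recover from sensitive changes, mentioned in the last item above, can be illustrated with a simple baseline comparison. This is only a sketch of the general pattern and not how any particular product works; the setting names and both helper functions are hypothetical.

```python
from typing import Any, Dict, Tuple


def detect_drift(
    baseline: Dict[str, Any], current: Dict[str, Any]
) -> Dict[str, Tuple[Any, Any]]:
    """Return {setting: (expected, actual)} for every setting that left the baseline."""
    drift: Dict[str, Tuple[Any, Any]] = {}
    for key, expected in baseline.items():
        actual = current.get(key)
        if actual != expected:
            drift[key] = (expected, actual)
    return drift


def remediate(baseline: Dict[str, Any], current: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of current with the baseline value restored for drifted settings."""
    fixed = dict(current)
    for key, (expected, _actual) in detect_drift(baseline, current).items():
        fixed[key] = expected
    return fixed


if __name__ == "__main__":
    # Hypothetical baseline for a storage service.
    baseline = {"public_access": False, "encryption": "enabled"}
    current = {"public_access": True, "encryption": "enabled", "region": "eu"}
    print(detect_drift(baseline, current))
    print(remediate(baseline, current))
```

Settings that are not part of the baseline (such as `region` here) are left untouched, which keeps the rule focused on the sensitive changes it was written for.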

Security recommendations for developments based on CI/CD / DevOps process

- It is highly recommended to perform on-going training for the development and DevOps teams on security aspects and secure development

- It is highly recommended to nominate a security champion among the development and DevOps teams, in order to allow them to conduct threat modeling at early stages of the development lifecycle and to embed security aspects as soon as possible in the development lifecycle

- Use automated tools for deploying environments in a simple and standard form.

Tools such as Puppet require root privileges for the folders they have access to. In order to lower the risk, it is recommended to enable folder access auditing.

- Avoid storing passwords and access keys hard-coded inside scripts and code.

- It is highly recommended to store credentials (SSH keys, privileged credentials, API keys, etc.) in a vault (Solutions such as HashiCorp vault or CyberArk).

- It is highly recommended to limit privileged access based on role (Role based access control), following the principle of least privilege.

- It is recommended to perform network separation between production environment and Dev/Test environments.

- Restrict all developer teams’ access to production environments, and allow only DevOps team’s access to production environments.

- Enable auditing and access control for all development environments and identify anomalous access attempts (such as a developer’s attempt to access a production environment)

- Make sure sensitive data (such as customer data, credentials, etc.) doesn’t pass in clear text in transit. If there is a business requirement for passing sensitive data in transit, make sure the data is passed over encrypted protocols (such as SSH v2 or TLS 1.2 and later), using strong cipher suites.

- It is recommended to follow OWASP organization recommendations (such as OWASP Top10, OWASP ASVS, etc.)

- When using Containers, it is recommended to use well-known and signed repositories.

- When using Containers, it is recommended not to blindly trust open source libraries inside the containers, and to scan for vulnerable versions (including dependencies) during the build creation process.

- When using Containers, it is recommended to perform hardening using guidelines such as CIS Docker Benchmark or CIS Kubernetes Benchmark.

- It is recommended to deploy automated tools for on-going tasks, starting from build deployments, through code review for detecting vulnerabilities in the code and in open source code, to patch management processes that are embedded inside the development and build process.

- It is recommended to perform scanning to detect security weaknesses, using vulnerability management tools during the entire system lifetime.

- It is recommended to deploy configuration management tools, in order to detect and automatically remediate configuration deviations from the original configuration.
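As an illustration of the recommendation above to avoid hard-coded passwords and access keys, a build step can scan source files for obvious offenders before they are committed. The sketch below is deliberately simple: the two patterns and the `scan_source` helper are hypothetical examples, and dedicated secret-scanning tools cover far more cases.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real scanners ship many more rules.
PATTERNS = {
    "password assignment": re.compile(
        r"""(?:password|passwd|secret|api_key)\s*=\s*['"][^'"]+['"]""",
        re.IGNORECASE,
    ),
    "private key header": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}


def scan_source(text: str) -> List[Tuple[int, str]]:
    """Return (line number, finding label) for each line matching a pattern."""
    findings: List[Tuple[int, str]] = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, label))
    return findings


if __name__ == "__main__":
    sample = (
        'user = "app"\n'
        'db_password = "hunter2"\n'           # hard-coded: should be flagged
        'token = os.environ["TOKEN"]\n'       # read at runtime: not flagged
    )
    for lineno, label in scan_source(sample):
        print(f"line {lineno}: {label}")
```

A value read from the environment or fetched from a vault at runtime does not match, which is the behaviour the recommendations in this list aim for.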

This article was written by Eyal Estrin, cloud security architect and Vitaly Unic, application security architect.

4 Ways To Learn About Internet Security

What Is Internet Security And Why Is It Important?

While the digital age has revolutionized the way we communicate, interact, buy and sell products and search for information, it has also created risks that did not exist before. The internet, while extraordinary, is not always the safest environment, and learning how to protect yourself, your business and your data is an important part of being an internet user. Internet security is a term that encompasses all of the strategies, processes and means an individual or company might use to protect themselves online, including browser security, data protection, authentication and security for any transactions made on the web. It is about securing and protecting your networks and ensuring your privacy online.

With more and more people using the internet every day, more and more information is being processed online, and this means huge amounts of data are being moved around the web. Sadly, this has led to the rise of new types of cybercrime and more opportunities for those looking to act criminally online to do so. If you use the internet in any way, whether for personal or professional reasons, such as for social media, emailing, banking, running a website, buying groceries or publishing content, you need to be thinking about your internet security and how to keep yourself safe online.

Whether you want to protect yourself or your business, it is important to know and understand internet security and the best methods for protecting yourself. Here are four ways you can begin to learn about internet security.

- Take An Online Course

If you are serious about learning more about internet security and using your knowledge to help you professionally, then you may consider undertaking an online course on internet and network security. This is one of the more thorough and structured ways to learn everything there is to know about internet security and what strategies you can implement yourself. By doing an online course, diploma or degree, you know that you are learning from teachers and tutors who know what they are talking about which allows you to gain valuable skills and knowledge. With a qualification at the end of the course, you can then put this knowledge to good use and help others develop advanced internet security mechanisms.

- Read Blogs

One of the great things about the internet is that you can find a wealth of information online about any topic that you are interested in. Whether it is baking, travel, fashion, or sports, you can find websites and blogs that help keep you up to date with what is going on in each of these areas. The same can be said for security. If you are interested in learning a bit more about security in your own time, then doing some online research is a good way to begin. Many experts out there understand the importance of network security and write about it. The good thing about reading blogs is that you can find blogs suitable for all levels of knowledge about network security. Whether you have very limited knowledge and are looking to understand basic terminology or you are more experienced and hoping to be introduced to more complex problems, you can find blogs that will be tailored to both.

- Check Out YouTube

YouTube may be a great platform for watching funny animal videos or music video clips, but it is also a great online learning resource. There are many channels on YouTube that provide online learning videos, which offer a more hands-on approach to learning about internet security. With the videos, you can see the steps behind different processes in internet security, and concepts that may be difficult to understand when you read about them can be more easily explained in a visual manner. Once you understand the basics of cybersecurity, YouTube is a great way to learn about how to use certain tools in a systematic fashion.

- Read Some Books

It may seem ironic to read about internet security from a book but there are some great books and textbooks out there that are focused on internet security. These books are usually written by experts in the field who really know their stuff. Whether you want to learn about hacking, malware, security systems or privacy, chances are there is a book on the subject that will cover every aspect of the topic. Check out Amazon or your local library to see if they have any books that will interest you.