Archive for the ‘encryption’ Category

Where is the OSI model in the public cloud?

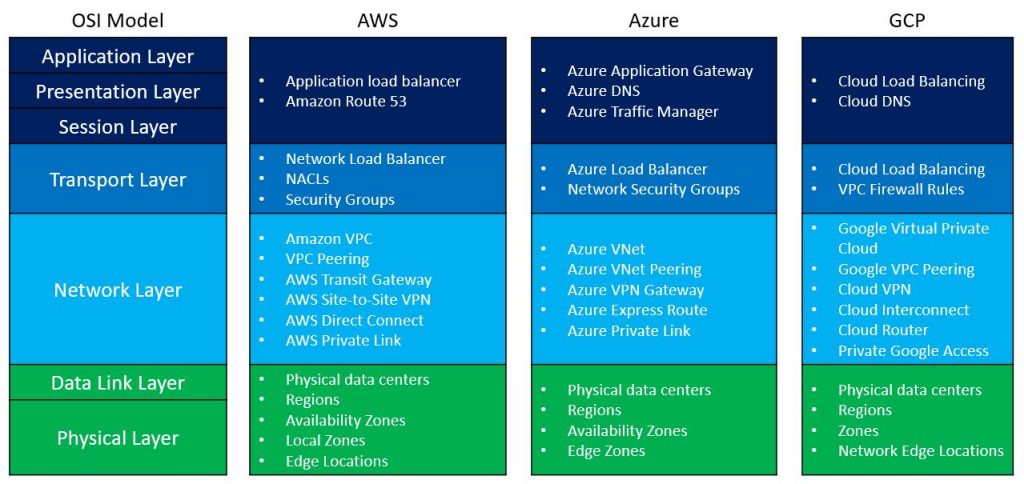

When talking about the public cloud, I always like the analogy to the OSI model.

“The Open Systems Interconnection model (OSI model) is a conceptual model. Communications between a computing system are split into seven different abstraction layers: Physical, Data Link, Network, Transport, Session, Presentation, and Application” (Wikipedia)

A similar but shorter model is the TCP/IP model.

Here is a comparison of the two models:

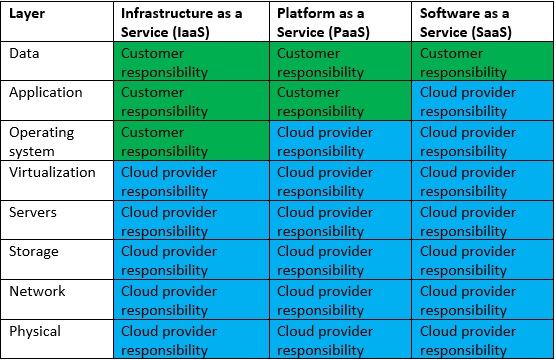

In the public cloud, we find a similar concept in the shared responsibility model, which draws the line of responsibility (usually in terms of security) between the public cloud provider and its customers for each cloud service model, as we can see in the diagram below:

Where do public cloud services fit in the OSI model?

Each of the major public cloud providers offers many network-related services.

To make things easy to understand, I have prepared the following diagram, comparing common network-related services to the various OSI model layers:

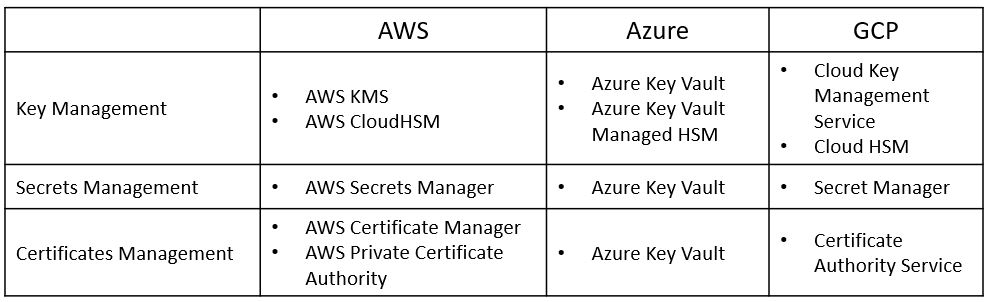

Encryption / Cryptography and the OSI Model

Layer 6 of the OSI model is the presentation layer.

Among the things we can find in this layer is data encryption.

Encryption in this context means encryption at rest, covering object storage, block storage, file storage, and various data services.

Encryption includes symmetric and asymmetric encryption keys, secrets, passwords, API keys, certificates, etc.

The process includes the generation, storage, retrieval, and rotation of encryption keys.
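The lifecycle described above (generation, storage, retrieval, and rotation) can be sketched in a few lines of Python. This is only an illustrative in-memory model, not any provider's key management API; the `KeyStore` class and its method names are hypothetical.

```python
import secrets
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class KeyStore:
    """Hypothetical in-memory key store; a real service keeps keys in protected hardware."""
    # Maps a key name to its list of versions (newest last).
    versions: dict[str, list[bytes]] = field(default_factory=dict)

    def generate(self, name: str, size: int = 32) -> int:
        """Generate a random key (32 bytes = 256 bits), store it, and return its version number."""
        self.versions.setdefault(name, []).append(secrets.token_bytes(size))
        return len(self.versions[name])

    def retrieve(self, name: str, version: Optional[int] = None) -> bytes:
        """Return the requested version, or the newest one when no version is given."""
        stored = self.versions[name]
        return stored[-1] if version is None else stored[version - 1]

    def rotate(self, name: str) -> int:
        """Add a new version of an existing key; old versions stay readable for old data."""
        if name not in self.versions:
            raise KeyError(name)
        return self.generate(name)
```

Keeping older versions after a rotation is a design choice made for this sketch: data encrypted under a previous version can still be decrypted, while new data uses the newest key.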

Here are the most common encryption/cryptography-related services:

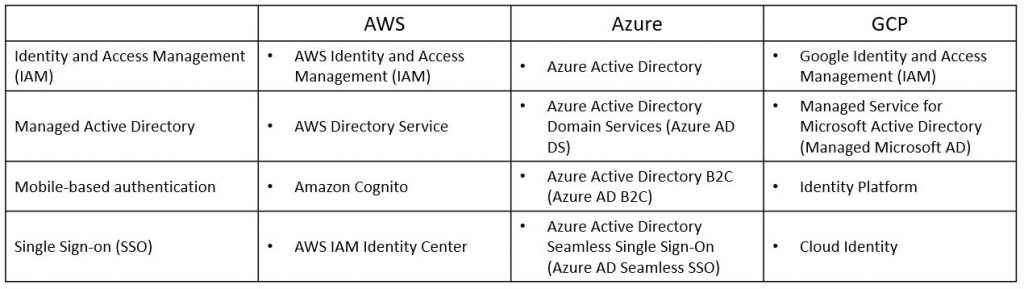

Identity Management and the OSI Model

Layer 7 of the OSI model is the application layer.

Among the things we can find in this layer are authentication and authorization, or identity management as a whole.

Identity management is about managing the entire lifecycle of an identity, whether it belongs to an end user, a service account, a computer account, etc.

The process includes account provisioning, password management (and MFA), permission management (role assignments), and finally account de-provisioning.
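As a rough illustration of that lifecycle, the sketch below walks one identity through provisioning, MFA enablement, role assignment, and de-provisioning. The `Identity` and `Directory` classes are hypothetical and exist only for this example; they do not mirror any provider's IAM API.

```python
from dataclasses import dataclass, field


@dataclass
class Identity:
    """Hypothetical identity record (end user, service account, or computer account)."""
    name: str
    kind: str
    mfa_enabled: bool = False
    roles: set[str] = field(default_factory=set)
    active: bool = True


class Directory:
    """Hypothetical in-memory directory covering the lifecycle steps."""

    def __init__(self) -> None:
        self.identities: dict[str, Identity] = {}

    def provision(self, name: str, kind: str) -> Identity:
        identity = Identity(name=name, kind=kind)
        self.identities[name] = identity
        return identity

    def enable_mfa(self, name: str) -> None:
        self.identities[name].mfa_enabled = True

    def assign_role(self, name: str, role: str) -> None:
        self.identities[name].roles.add(role)

    def deprovision(self, name: str) -> None:
        # De-provisioning removes every role and disables the account.
        identity = self.identities[name]
        identity.roles.clear()
        identity.active = False
```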

Here are the most common identity-related services:

How does everything come together?

When reviewing a cloud architecture, I like to compare the various services in the architecture to the different layers of the OSI model, from the bottom up:

- Network connectivity and traffic flow

- Encryption (according to data sensitivity)

- Authentication and Authorization (according to the least privilege principle)

The OSI model analogy helps me make sure I do not forget any important aspect when reviewing an architecture for a cloud workload.
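The bottom-up review order can be written down as a small lookup. In the sketch below, the layer numbers for encryption (6) and identity (7) follow the sections above; placing network connectivity at layer 3 is a simplifying assumption made only for this example, and the function name is hypothetical.

```python
# The seven OSI layers, numbered from the bottom up.
OSI_LAYERS = {
    1: "Physical",
    2: "Data Link",
    3: "Network",
    4: "Transport",
    5: "Session",
    6: "Presentation",
    7: "Application",
}

# Review topics from the checklist above. Mapping network connectivity
# to layer 3 is an assumption made for this sketch.
REVIEW_TOPICS = {
    "Authentication and authorization": 7,
    "Encryption": 6,
    "Network connectivity and traffic flow": 3,
}


def review_order(topics: dict[str, int]) -> list[str]:
    """Return the review topics sorted from the lowest OSI layer to the highest."""
    ordered = sorted(topics.items(), key=lambda item: item[1])
    return [f"Layer {layer} ({OSI_LAYERS[layer]}): {topic}" for topic, layer in ordered]
```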

Not all cloud providers are built the same

When organizations debate workload migration to the cloud, they begin to realize how many public cloud alternatives exist, from the U.S. hyperscale cloud providers to several small and medium European and Asian providers.

The more we study the differences between the cloud providers (both IaaS/PaaS and SaaS providers), the more we realize that not all cloud providers are built the same.

How can we select a mature cloud provider from all the alternatives?

Transparency

Mature cloud providers make sure you don’t have to search their website to locate their security compliance documents, and allow you to download their security controls documentation, such as SOC 2 Type II, CSA STAR, CSA Cloud Controls Matrix (CCM), etc.

What happens if we wish to evaluate the cloud provider by ourselves?

Will the cloud provider (no matter which cloud service model) allow me to conduct a security assessment (or even a penetration test) to check the effectiveness of its security controls?

Global presence

When evaluating cloud providers, ask yourself the following questions:

- Does the cloud provider have a local presence near my customers?

- Will I be able to deploy my application in multiple countries around the world?

- In case of an outage, will I be able to continue serving my customers from a different location with minimal effort?

Scale

When deploying an application for the first time, we might not think about it, but what happens in the peak scenario?

Will the cloud provider allow me to deploy hundreds or even thousands of VMs (or even better, containers) in a short amount of time, for a short period, from the same location?

Will the cloud provider let me store my data in cloud storage at practically unlimited scale, without having to guess or estimate the storage size?

Multi-tenancy

As customers, we expect our cloud providers to offer us a fully private environment.

We never want to hear about a “noisy neighbor” (where one customer uses a lot of resources, which eventually affects other customers), and we never want to hear a provider admit that some or all of the resources (VMs, databases, storage, etc.) are being shared among customers.

Will the cloud provider be able to offer me a commitment to isolation in a multi-tenant environment?

Stability

One of the major reasons for migrating to the cloud is the ability to re-architect our services, whether we are still using VMs based on IaaS, databases based on PaaS, or fully managed CRM services based on SaaS.

In all scenarios, we would like to have a stable service with zero downtime.

Will the cloud provider allow me to deploy a service in a redundant architecture that will survive a data center outage or infrastructure availability issues (in authentication services, compute, storage, or even network infrastructure) and return to business with minimal effect on customers?

APIs

In the modern cloud era, everything is based on APIs (application programming interfaces).

Will the cloud provider offer me various APIs?

From deploying an entire production environment in minutes using Infrastructure as Code, to monitoring the performance and cost of our services, to security auditing, everything should be possible through an API. Otherwise, the service is simply not scalable, mature, automated, or standard, and is prone to human mistakes.

Data protection

Encrypting data in transit using TLS 1.2 is a common standard, but what about encryption at rest?

Will the cloud provider allow me to encrypt a database, object storage, or a simple NFS storage using my encryption keys, inside a secure key management service?

Will the cloud provider allow me to automatically rotate my encryption keys?

What happens if I need to store secrets (credentials, access keys, API keys, etc.)? Will the cloud provider allow me to store my secrets in a secured, managed, and audited location?

If I am about to store extremely sensitive data (PII, credit card details, healthcare data, or even military secrets), will the cloud provider offer me a confidential computing solution that protects sensitive data even while it is in memory (in use)?

Well architected

A mature cloud provider has a vast amount of expertise and shares it with you: how to build an architecture that is secure, reliable, performance-efficient, and cost-optimized, and how to continually improve the processes you have built.

Will the cloud provider offer me rich documentation on how to achieve all the above-mentioned goals, so that I can provide my customers with the best experience?

Will the cloud provider offer me an automated solution for deploying an entire application stack within minutes from a large marketplace?

Cost management

The more we broaden our use of IaaS / PaaS services, the more we realize that almost every service has its price tag.

We might not prepare for this in advance, but once we begin to receive the monthly bill, we begin to see that we pay a lot of money, sometimes for services we don’t need, or for an expensive tier of a specific service.

Unlike on-premises environments, most cloud providers offer us ways to lower the monthly bill or to pay only for what we consume.

Regarding cost management, ask yourself the following questions:

- Will the cloud provider charge me for services when I am not consuming them?

- Will the cloud provider offer me detailed reports that will allow me to find out what I am paying for?

- Will the cloud provider offer me documents and best practices for saving costs?

Summary

Answering the above questions about your preferred cloud provider will allow you to differentiate a mature cloud provider from the rest of the alternatives, and will assure you that you have made the right choice.

The answers will provide you with confidence, both when working with a single cloud provider, and when taking a step forward and working in a multi-cloud environment.

References

Security, Trust, Assurance, and Risk (STAR)

https://cloudsecurityalliance.org/star/

SOC 2 – SOC for Service Organizations: Trust Services Criteria

https://www.aicpa.org/interestareas/frc/assuranceadvisoryservices/aicpasoc2report.html

Confidential Computing and the Public Cloud

https://eyal-estrin.medium.com/confidential-computing-and-the-public-cloud-fa4de863df3

Confidential computing: an AWS perspective

https://aws.amazon.com/blogs/security/confidential-computing-an-aws-perspective/

AWS Well-Architected

https://aws.amazon.com/architecture/well-architected

Azure Well-Architected Framework

https://docs.microsoft.com/en-us/azure/architecture/framework/

Google Cloud’s Architecture Framework

https://cloud.google.com/architecture/framework

Oracle Architecture Center

https://docs.oracle.com/solutions/

Alibaba Cloud’s Well-Architectured Framework

The Future of Data Security Lies in the Cloud

We have recently read a lot of posts about the SolarWinds hack, a compromise of popular monitoring software used by many organizations around the world.

This is a good example of a supply chain attack, which can happen to any organization.

We have seen similar scenarios over the past decade, including the Heartbleed bug, Meltdown and Spectre, Apache Struts, and more.

Organizations all around the world were affected by the SolarWinds hack, including the cybersecurity company FireEye, and Microsoft.

Events like these make organizations rethink their cybersecurity and data protection strategies and ask important questions.

Recent changes in the European data protection laws and regulations (such as Schrems II) are trying to limit data transfer between Europe and the US.

Should such security breaches occur? Absolutely not.

Should we live with the fact that such large organizations have been breached? Absolutely not!

Should organizations that have already invested a lot of resources in cloud migration move workloads back on-premises? I don’t think so.

But no organization, not even major financial organizations like banks or insurance companies, or even the largest multinational enterprises, has as much manpower, knowledge, and budget to invest in properly protecting its own data or its customers’ data as the hyperscale cloud providers do.

There are several reasons for this:

- Hyperscale cloud providers invest billions of dollars improving security controls, including dedicated and highly trained personnel.

- A breach of customers’ data that resides at a hyperscale cloud provider can drive that provider out of business, due to the loss of customers’ trust.

- Security is important to most organizations; however, it is not their main line of expertise.

Organizations need to focus on the core business that brings them value, like manufacturing, banking, healthcare, education, etc., and rethink how to obtain services that support their business goals but do not add direct value, such as IT services.

Recommendations for managing security

Security Monitoring

Security best practices often state: “document everything”.

There are two downsides to this recommendation: first, storage capacity is limited, and second, most organizations do not have enough trained manpower to review the logs and find the top incidents to handle.

Switching security monitoring to cloud-based managed systems such as Azure Sentinel or Amazon GuardDuty will help detect important incidents, while the service handles the huge volume of logs internally.

Encryption

Another security best practice states: “encrypt everything”.

A few years ago, encryption was quite a challenge. Will the service/application support the encryption? Where do we store the encryption key? How do we manage key rotation?

In the past, only banks could afford HSM (Hardware Security Module) for storing encryption keys, due to the high cost.

Today, encryption is standard for most cloud services, backed by key management services such as AWS KMS, Azure Key Vault, Google Cloud KMS, and Oracle Key Management.

Most cloud providers not only support encryption at rest, but also support customer-managed keys, which allow customers to generate their own encryption key for each service instead of using the cloud provider’s generated encryption key.

Security Compliance

Most organizations struggle to handle security compliance over large on-premises environments, not to mention large IaaS environments.

This issue can be solved by using managed compliance services such as AWS Security Hub, Azure Security Center, Google Security Command Center or Oracle Cloud Access Security Broker (CASB).

DDoS Protection

Any organization exposing services to the Internet (from a public-facing website, through email or DNS services, to a VPN service) will eventually suffer a volumetric denial-of-service attack.

Only large ISPs have enough bandwidth to handle such an attack before the border gateway (firewall, external router, etc.) crashes or stops handling incoming traffic.

The hyperscale cloud providers have infrastructure that can handle DDoS attacks against their customers, through services such as AWS Shield, Azure DDoS Protection, Google Cloud Armor, or Oracle Layer 7 DDoS Mitigation.

Using SaaS Applications

In the past, organizations had to maintain their entire infrastructure, from messaging systems to CRM, ERP, etc.

They had to think about scale, resilience, security, and more.

Most breaches of cloud environments originate from misconfigurations at the customers’ side on IaaS / PaaS services.

Today, the preferred way is to consume managed services in SaaS form.

These are a few examples: Microsoft Office 365, Google Workspace (Formerly Google G Suite), Salesforce Sales Cloud, Oracle ERP Cloud, SAP HANA, etc.

Limit the Blast Radius

To limit the “blast radius”, where an outage or security breach in one service affects other services, we need to re-architect infrastructure.

Switching from applications deployed inside virtual servers to modern development such as microservices based on containers, or building new applications based on serverless (or function as a service), will help organizations limit the attack surface and the impact of possible future breaches.

Examples of these services: Amazon ECS, Amazon EKS, Azure Kubernetes Service, Google Kubernetes Engine, Google Anthos, Oracle Container Engine for Kubernetes, AWS Lambda, Azure Functions, Google Cloud Functions, Google Cloud Run, Oracle Cloud Functions, etc.

Summary

The bottom line: organizations can improve their security posture by using the public cloud to better protect their data, use the expertise of cloud providers, and invest their time in their core business to maximize value.

Security breaches are inevitable. Shifting to cloud services does not shift an organization’s responsibility to secure its data. It simply lets the organization do it better.

Confidential Computing and the Public Cloud

What exactly is “confidential computing” and what are the reasons and benefits for using it in the public cloud environment?

Introduction to data encryption

To protect data stored in the cloud, we usually use one of the following methods:

· Encryption in transit: Data transferred over the public Internet can be encrypted using the TLS protocol. This method prevents unwanted participants from entering the conversation.

· Encryption at rest: Data stored at rest, such as databases, object storage, etc., can be encrypted using symmetric encryption, which means using the same encryption key to encrypt and decrypt the data. This commonly uses the AES-256 algorithm.

When we wish to access encrypted data, we need to decrypt it in the computer’s memory in order to read and update it.

This is where confidential computing comes in: it tries to close the gap left between data at rest and data in transit.

Confidential computing uses hardware to isolate data. Data is protected while in use by processing it inside a trusted execution environment (TEE).

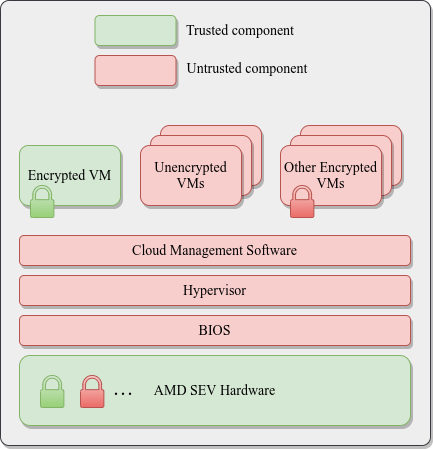

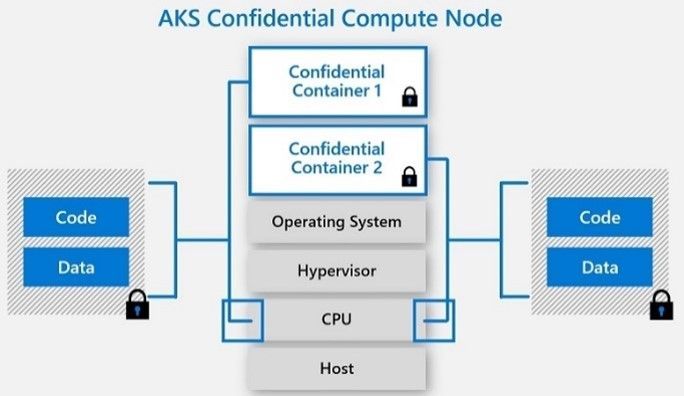

As of November 2020, confidential computing is supported by Intel Software Guard Extensions (SGX) and AMD Secure Encrypted Virtualization (SEV), based on AMD EPYC processors.

Comparison of the available options

| | Intel SGX | Intel SGX2 | AMD SEV 1 | AMD SEV 2 |
| --- | --- | --- | --- | --- |
| Purpose | Microservices and small workloads | Machine Learning and AI | Cloud and IaaS workloads (above the hypervisor), suitable for legacy applications or large workloads | Cloud and IaaS workloads (above the hypervisor), suitable for legacy applications or large workloads |
| Cloud VM support (November 2020) | – | | | |
| Cloud containers support (November 2020) | – | – | | |
| Operating system supported | Windows, Linux | Linux | Linux | Linux |
| Memory limitation | Up to 128 MB | Up to 1 TB | Up to available RAM | Up to available RAM |
| Software changes | Require software rewrite | Require software rewrite | Not required | – |

Reference Architecture

AMD SEV Architecture:

Azure Kubernetes Service (AKS) Confidential Computing:

References

· Confidential Computing: Hardware-Based Trusted Execution for Applications and Data

· Google Cloud Confidential VMs vs Azure Confidential Computing

https://msandbu.org/google-cloud-confidential-vms-vs-azure-confidential-computing/

· A Comparison Study of Intel SGX and AMD Memory Encryption Technology

https://caslab.csl.yale.edu/workshops/hasp2018/HASP18_a9-mofrad_slides.pdf

· SGX-hardware list

https://github.com/ayeks/SGX-hardware

· Performance Analysis of Scientific Computing Workloads on Trusted Execution Environments

https://arxiv.org/pdf/2010.13216.pdf

· Helping Secure the Cloud with AMD EPYC Secure Encrypted Virtualization

https://developer.amd.com/wp-content/resources/HelpingSecuretheCloudwithAMDEPYCSEV.pdf

· Azure confidential computing

https://azure.microsoft.com/en-us/solutions/confidential-compute/

· Azure and Intel commit to delivering next generation confidential computing

· DCsv2-series VM now generally available from Azure confidential computing

· Confidential computing nodes on Azure Kubernetes Service (public preview)

https://docs.microsoft.com/en-us/azure/confidential-computing/confidential-nodes-aks-overview

· Expanding Google Cloud’s Confidential Computing portfolio

· A deeper dive into Confidential GKE Nodes — now available in preview

https://cloud.google.com/blog/products/identity-security/confidential-gke-nodes-now-available

· Using HashiCorp Vault with Google Confidential Computing

https://www.hashicorp.com/blog/using-hashicorp-vault-with-google-confidential-computing

· Confidential Computing is cool!

https://medium.com/google-cloud/confidential-computing-is-cool-1d715cf47683

· Data-in-use protection on IBM Cloud using Intel SGX

https://www.ibm.com/cloud/blog/data-use-protection-ibm-cloud-using-intel-sgx

· Why IBM believes Confidential Computing is the future of cloud security

· Alibaba Cloud Released Industry’s First Trusted and Virtualized Instance with Support for SGX 2.0 and TPM

Best Practices for Deploying New Environments in the Cloud for the First Time

When organizations take their first steps to use public cloud services, they tend to look at a specific target.

My recommendation – think scale!

Plan a couple of steps ahead instead of looking at a single server that serves just a few customers. Think about a large environment comprising hundreds or thousands of servers, serving 10,000 customers concurrently.

Planning will allow you to manage the environment (infrastructure, information security, and budget) when you do reach a scale of thousands of concurrent customers. The more we plan the deployment of new environments in advance, according to their business purposes and the resources required for each environment, the easier it will be to scale up while maintaining a high level of security, budget control, change management, and more.

In this three-part blog series, we will review some of the most important topics that will help avoid mistakes while building new cloud environments for the first time.

Resource allocation planning

The first step in resource allocation planning is to decide whether to divide resources based on the organizational structure (sales, HR, infrastructure, etc.) or based on environments (production, development, testing, etc.).

In order to avoid mixing resources (or access rights) between various environments, the best practice is to separate the environments as follows:

- Shared resources account (security products, auditing, billing management, etc.)

- Development environment account (consider creating a separate account for test environment purposes)

- Production environment account

Separating different accounts or environments can be done using:

- Azure Subscriptions or Azure Resource Groups

- AWS Accounts

- GCP Projects

- Oracle Cloud Infrastructure Compartments

Tagging resources

Even when deploying a single server inside a network environment (AWS VPC, Azure VNet, GCP VPC), it is important to tag resources. Tags identify which resources belong to which projects / departments / environments, for billing purposes.

Common tagging examples:

- Project

- Department

- Environment (Prod, Dev, Test)

Beyond tagging, it is recommended to add a description to resources that support this kind of metadata, in order to locate resources by their intended use.
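A tagging convention is easier to keep when it is checked automatically. The sketch below validates one resource's tags against the three example tags listed above; the function name and the plain dictionary input are assumptions for illustration, not a cloud provider API.

```python
REQUIRED_TAGS = ("Project", "Department", "Environment")
ALLOWED_ENVIRONMENTS = {"Prod", "Dev", "Test"}


def tag_problems(resource_tags: dict[str, str]) -> list[str]:
    """Return the problems found in one resource's tags (an empty list means compliant)."""
    # A tag counts as missing when it is absent or has an empty value.
    problems = [f"missing tag: {tag}" for tag in REQUIRED_TAGS if not resource_tags.get(tag)]
    env = resource_tags.get("Environment")
    if env and env not in ALLOWED_ENVIRONMENTS:
        problems.append(f"unexpected Environment value: {env}")
    return problems
```

For example, a resource tagged only with `Project` and an `Environment` of `QA` would be reported as missing `Department` and as using an unexpected environment value.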

Authentication, Authorization and Password Policy

In order to ease the management of accounts in the cloud (and in the future, multiple accounts according to the various environments), the best practice is to follow the rules below:

- Central authentication – In case the organization isn’t using Active Directory for central account management and access rights, the alternative is to use managed services such as AWS IAM, Google Cloud IAM, Azure AD, Oracle Cloud IAM, etc.

If a managed IAM service is chosen, it is critical to set its password policy according to the organization’s password policy (minimum password length, password complexity, password history, etc.)

- If a central directory service is used by the organization, it is recommended to connect and sync the managed IAM service in the cloud to the organization’s central directory service on premises (federated authentication).

- It is crucial to protect privileged accounts in the cloud environment (such as AWS Root Account, Azure Global Admin, Azure Subscription Owner, GCP Project Owner, Oracle Cloud Service Administrator, etc.) by, among other things, limiting the use of privileged accounts to the minimum required, enforcing complex passwords, rotating passwords every few months, and enabling multi-factor authentication and auditing on privileged accounts.

- Access to resources should be defined according to the least privilege principle.

- Access to resources should be set to groups instead of specific users.

- Access to resources should be based on roles in AWS, Azure, GCP, Oracle Cloud, etc.
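The password policy elements mentioned in the list above (minimum length, complexity, and history) can be sketched as a simple check. The default values and names below are hypothetical; a real deployment would use the organization's own requirements and the policy settings of the chosen IAM service.

```python
import string
from dataclasses import dataclass


@dataclass(frozen=True)
class PasswordPolicy:
    """Hypothetical policy values; replace them with your organization's requirements."""
    min_length: int = 14
    history_size: int = 5  # how many previous passwords may not be reused


def password_violations(password: str, previous: list[str], policy: PasswordPolicy) -> list[str]:
    """Return the policy rules that a candidate password violates."""
    violations = []
    if len(password) < policy.min_length:
        violations.append("too short")
    # Complexity: require lowercase, uppercase, digit, and punctuation characters.
    classes = (string.ascii_lowercase, string.ascii_uppercase, string.digits, string.punctuation)
    if not all(any(ch in cls for ch in password) for cls in classes):
        violations.append("not complex enough")
    # History: only the most recent passwords count. A real system would compare
    # salted hashes and never keep previous passwords in plain text.
    if password in previous[-policy.history_size:]:
        violations.append("reused password")
    return violations
```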

Audit Trail

It is important to enable auditing in all cloud environments, in order to gain insight into access to resources, actions performed in the cloud environment, and who performed them. This matters for both security and change management reasons.

Common managed audit trail services:

- AWS CloudTrail – It is recommended to enable auditing on all regions and forward the audit logs to a central S3 bucket in a central AWS account (which will be accessible only to a limited number of user accounts).

- Azure Monitor – When working with Azure, it is recommended to enable Azure Monitor in the first phase, in order to audit all access to resources and actions done inside the subscription. Later on, when the environment expands, you may consider using services such as Azure Security Center and Azure Sentinel for auditing purposes.

- Google Cloud Logging – It is recommended to enable auditing on all GCP projects and forward the audit logs to a central GCP project (which will be accessible only to a limited number of user accounts).

- Oracle Cloud Infrastructure Audit service – It is recommended to enable auditing on all compartments and forward the audit logs to the Root compartment account (which will be accessible only to a limited number of user accounts).

Budget Control

It is crucial to set a budget and budget alerts for every account in the cloud in the early stages of working with a cloud environment. This is important in order to avoid scenarios in which high resource consumption happens due to human error, such as purchasing or consuming expensive resources, or due to Denial of Wallet scenarios, where external attackers breach an organization’s cloud account and deploy servers for Bitcoin mining.

Common examples of budget control management for various cloud providers:

- AWS Consolidated Billing – Configure a central account for all the AWS accounts in the organization, in order to collect billing data in one place (which will be accessible only to a limited number of user accounts).

- GCP Cloud Billing Account – Central repository for storing all billing data from all GCP projects.

- Azure Cost Management – An interface for configuring budget and budget alerts for all Azure subscriptions in the organization. It is possible to group multiple Azure subscriptions into Management Groups in order to centrally control budgets for all subscriptions.

- Budget on Oracle Cloud Infrastructure – An interface for configuring budget and budget alerts for all compartments.
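The idea behind budget alerts can be shown with a small calculation: compare spend to date with the budget and report every threshold that has been crossed. The function name and the 50% / 80% / 100% thresholds are assumptions for this sketch; each provider's budgeting service has its own settings.

```python
def budget_alerts(budget: float, spend_to_date: float,
                  thresholds: tuple[float, ...] = (0.5, 0.8, 1.0)) -> list[str]:
    """Return one alert message for every threshold the current spend has crossed."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    used = spend_to_date / budget
    return [f"spend reached {round(t * 100)}% of budget" for t in thresholds if used >= t]
```

With a budget of 1000 and a spend of 850, this sketch reports the 50% and 80% thresholds, which would leave time to react before the full budget is consumed.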

Secure access to cloud environments

In order to avoid direct inbound access from the Internet to resources in cloud environments (virtual servers, databases, storage, etc.), it is highly recommended to deploy a bastion host, which will be accessible from the Internet (over SSH or RDP) and will allow access to and management of resources inside the cloud environment.

Common guidelines for deploying a bastion host:
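One way to verify this setup is to scan the inbound rules and flag any SSH or RDP rule that is open to the whole Internet on a resource other than the bastion host. The `InboundRule` structure below is a hypothetical, provider-neutral stand-in for real security group or firewall rules.

```python
import ipaddress
from dataclasses import dataclass

ADMIN_PORTS = {22: "SSH", 3389: "RDP"}


@dataclass
class InboundRule:
    """Hypothetical, provider-neutral inbound firewall rule."""
    target: str
    port: int
    source_cidr: str


def exposed_admin_rules(rules: list[InboundRule], bastion: str) -> list[str]:
    """Flag SSH/RDP rules open to the whole Internet on anything except the bastion host."""
    findings = []
    for rule in rules:
        # A prefix length of 0 (for example 0.0.0.0/0) means "any source address".
        open_to_internet = ipaddress.ip_network(rule.source_cidr).prefixlen == 0
        if rule.port in ADMIN_PORTS and open_to_internet and rule.target != bastion:
            findings.append(f"{rule.target}: {ADMIN_PORTS[rule.port]} open to the Internet")
    return findings
```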

- Linux Bastion Hosts on AWS

- Create an Azure Bastion host using the portal

- Securely connecting to VM instances on GCP

- Setting Up the Basic Infrastructure for a Cloud Environment, based on Oracle Cloud

As we expand the usage of cloud environments, we can consider deploying a VPN tunnel from the corporate network (site-to-site VPN) or allowing client VPN access from the Internet to the cloud environment (such as AWS Client VPN endpoint, Azure Point-to-Site VPN, Oracle Cloud SSL VPN).

Managing compute resources (Virtual Machines and Containers)

When choosing to deploy virtual machines in a cloud environment, it is highly recommended to follow these guidelines:

- Choose an existing image from a pre-defined list in the cloud providers’ marketplace (operating system flavor, operating system build, and sometimes an image that includes additional software inside the base image).

- Configure the image according to organizational or application demands.

- Update all software versions inside the image.

- Store an up-to-date version of the image (“Golden Image”) inside the central image repository in the cloud environment (for reuse).

- In case the information inside the virtual machines is critical, consider using managed backup services (such as AWS Backup or Azure Backup).

- When deploying Windows servers, it is crucial to set a complex password for the local Administrator account and, when possible, join the Windows machine to the corporate domain.

- When deploying Linux servers, it is crucial to use SSH Key authentication and store the private key(s) in a secure location.

- Whenever possible, encrypt data at rest for all block volumes (the server’s hard drives / volumes).

- It is highly recommended to connect the servers to a managed vulnerability assessment service, in order to detect software vulnerabilities (services such as Amazon Inspector or Azure Security Center).

- It is highly recommended to connect the servers to a managed patch management service in order to ease the work of patch management (services such as AWS Systems Manager Patch Manager, Azure Automation Update Management or Google OS Patch Management).

When choosing to deploy containers in the cloud environment, it is highly recommended to follow these guidelines:

- Use a container image from a well-known container repository.

- Update all binaries and all dependencies inside the Container image.

- Store all Container images inside a managed container repository inside the cloud environment (services such as Amazon ECR, Azure Container Registry, GCP Container Registry, Oracle Cloud Container Registry, etc.)

- Avoid using the root account inside containers.

- Avoid storing data (such as session IDs) inside the Container – make sure the container is stateless.

- It is highly recommended to connect the CI/CD process and the container update process to a managed vulnerability assessment service, in order to detect software vulnerabilities (services such as Amazon ECR Image scanning, Azure Container Registry, GCP Container Analysis, etc.)

Storing sensitive information

It is highly recommended to avoid storing sensitive information, such as credentials, encryption keys, secrets, API keys, etc., in clear text inside virtual machines, containers, text files or on the local desktop.

Sensitive information should be stored inside managed vault services such as:

- AWS KMS or AWS Secrets Manager

- Azure Key Vault

- Google Cloud KMS or Google Secret Manager

- Oracle Cloud Infrastructure Key Management

- HashiCorp Vault
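Before moving secrets into a vault, it helps to find where they currently sit in clear text. The following is a minimal sketch of such a scan; the three patterns are illustrative only (dedicated secret scanners use far larger rule sets), and the function name is hypothetical.

```python
import re

# Illustrative patterns only; they will miss many kinds of secrets.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hard-coded password": re.compile(r"(?i)\bpassword\s*[=:]\s*\S+"),
}


def find_clear_text_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, finding) pairs for lines that look like clear-text secrets."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((number, label))
    return findings
```

Anything such a scan reports is a candidate for one of the managed vault services listed above, with the file keeping only a reference to the secret rather than the secret itself.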

Object Storage

When using object storage, it is recommended to follow these guidelines:

- Avoid allowing public access to services such as Amazon S3, Azure Blob Storage, Google Cloud Storage, Oracle Cloud Object Storage, etc.

- Enable access auditing on object storage and store the access logs in a central account in the cloud environment (which will be accessible only to a limited number of user accounts).

- It is highly recommended to encrypt all data at rest inside object storage and, when there is a business or regulatory requirement, to encrypt the data using customer-managed keys.

- It is highly recommended to enforce HTTPS/TLS for access to object storage (by users, computers, and applications).

- Avoid creating object storage bucket names that contain sensitive information, since bucket names are unique and are published in DNS worldwide.
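The guidelines above translate naturally into an automated check. The sketch below uses a hypothetical, provider-neutral `BucketConfig` record; in practice its fields would be filled from the provider's own API or configuration export, and the list of sensitive words is only an example.

```python
from dataclasses import dataclass


@dataclass
class BucketConfig:
    """Hypothetical, provider-neutral view of one bucket's settings."""
    name: str
    public_access: bool
    access_logging: bool
    encrypted_at_rest: bool
    https_only: bool


# Example words that should not appear in a bucket name; extend for your organization.
SENSITIVE_WORDS = ("password", "secret", "payroll", "customer")


def bucket_findings(bucket: BucketConfig) -> list[str]:
    """Check one bucket against the guidelines listed above."""
    findings = []
    if bucket.public_access:
        findings.append("public access is allowed")
    if not bucket.access_logging:
        findings.append("access logging is disabled")
    if not bucket.encrypted_at_rest:
        findings.append("data is not encrypted at rest")
    if not bucket.https_only:
        findings.append("HTTPS/TLS is not enforced")
    if any(word in bucket.name.lower() for word in SENSITIVE_WORDS):
        findings.append("bucket name reveals sensitive information")
    return findings
```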

Networking

- Make sure access to all resources is protected by access lists (such as AWS Security Groups, Azure Network Security Groups, GCP Firewall Rules, Oracle Cloud Network Security Groups, etc.)

- Avoid allowing inbound access to cloud environments using protocols such as SSH or RDP (in case remote access is needed, use Bastion host or VPN connections).

- As much as possible, it is recommended to avoid outbound traffic from the cloud environment to the Internet. If needed, use a NAT Gateway (such as Amazon NAT Gateway, Azure NAT Gateway, GCP Cloud NAT, Oracle Cloud NAT Gateway, etc.)

- As much as possible, use DNS names to access resources instead of static IPs.

- When designing cloud environments and the subnets inside them, avoid overlapping IP ranges between subnets, in order to allow peering between cloud environments.
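Overlapping IP ranges are easy to detect before anything is deployed. The sketch below uses Python's standard `ipaddress` module to compare every pair of planned ranges; the function name and the environment names in the example are assumptions for illustration.

```python
import ipaddress
from itertools import combinations


def overlapping_ranges(cidrs: dict[str, str]) -> list[tuple[str, str]]:
    """Return pairs of named networks whose IP ranges overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (a, b)
        for (a, net_a), (b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]
```

For a plan of `prod` at `10.0.0.0/16`, `dev` at `10.1.0.0/16`, and `test` at `10.1.128.0/17`, the check reports the `dev` and `test` pair, because the test range sits inside the development range and the two could not be peered.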

Advanced use of cloud environments

- Prefer to use managed services instead of manually managing virtual machines (services such as Amazon RDS, Azure SQL Database, Google Cloud SQL, etc.)

It allows consumption of services, rather than maintaining servers, operating systems, updates/patches, backup and availability, assuming managed services in cluster or replica mode are chosen.

- Use Infrastructure as Code (IaC) in order to ease environment deployments, reduce human error and standardize deployment across multiple environments (Prod, Dev, Test).

Common Infrastructure as Code alternatives:

Summary

To sum up:

Plan. Know what you need. Think scale.

If you use the best practices outlined here, taking off to the cloud for the first time will be an easier, safer and smoother ride than you might expect.

Additional references

Threat Modeling for Data Protection

When evaluating the security of an application and its data model, ask the following questions:

- What is the sensitivity of the data?

- What are the regulatory, compliance, or privacy requirements for the data?

- What is the attack vector that a data owner is hoping to mitigate?

- What is the overall security posture of the environment? Is it a hostile environment or a relatively trusted one?

When threat modeling, consider the following common scenarios:

Data at rest (“DAR”)

Data at rest, in information technology, means inactive data that is stored physically in any digital form (e.g. databases/data warehouses, spreadsheets, archives, tapes, off-site backups, mobile devices, etc.).

- Transparent Data Encryption (often abbreviated to TDE) is a technology employed by Microsoft SQL Server, IBM DB2 and Oracle to encrypt the “table-space” files in a database. TDE offers encryption at the file level. It solves the problem of protecting data at rest by encrypting databases both on the hard drive and on backup media. It does not protect data in motion (DIM) or data in use (DIU).

- Mount-point encryption: This is another form of TDE, available for database systems which do not natively support table-space encryption. Several vendors offer mount-point encryption for Linux/Unix/Microsoft Windows file system mount-points. When a vendor does not support TDE, this type of encryption effectively encrypts the database table-space and stores the encryption keys separately from the file system. So, if the physical or logical storage medium is detached from the compute resource, the database table-space remains encrypted.

Data in Motion (“DIM”)

Data in motion considers the security of data that is being copied from one medium to another. Data in motion typically considers data being transmitted over a network transport. Web Applications represent common data in motion scenarios.

- Transport Layer Security (TLS, formerly SSL) is commonly used to encrypt internet protocol based network transports. TLS works by encrypting the layer 7 “application layer” payload of a given network stream using symmetric encryption.

- Secure Shell/Secure File Transfer (SSH, SCP, SFTP): SSH is a protocol used to securely log in to and access remote computers. SFTP runs over the SSH protocol (leveraging SSH security and authentication functionality) but is used for secure transfer of files. The SSH protocol utilizes public key cryptography to authenticate access to remote systems.

- Virtual Private Networks (VPNs): A virtual private network (VPN) extends a private network across a public network, and enables users to send and receive data across shared or public networks as if their computing devices were directly connected to the private network.
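As an illustration of protecting data in motion from the client side, here is a minimal sketch that builds a TLS client context with Python's standard `ssl` module and refuses protocol versions older than TLS 1.2. The helper name `strict_client_context` is an assumption made for this example.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that refuses legacy protocol versions."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Reject SSLv3, TLS 1.0 and TLS 1.1 handshakes.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


ctx = strict_client_context()
print(ctx.minimum_version.name, ctx.check_hostname, ctx.verify_mode.name)
```

The resulting context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=ctx)`.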

Data in Use (“DIU”)

Data in use happens whenever a computer application reads data from a storage medium into volatile memory.

- Full memory encryption: Encryption prevents data visibility in the event of theft, loss, or unauthorized access. It is commonly used to protect Data in Motion and Data at Rest, and is increasingly recognized as an optimal method for protecting Data in Use. There have been multiple approaches to encrypting data in use within memory. Microsoft’s Xbox has a capability to provide memory encryption. A company, PrivateCore, presently has a commercial software product, vCage, that provides attestation along with full memory encryption for x86 servers.

- RAM Enclaves: enable an enclave of protected data to be secured with encryption in RAM. Enclave data is encrypted while in RAM but available as clear text inside the CPU and CPU cache, when written to disk, when traversing networks etc. Intel Corporation has introduced the concept of “enclaves” as part of its Software Guard Extensions in technical papers published in 2013.

- 2013 papers: from Workshop on Hardware and Architectural Support for Security and Privacy 2013

- Innovative Instructions and Software Model for Isolated Execution

- Innovative Technology for CPU Based Attestation and Sealing

Where do traditional data protection techniques fall short?

TDE: Database and mount-point encryption both fall short of fully protecting data across the data’s entire lifecycle. For instance, TDE was designed to defend against theft of physical or virtual storage media only. An authorized system administrator, or an unauthorized user or process, can gain access to sensitive data either by running a legitimate query or by scraping RAM. TDE does not provide granular access control to data at rest once the data has been mounted.

TLS/SCP/SFTP/VPN, etc.: TCP/IP transport layer encryption also falls short of protecting data across the entire data lifecycle. For example, TLS does not protect data at rest or in use. Quite often TLS is only enabled on Internet-facing application load balancers. Often, TLS calls to web applications travel in plaintext on the datacenter or cloud side of the application load balancer.

DIU: Full memory encryption for data in use also falls short of protecting data across the entire data lifecycle. DIU techniques are cutting edge and not generally available. Commodity compute architecture has just begun to support memory encryption. With DIU memory encryption, data is only encrypted while in memory. Data is in plaintext while in the CPU and cache, when written to disk, and when traversing network transports.

Complementary or Alternative Approach: Tokenization

We need an alternative approach that addresses all the exposure gaps 100% of the time. In information security, we really want a defense in depth strategy. That is, we want layers of controls so that if a single layer fails or is compromised, another layer can compensate for the failure.

Tokenization and format preserving encryption (FPE) are unique in that they protect sensitive data throughout the data lifecycle and across a data flow. Tokenization and FPE are portable and remain in force across mixed technology stacks. They do not share the same exposures as traditional data protection techniques.

How does this work? Fields of sensitive data are cryptographically transformed at the system of origin, that is during intake. A cryptographic transform of a sensitive field is applied, producing a non-sensitive token representation of the original data.

Tokenization, when applied to data security, is the process of substituting a sensitive data element with a non-sensitive equivalent, referred to as a token, that has no extrinsic or exploitable meaning or value. The token is a reference (i.e. identifier) that maps back to the sensitive data through a tokenization system.

Format preserving encryption takes this a step further and allows the data element to maintain its original format and data type. For instance, a 16-digit credit card number can be protected and the result is another 16-digit value. The value here is to reduce the overall impact of code changes to applications and databases while reducing the time to market of implementing end to end data protection.
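To make the idea concrete, here is a toy sketch of vault-based tokenization in which the token keeps the length and digit-only format of the original value. It is an illustration only: the `TokenVault` class and the `caller_is_trusted` flag are assumptions made for this example, the mapping lives in memory, and the random substitution shown here is not a format preserving encryption algorithm.

```python
import secrets


class TokenVault:
    """Toy in-memory tokenization system (illustration only)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, card_number: str) -> str:
        """Swap a digit string for a random token of the same length."""
        if not card_number.isdigit():
            raise ValueError("expected digits only")
        if card_number in self._value_to_token:
            return self._value_to_token[card_number]
        while True:
            token = "".join(secrets.choice("0123456789") for _ in card_number)
            # Retry on the rare collision with an existing token or the input.
            if token not in self._token_to_value and token != card_number:
                break
        self._token_to_value[token] = card_number
        self._value_to_token[card_number] = token
        return token

    def detokenize(self, token: str, caller_is_trusted: bool) -> str:
        """Only explicitly trusted callers may recover the original value."""
        if not caller_is_trusted:
            raise PermissionError("caller may not detokenize")
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111111111111111")
print(len(token), token.isdigit())  # 16 True
```

Because the token has the same length and data type as the input, downstream applications and database columns can store it without schema changes, which is the benefit described above.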

In Closing

Use of tokenization or format preserving encryption to replace live data in systems results in minimized exposure of sensitive data to those applications, stores, people and processes. Replacing sensitive data results in reduced risk of compromise or accidental exposure and unauthorized access to sensitive data.

Applications can operate using tokens instead of live data, with the exception of a small number of trusted applications explicitly permitted to detokenize when strictly necessary for an approved business purpose. Moreover, in several cases, removal of sensitive data from an organization’s applications, databases and business processes will result in reduced compliance and audit scope, resulting in significantly less complex and shorter audits.

This article was originally published in Very Good Security.

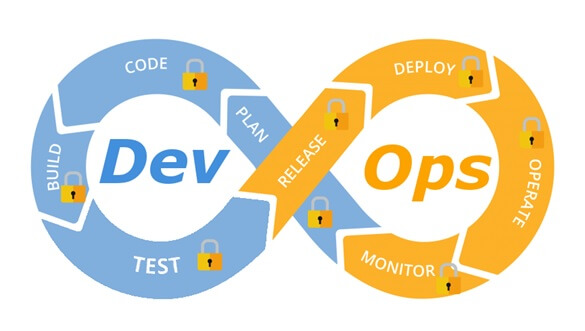

Integrate security aspects in a DevOps process

A diagram of a common DevOps lifecycle:

The DevOps world is meant to provide a complementary solution for both rapid development (such as Agile) and cloud environments, where IT personnel become an integral part of the development process. In the DevOps world, managing a large number of development environments manually is practically infeasible. Monitoring mixed environments becomes a complex task, and deployment of a large number of different builds becomes extremely fast and sensitive to changes.

The idea behind any DevOps solution is to provide a solution for deploying an entire CI/CD process, which means supporting constant changes and immediate deployment of builds/versions.

For the security department, this kind of process is at first look a nightmare: dozens of builds, partial tests, no human control for any change, etc.

For this reason, it is crucial for the security department to embrace the DevOps attitude, which means embedding security in every part of the development lifecycle, software deployment or environment change.

It is important to understand that there are no fixed stages as there were in the waterfall development lifecycle, and most of the stages run in parallel. In the CI/CD world everything changes quickly and components can be part of different stages, so it is important to align the processes, methods and tools across all development and DevOps teams.

In order to better understand how to embed security into the DevOps lifecycle, we need to review the different stages in the development lifecycle:

Planning phase

This stage in the development process is about gathering business requirements.

At this stage, it is important to embed the following aspects:

- Gather information security requirements (such as authentication, authorization, auditing, encryption, etc.)

- Conduct threat modeling in order to detect possible code weaknesses

- Training / awareness programs for developers and DevOps personnel about secure coding

Creation / Code writing phase

This stage in the development process is about the code writing itself.

At this stage, it is important to embed the following aspects:

- Connect the development environments (IDE) to a static code analysis product

- Have the solution architecture reviewed by a security expert or a security champion on their behalf

- Review open source components embedded inside the code

Verification / Testing phase

This stage in the development process is about testing, conducted mostly by QA personnel.

At this stage, it is important to embed the following aspects:

- Run SAST (Static Application Security Testing) tools on the code itself (pre-compiled stage)

- Run DAST (Dynamic Application Security Testing) tools against the running application (post-compile stage)

- Run IAST (Interactive Application Security Testing) tools against the application itself

- Run SCA (Software Composition Analysis) tools in order to detect known vulnerabilities in open source components or 3rd party components
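The core idea behind an SCA check can be sketched in a few lines: compare each installed component version against the version in which a known vulnerability was fixed. This is a minimal sketch under stated assumptions: the component names, versions and the `advisories` mapping are all made up, and only plain numeric versions such as `2.10.1` are handled.

```python
def parse_version(text):
    """Turn '2.10.1' into (2, 10, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def vulnerable_components(installed, advisories):
    """List components whose installed version is older than the fixed one."""
    flagged = []
    for name, version in sorted(installed.items()):
        fixed_in = advisories.get(name)
        if fixed_in and parse_version(version) < parse_version(fixed_in):
            flagged.append(f"{name} {version} (fixed in {fixed_in})")
    return flagged


# Hypothetical data: component names and versions are made up.
installed = {"examplelib": "2.9.0", "otherlib": "1.4.2", "safelib": "3.0.0"}
advisories = {"examplelib": "2.10.1", "otherlib": "1.4.2"}

print(vulnerable_components(installed, advisories))
# ['examplelib 2.9.0 (fixed in 2.10.1)']
```

Real SCA tools also resolve transitive dependencies and pull advisories from maintained vulnerability databases, which this sketch does not attempt.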

Software packaging and pre-production phase

This stage in the development process is about packaging the developed code before the deployment/distribution phase.

At this stage, it is important to embed the following aspects:

- Run IAST (Interactive application security tools) against the application itself

- Run fuzzing tools in order to detect buffer overflow vulnerabilities. This can be done automatically as part of the build environment by embedding security tests for functional testing / negative testing

- Perform code signing to detect future changes (such as malware)

Software packaging release phase

This stage is between the packaging and deployment stages.

At this stage, it is important to embed the following aspects:

- Compare code signature with the original signature from the software packaging stage

- Conduct integrity checks to the software package

- Deploy the software package to a development environment and conduct automated or stress tests

- Deploy the software package using a blue/green methodology for software quality and further security quality tests
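The integrity check in this phase can be sketched with Python's standard `hashlib` and `hmac` modules: record a digest at packaging time and compare it before release. Note the assumption: a plain SHA-256 digest only detects changes, whereas real code signing additionally binds the digest to a signer's key. The function names and the sample bytes are illustrative.

```python
import hashlib
import hmac


def sha256_digest(package_bytes: bytes) -> str:
    """Return the hex SHA-256 digest of a package's contents."""
    return hashlib.sha256(package_bytes).hexdigest()


def package_is_unchanged(package_bytes: bytes, recorded_digest: str) -> bool:
    """Compare the current digest with the one recorded at packaging time."""
    # compare_digest avoids leaking where the two strings first differ.
    return hmac.compare_digest(sha256_digest(package_bytes), recorded_digest)


built = b"release-1.0 contents"   # stand-in for the packaged artifact
recorded = sha256_digest(built)   # stored at the packaging stage

print(package_is_unchanged(built, recorded))                 # True
print(package_is_unchanged(built + b" tampered", recorded))  # False
```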

Software deployment phase

At this stage, the software package (such as mobile application code, docker container, etc.) is moving to the deployment stage.

At this stage, it is important to embed the following aspects:

- Review permissions on destination folder (in case of code deployment for web servers)

- Review permissions for Docker registry

- Review permissions for further services in a cloud environment (such as storage, database, application, etc.) and fine-tune the service role for running the code

Configure / operate / Tune phase

At this stage, the software is in production and undergoes modifications (according to business requirements) and ongoing maintenance.

At this stage, it is important to embed the following aspects:

- Patch management processes or configuration management processes using tools such as Chef, Ansible, etc.

- Scanning process for detecting vulnerabilities using vulnerability assessment tools

- Deleting vulnerable environments and re-deploying them as up-to-date environments (if possible)

On-going monitoring phase

At this stage, constant application monitoring is being conducted by the infrastructure or monitoring teams.

At this stage, it is important to embed the following aspects:

- Run RASP (Runtime Application Self-Protection) tools

- Implement defense at the application layer using WAF (Web application firewall) products

- Implement products for defending the application from Botnet attacks

- Implement products for defending the application from DoS / DDoS attacks

- Conduct penetration testing

- Implement a monitoring solution that uses automated rules, such as automated recovery of sensitive changes (tools such as GuardRails)
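An automated rule of the kind described in the last item boils down to comparing the current configuration with an approved baseline and restoring any setting that drifted. The following is a minimal sketch of that logic; the setting names are hypothetical and it does not reflect how any particular product implements it.

```python
def detect_drift(baseline: dict, current: dict) -> dict:
    """Map each drifted setting to its (expected, actual) values."""
    drift = {}
    for key, expected in baseline.items():
        actual = current.get(key)
        if actual != expected:
            drift[key] = (expected, actual)
    return drift


def remediate(baseline: dict, current: dict) -> dict:
    """Return a copy of the current settings with baseline values restored."""
    restored = dict(current)
    for key, (expected, _actual) in detect_drift(baseline, current).items():
        restored[key] = expected
    return restored


# Hypothetical setting names, chosen only for illustration.
baseline = {"ssh_open_to_internet": False, "bucket_public": False}
current = {"ssh_open_to_internet": True, "bucket_public": False, "owner": "dev"}

print(detect_drift(baseline, current))  # {'ssh_open_to_internet': (False, True)}
print(remediate(baseline, current))
```

Settings that are not part of the baseline (such as `owner` here) are left untouched, so the rule only reverts changes it was explicitly told to guard.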

Security recommendations for developments based on CI/CD / DevOps process

- It is highly recommended to perform on-going training for the development and DevOps teams on security aspects and secure development

- It is highly recommended to nominate a security champion among the development and DevOps teams in order to allow them to conduct threat modeling at early stages of the development lifecycle and to embed security aspects as soon as possible in the development lifecycle

- Use automated tools for deploying environments in a simple and standard form.

Tools such as Puppet require root privileges for the folders they have access to. In order to lower the risk, it is recommended to enable folder access auditing.

- Avoid storing passwords and access keys hard-coded inside scripts and code.

- It is highly recommended to store credentials (SSH keys, privileged credentials, API keys, etc.) in a vault (solutions such as HashiCorp Vault or CyberArk).

- It is highly recommended to limit privileged access based on role (role-based access control), following the principle of least privilege.

- It is recommended to perform network separation between production environment and Dev/Test environments.

- Restrict all developer teams’ access to production environments, and allow only the DevOps team access to production environments.

- Enable auditing and access control for all development environments and identify anomalous access attempts (such as a developer’s attempt to access a production environment)

- Make sure sensitive data (such as customer data, credentials, etc.) doesn’t pass in clear text in transit. In case there is a business requirement for passing sensitive data in transit, make sure the data is passed over encrypted protocols (such as SSH v2, TLS 1.2, etc.), while using strong cipher suites.

- It is recommended to follow OWASP organization recommendations (such as OWASP Top10, OWASP ASVS, etc.)

- When using Containers, it is recommended to use well-known and signed repositories.

- When using Containers, it is recommended not to blindly rely on open source libraries inside the containers, and to conduct scanning to detect vulnerable versions (including dependencies) during the build creation process.

- When using Containers, it is recommended to perform hardening using guidelines such as CIS Docker Benchmark or CIS Kubernetes Benchmark.

- It is recommended to deploy automated tools for on-going tasks, starting from build deployments, code review for detecting vulnerabilities in the code and open source code, and patch management processes that will be embedded inside the development and build process.

- It is recommended to perform scanning to detect security weaknesses, using vulnerability management tools during the entire system lifetime.

- It is recommended to deploy configuration management tools, in order to detect and automatically remediate configuration anomalies from the original configuration.
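The recommendation to avoid hard-coded passwords and access keys can be backed by a simple scan during the build. Below is a minimal sketch using Python's standard `re` module; the two patterns and the `sample` text are illustrative assumptions, and real secret scanners ship far larger rule sets.

```python
import re

# Illustrative patterns only; real scanners ship far larger rule sets.
PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hard-coded password": re.compile(
        r"password\s*=\s*['\"][^'\"]+['\"]", re.IGNORECASE
    ),
}


def scan_source(text: str) -> list[tuple[int, str]]:
    """Return (line number, rule name) for every suspicious line."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((number, rule))
    return hits


# Made-up script content; the key below is not a real credential.
sample = '''db_host = "db.internal"
password = "hunter2"
key_id = "AKIAABCDEFGHIJKLMNOP"
password = os.environ["DB_PASSWORD"]
'''

print(scan_source(sample))
# [(2, 'hard-coded password'), (3, 'AWS access key ID')]
```

The last line of the sample is not flagged because the value is read from the environment at runtime rather than written into the script.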

Additional reading sources:

- 20 Ways to Make Application Security Move at the Speed of DevOps

- The DevOps Security Checklist

- Making AppSec Testing Work in CI/CD

- Value driven threat modeling

- Automated Security Testing

- Security at the Speed of DevOps

- DevOps Security Best Practices

- The integration of DevOps and security

- When DevOps met Security - DevSecOps in a nutshell

- Grappling with DevOps Security

- Minimizing Risk and Improving Security in DevOps

- Security In A DevOps World

- Application Security in Devops

- Five Security Defenses Every Containerized Application Needs

- 5 ways to find and fix open source vulnerabilities

This article was written by Eyal Estrin, cloud security architect and Vitaly Unic, application security architect.

How to Use E-mail PGP Encryption

Every day, internet users send more than 200 billion emails, and this statistic makes anonymous e-mail a number one feature to use in day-to-day communication.

Below is a step-by-step guide to setting up PGP encryption and communicating securely online. The tutorial was developed using the Privatoria E-mail service, though one can use any e-mail client supporting this feature. The device used in this walkthrough is a MacBook Air running OS X Yosemite. Note that you can set up PGP on any device, regardless of OS.

Step 1

Please log in to your Control Panel and go to Services / Anonymous E-Mail / Go to your inbox. You will be redirected to your mail inbox.

Step 2

Go to the Settings section in the top right corner of your page. Click Open PGP – Enable OpenPGP – Save.

To start sending encrypted emails, you first need to generate a key and send it to the recipient.

Click on Generate new key. It is recommended to set your own password at this step and use it each time you want to send or receive encrypted emails. It provides additional security, meaning that your PGP email cannot be decrypted by anyone else and your signature is really yours! Please note that this password cannot be reset, but you can create a new one in case you forget it 🙂

Click Generate and wait for about half a minute until the key is generated. Once ready, you will see the following screen.

To send an encrypted email you will need to use Public Key. Click View and then choose Send.

Step 3

You will be redirected to the email interface, ready to send your email. Send this email to the recipient first and wait until they send you their key. Once it is received, click Import. Now that you have the recipient’s key, you can proceed with sending the actual end-to-end encrypted email. Type in the recipient’s email, and add the subject and body of your email.

To finalize the encryption click on PGP Sign/Encrypt and proceed further. You will receive a message “OpenPGP supports plain text only. Click OK to remove all the formatting and continue.” Click OK.

NB! The private key is your own key: it decrypts the messages sent to you and signs the messages you send, and it is generated for you only. Data sent to you is encrypted with your public key.

The email recipient should use an email client that supports PGP encryption.

9 Essential System Security Interview Questions

1. What is a pentest?

“Pentest” is short for “penetration test”, and involves having a trusted security expert attack a system for the purpose of discovering, and repairing, security vulnerabilities before malicious attackers can exploit them. This is a critical procedure for securing a system, as the alternative method for discovering vulnerabilities is to wait for unknown agents to exploit them. By this time it is, of course, too late to do anything about them.

In order to keep a system secure, it is advisable to conduct a pentest on a regular basis, especially when new technology is added to the stack, or vulnerabilities are exposed in your current stack.

2. What is social engineering?

“Social engineering” refers to the use of humans as an attack vector to compromise a system. It involves fooling or otherwise manipulating human personnel into revealing information or performing actions on the attacker’s behalf. Social engineering is known to be a very effective attack strategy, since even the strongest security system can be compromised by a single poor decision. In some cases, highly secure systems that cannot be penetrated by computer or cryptographic means, can be compromised by simply calling a member of the target organization on the phone and impersonating a colleague or IT professional.

Common social engineering techniques include phishing, clickjacking, and baiting, although several other tricks are at an attacker’s disposal. Baiting with foreign USB drives was famously used to introduce the Stuxnet worm into Iran’s uranium enrichment facilities, damaging the nation’s ability to produce nuclear material.

For more information, a good read is Christopher Hadnagy’s book Social Engineering: The Art of Human Hacking.

3. You find PHP queries overtly in the URL, such as /index.php?page=userID. What would you then be looking to test?

This is an ideal situation for injection and querying. If we know that the server is using an SQL database with a PHP controller, it becomes quite easy. We would be looking to test how the server reacts to multiple different types of requests, and what it throws back, looking for anomalies and errors.

One example could be code injection. If the server is not using authentication and evaluating each user, one could simply try /index.php?arg=1;system('id') and see if the host returns unintended data.

4. You find yourself in an airport in the depths of a foreign superpower. You’re out of mobile broadband and don’t trust the Wi-Fi. What do you do? Further, what are the potential threats from open Wi-Fi networks?

Ideally you want all of your data to pass through an encrypted connection. This would usually entail tunneling via SSH into whatever outside service you need, over a virtual private network (VPN). Otherwise, you’re vulnerable to all manner of attacks, from man-in-the-middle to captive portal exploitation, and so on.

5. What does it mean for a machine to have an “air gap”? Why are air gapped machines important?

An air gapped machine is simply one that cannot connect to any outside agents, from the highest level (the internet) to the lowest (an intranet or even Bluetooth).

Air gapped machines are isolated from other computers, and are important for storing sensitive data or carrying out critical tasks that should be immune from outside interference. For example, a nuclear power plant should be operated from computers that are behind a full air gap. For the most part, real world air gapped computers are usually connected to some form of intranet in order to make data transfer and process execution easier. However, every connection increases the risk that outside actors will be able to penetrate the system.

6. You’re tasked with setting up an email encryption system for certain employees of a company. What’s the first thing you should be doing to set them up? How would you distribute the keys?

The first task is to do a full clean and make sure that the employees’ machines aren’t compromised in any way. This would usually involve something along the lines of a selective backup. One would take only the very necessary files from one computer and copy them to a clean replica of the new host. We give the replica an internet connection and watch for any suspicious outgoing or incoming activity. Then one would perform a full secure erase on the employee’s original machine, to delete everything right down to the last data tick, before finally restoring the backed up files.

The keys should then be given out by transferring them over wire through a machine or device with no other connections, importing any necessary .p7s email certificate files into a trusted email client, then securely deleting any trace of the certificate on the originating computer.

The first step, cleaning the computers, may seem long and laborious. Theoretically, if you are 100% certain that the machine is in no way affected by any malicious scripts, then of course there is no need for such a process. However in most cases, you’ll never know this for sure, and if any machine has been backdoored in any kind of way, this will usually mean that setting up secure email will be done in vain.

7. You manage to capture email packets from a sender that are encrypted through Pretty Good Privacy (PGP). What are the most viable options to circumvent this?

First, one should consider whether to even attempt circumventing the encryption directly. Decryption is nearly impossible here unless you already happen to have the private key. Without this, your computer will be spending multiple lifetimes trying to break a 2048-bit key. It’s likely far easier to simply compromise an end node (i.e. the sender or receiver). This could involve phishing, exploiting the sending host to try and uncover the private key, or compromising the receiver to be able to view the emails as plain text.

8. What makes a script fully undetectable (FUD) to antivirus software? How would you go about writing a FUD script?

A script is FUD to an antivirus when it can infect a target machine and operate without being noticed on that machine by that AV. This usually entails a script that is simple, small, and precise.

To know how to write a FUD script, one must understand what the targeted antivirus is actually looking for. If the script contains events such as Hook_Keyboard(), File_Delete(), or File_Copy(), it’s very likely it will be picked up by antivirus scanners, so these events are not used. Further, FUD scripts will often mask function names with common names used in the industry, rather than naming them things like fToPwn1337(). A talented attacker might even break up his or her files into smaller chunks, and then hex edit each individual file, thereby making it even more unlikely to be detected.

As antivirus software becomes more and more sophisticated, attackers become more sophisticated in response. Antivirus software such as McAfee is much harder to fool now than it was 10 years ago. However, there are talented hackers everywhere who are more than capable of writing fully undetectable scripts, and who will continue to do so. Virus protection is very much a cat and mouse game.

9. What is a “Man-in-the-Middle” attack?

A man-in-the-middle attack is one in which the attacker secretly relays and possibly alters the communication between two parties who believe they are directly communicating with each other. One example is active eavesdropping, in which the attacker makes independent connections with the victims and relays messages between them to make them believe they are talking directly to each other over a private connection, when in fact the entire conversation is controlled by the attacker, who even has the ability to modify the content of each message. Often abbreviated to MITM, MitM, or MITMA, and sometimes referred to as a session hijacking attack, it has a strong chance of success if the attacker can impersonate each party to the satisfaction of the other. MITM attacks pose a serious threat to online security because they give the attacker the ability to capture and manipulate sensitive information in real-time while posing as a trusted party during transactions, conversations, and the transfer of data. This is straightforward in many circumstances; for example, an attacker within reception range of an unencrypted WiFi access point, can insert himself as a man-in-the-middle.

This article is from Toptal.