Navigating Brownfield Environments in AWS: Steps for Successful Cloud Use

In an ideal world, we would have the luxury of building greenfield cloud environments. However, this is not always the situation we, as cloud architects, have to deal with.

Greenfield environments allow us to design our cloud environment following industry (or cloud vendor) best practices: setting up guardrails, selecting an architecture that meets the business requirements (think about event-driven architecture, scale, managed services, etc.), baking cost into architecture decisions, and so on.

In many cases, we inherit an existing cloud environment due to mergers and acquisitions, or we have just stepped into the position of cloud architect in a new company that already serves customers, and there is almost zero chance that the business will grant us the opportunity to fix mistakes that have already been made.

In this blog post, I will try to provide some steps for handling brownfield cloud environments, based on the AWS platform.

Step 1 – Create an AWS Organization

If you have already inherited multiple AWS accounts, the first thing you need to do is create a new AWS account (without any resources) to serve as the management account and create an AWS organization, as explained in the AWS documentation.

Once the new AWS organization is created, make sure the root user of the management account is registered with an email address from your organization’s email domain, set a strong password for it, revoke and remove all of its access keys (if there are any), and configure MFA for it.

Update the primary and alternate contact details for the AWS organization (I recommend using a mailing list instead of a single-user email address).

The next step is to design an OU structure for the AWS organization. There are various ways to structure the organization, and perhaps the most common one is by lines of business, and underneath, a similar structure by SDLC stage – i.e., Dev, Test, and Prod, as discussed in the AWS documentation.
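
The layout described above can be sketched as a small tree of OU paths. This is only an illustration: the line-of-business names (`Retail`, `Payments`) are hypothetical, and the snippet prints the paths you might create rather than calling AWS.

```python
# Hypothetical lines of business; the SDLC stages come from the text above.
LINES_OF_BUSINESS = ["Retail", "Payments"]
SDLC_STAGES = ["Dev", "Test", "Prod"]


def build_ou_paths(lines_of_business, stages):
    """Return OU paths such as 'Root/Retail/Dev', parents listed before children."""
    paths = []
    for lob in lines_of_business:
        paths.append(f"Root/{lob}")
        for stage in stages:
            paths.append(f"Root/{lob}/{stage}")
    return paths


for path in build_ou_paths(LINES_OF_BUSINESS, SDLC_STAGES):
    print(path)
```

Listing parents before children matters in practice, because a child OU can only be created once its parent exists.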

Step 2 – Handle Identity and Access management

Now that we have an AWS organization, we need to take care of identity and access management across the entire organization.

To make sure all identities authenticate against the same identity provider (such as the on-prem Microsoft Active Directory), enable AWS IAM Identity Center, as explained in the AWS documentation.

Once you have set up AWS IAM Identity Center, it is time to stop using the root user and create a dedicated administrative user in IAM Identity Center for all administrative tasks, as explained in the AWS documentation.

Step 3 – Move AWS member accounts to the AWS Organization

Assuming we have inherited multiple AWS accounts, it is now time to move the member AWS accounts into the previously created OU structure, as explained in the AWS documentation.

Once all the member accounts have been migrated, it is time to remove the root user credentials from the member accounts, as explained in the AWS documentation.

Step 4 – Manage cost

The next thing we need to consider is cost. If a workload was migrated from on-prem with a legacy data center mindset, or if a temporary or development environment designed by an inexperienced architect or engineer became a production environment over time, there is a good chance that cost was not a top priority from day one.

Even before digging into cost reduction or right-sizing, we need to have visibility into cost aspects, at least to be able to stop wasting money regularly.

Define the AWS management account as the payer account for the entire AWS organization, as explained in the AWS documentation.

Create a central S3 bucket to store the cost and usage report for the entire AWS organization, as explained in the AWS documentation.

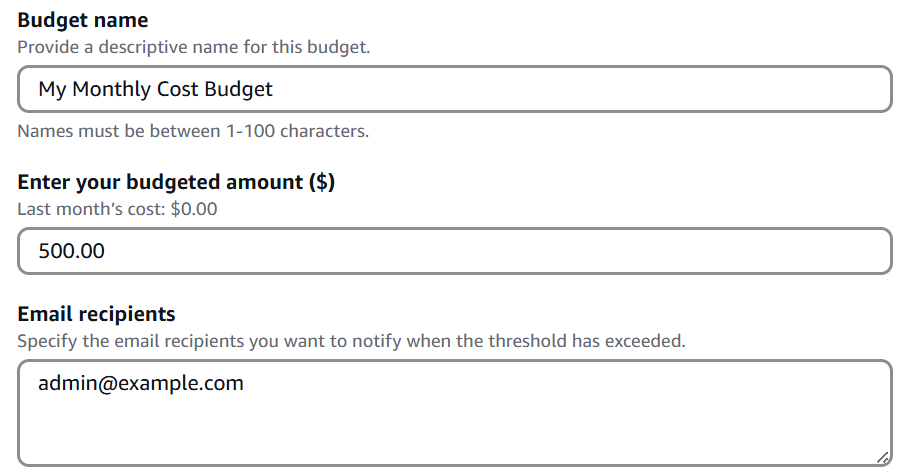

Create a budget for each AWS account, and send alerts once pre-defined thresholds of the monthly budget have been reached (for example, at 75%, 85%, and 95%).
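
To make the threshold logic concrete, here is a minimal sketch of how the 75%, 85%, and 95% alerts relate to actual spend. The budget and spend figures are hypothetical, and in a real account AWS Budgets performs this evaluation for you.

```python
ALERT_THRESHOLDS_PERCENT = (75, 85, 95)  # thresholds mentioned in the text


def crossed_thresholds(monthly_budget, actual_spend, thresholds=ALERT_THRESHOLDS_PERCENT):
    """Return the thresholds (in percent) that the actual spend has reached."""
    if monthly_budget <= 0:
        raise ValueError("monthly_budget must be positive")
    # Compare without division to avoid floating-point rounding surprises.
    return [t for t in thresholds if actual_spend * 100 >= t * monthly_budget]


# Hypothetical account: 1,000 USD monthly budget, 870 USD spent so far.
print(crossed_thresholds(1000, 870))
```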

Create a monthly report for each of the AWS accounts in the organization and review the reports regularly.

Enforce tagging policy across the AWS organization (such as tags by line of business, by application, by SDLC stage, etc.), to be able to track resources and review their cost regularly, as explained in the AWS documentation.
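
Before enforcing a tagging policy, it helps to know which existing resources would violate it. The sketch below assumes a hypothetical set of required tag keys and a hypothetical inventory (resource ID mapped to its tags). In practice the inventory would come from your own export of resource tags.

```python
REQUIRED_TAGS = {"LineOfBusiness", "Application", "Stage"}  # hypothetical tag keys


def missing_tags(resource_tags, required=REQUIRED_TAGS):
    """Return the required tag keys that are absent or empty, sorted by name."""
    return sorted(key for key in required if not resource_tags.get(key))


# Hypothetical inventory: resource ID -> tags.
inventory = {
    "i-0abc": {"LineOfBusiness": "Retail", "Application": "shop", "Stage": "Prod"},
    "vol-0def": {"Application": "shop"},
}

for resource_id, tags in inventory.items():
    gaps = missing_tags(tags)
    if gaps:
        print(f"{resource_id} is missing tags: {', '.join(gaps)}")
```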

Step 5 – Create central audit and logging

To have central observability across our AWS organization, it is recommended to create a dedicated AWS member account for logging.

Create a central S3 bucket to store CloudTrail logs from all AWS accounts in the organization, as explained in the AWS documentation.

Make sure access to the CloudTrail bucket is restricted to members of the SOC team only.
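
As an illustration, the following sketch builds a simplified bucket policy that lets the CloudTrail service write an organization trail into the central bucket. The bucket name and organization ID are hypothetical placeholders. A production policy would typically add further conditions (such as `aws:SourceArn`) and a statement for the management account prefix, so treat this as a starting point only.

```python
import json


def build_cloudtrail_bucket_policy(bucket, organization_id):
    """Bucket policy that lets CloudTrail check the bucket ACL and write log files."""
    service = {"Service": "cloudtrail.amazonaws.com"}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AWSCloudTrailAclCheck",
                "Effect": "Allow",
                "Principal": service,
                "Action": "s3:GetBucketAcl",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Sid": "AWSCloudTrailWrite",
                "Effect": "Allow",
                "Principal": service,
                "Action": "s3:PutObject",
                # Organization trails write under AWSLogs/<organization-id>/.
                "Resource": f"arn:aws:s3:::{bucket}/AWSLogs/{organization_id}/*",
                "Condition": {"StringEquals": {"s3:x-amz-acl": "bucket-owner-full-control"}},
            },
        ],
    }


# Hypothetical bucket name and organization ID.
policy = build_cloudtrail_bucket_policy("example-org-cloudtrail-logs", "o-exampleorgid")
print(json.dumps(policy, indent=2))
```

Human access for the SOC team is a separate concern: grant it through IAM roles rather than by widening this bucket policy.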

Create a central S3 bucket to store CloudWatch logs from all AWS accounts in the organization, and export CloudWatch logs to the central S3 bucket, as explained in the AWS documentation.

Step 6 – Manage security posture

Now that we are aware of the cost, we need to look at the security posture of our entire AWS organization. A common assumption is that we have public resources, or resources that are accessible by external identities (such as third-party vendors, partners, customers, etc.).

To detect access to our resources by external identities, we should run IAM Access Analyzer, generate access reports, and regularly review them (or send their output to a central SIEM system), as explained in the AWS documentation.

We should also use the IAM Access Analyzer to detect excessive privileges, as explained in the AWS documentation.

Begin assigning service control policies (SCPs) to OUs in the AWS organization, with guardrails such as denying the ability to create resources in certain regions (due to regulations) or preventing Internet access.
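
A region guardrail of this kind could look like the sketch below, which builds an SCP document denying requests outside an allowed list of regions. The allowed regions and the list of exempted global services are assumptions for illustration. Review the exemptions against the services you actually use before attaching such a policy to an OU.

```python
import json

ALLOWED_REGIONS = ["eu-central-1", "eu-west-1"]  # hypothetical regulatory boundary

# Global services are exempted so the deny does not break them (illustrative list).
GLOBAL_SERVICE_ACTIONS = [
    "iam:*", "organizations:*", "route53:*", "cloudfront:*", "support:*", "sts:*",
]


def build_region_deny_scp(allowed_regions):
    """Deny every non-exempt action when the requested region is not allowed."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideAllowedRegions",
                "Effect": "Deny",
                "NotAction": GLOBAL_SERVICE_ACTIONS,
                "Resource": "*",
                "Condition": {
                    "StringNotEquals": {"aws:RequestedRegion": list(allowed_regions)}
                },
            }
        ],
    }


print(json.dumps(build_region_deny_scp(ALLOWED_REGIONS), indent=2))
```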

Use tools such as Prowler to generate security posture reports for every AWS account in the organization, as mentioned in the AWS documentation, and focus on misconfigurations such as resources with public access.

Step 7 – Observability into cloud resources

The next step is visibility into our resources.

To have a central view of logs, metrics, and traces across the AWS organization, we can leverage the CloudWatch cross-account capability, as explained in the AWS documentation. This capability allows us to create dashboards and run queries to better understand how our applications are performing. Keep in mind that the more logs we store, the higher the cost, so for the first stage, I recommend selecting production applications (or at least the applications that produce the most value to our organization).

To have central visibility over vulnerabilities across the AWS organization (such as vulnerabilities in EC2 instances, container images in ECR, or Lambda functions), we can use Amazon Inspector to regularly scan and generate findings from all member accounts in our AWS organization, as explained in the AWS documentation. With the information from Amazon Inspector, we can later use AWS Systems Manager (SSM) to deploy missing security patches, as explained in the AWS documentation.

Summary

In this blog post, I have reviewed some of the most common recommendations I believe should grant you better control and visibility into existing brownfield AWS environments.

I am sure there are many more recommendations and best practices, and perhaps next steps such as resource rightsizing, re-architecting existing workloads, adding third-party solutions for observability and security posture, and more.

I encourage readers of this blog post to gain control over existing AWS environments, question past decisions (on topics such as cost, efficiency, sustainability, etc.), and always look for the next step in taking full advantage of the AWS environment.

About the author

Eyal Estrin is a cloud and information security architect, an AWS Community Builder, and the author of the books Cloud Security Handbook and Security for Cloud Native Applications, with more than 20 years in the IT industry.

You can connect with him on social media (https://linktr.ee/eyalestrin).

Opinions are his own and not the views of his employer.

Navigating AWS Anti-Patterns: Common Pitfalls and Strategies to Avoid Them

Before beginning the conversation about AWS anti-patterns, we should ask ourselves — what is an anti-pattern?

I have searched the web, and found the following quote:

“An antipattern is just like a pattern, except that instead of a solution, it gives something that looks superficially like a solution but isn’t one” (“Patterns and Antipatterns” by Andrew Koenig)

Key characteristics of antipatterns include:

- They are commonly used processes, structures, or patterns of action.

- They initially seem appropriate and effective.

- They ultimately produce more negative consequences than positive results.

- There exists a better, documented, and proven alternative solution.

In this blog post, I will review some of the common anti-patterns we see in AWS environments, and how to properly use AWS services.

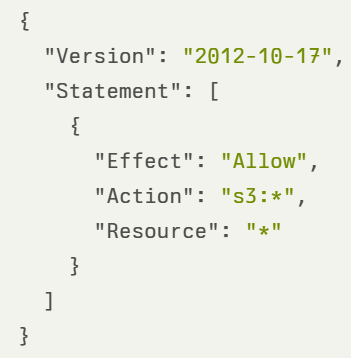

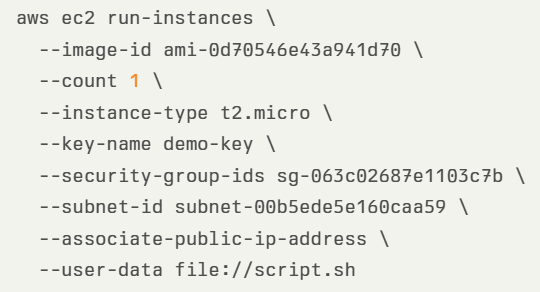

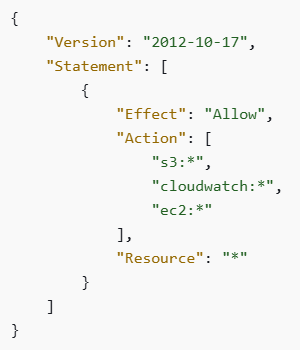

Using a permissive IAM policy

This is common in organizations that migrate from on-prem to AWS and lack an understanding of how IAM policies work, or in development environments, where “we are just trying to check if some action will work and we will fix the permissions later…” (and in many cases, we fail to go back and limit the permissions).

In the example below, we see an IAM policy allowing access to all S3 buckets, including all actions related to S3:

In the example below, we see a strict IAM policy allowing access to specific S3 buckets, with specific S3 actions:

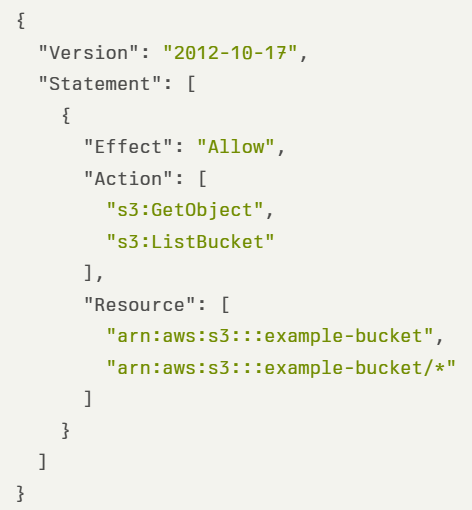

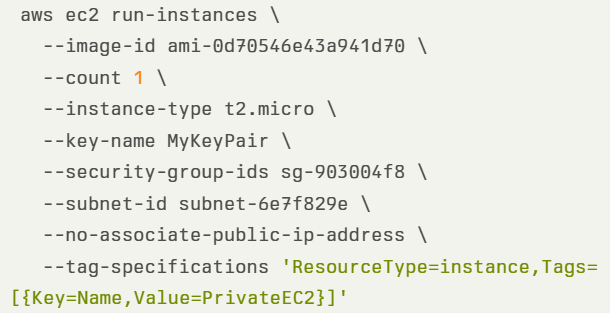
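
The contrast between the two policies can also be expressed as data. In the sketch below, the bucket name is a hypothetical placeholder, and `has_wildcard_allow` is an illustrative helper (not an AWS API) that flags statements combining a wildcard action with `Resource: "*"`.

```python
import json

BUCKET = "example-app-bucket"  # hypothetical bucket name

# Anti-pattern: every S3 action on every bucket.
PERMISSIVE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": "s3:*", "Resource": "*"}],
}


def build_least_privilege_policy(bucket):
    """Allow listing one bucket and reading/writing its objects, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


def as_list(value):
    """IAM accepts a single string or a list; normalize to a list."""
    return [value] if isinstance(value, str) else list(value)


def has_wildcard_allow(policy):
    """True if any Allow statement combines a wildcard action with Resource '*'."""
    for statement in policy["Statement"]:
        if statement.get("Effect") != "Allow":
            continue
        wildcard_action = any(a.endswith("*") for a in as_list(statement.get("Action", [])))
        wildcard_resource = "*" in as_list(statement.get("Resource", []))
        if wildcard_action and wildcard_resource:
            return True
    return False


print(json.dumps(build_least_privilege_policy(BUCKET), indent=2))
print(has_wildcard_allow(PERMISSIVE_POLICY))
print(has_wildcard_allow(build_least_privilege_policy(BUCKET)))
```

A check like this is deliberately crude. IAM Access Analyzer performs a far more thorough analysis, but even a simple lint in a CI pipeline can catch the "we will fix it later" policies before they reach production.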

Publicly accessible resources

For many years, deploying resources such as S3 buckets, EC2 instances, or RDS databases with their default settings could leave them publicly accessible, which made them prone to attacks from external or unauthorized parties.

In production environments, there is no reason to create publicly accessible resources (unless we are talking about static content accessible via a CDN). Ideally, EC2 instances will be deployed in a private subnet, behind an AWS NLB or AWS ALB, and RDS / Aurora instances will be deployed in a private subnet (behind a strict VPC security group).

In the case of EC2 or RDS, it depends on the target VPC into which you deploy the resources: the default VPC assigns a public IP, while a custom VPC allows us to decide whether we need a public subnet or not.

In the example below, we see an AWS CLI command for deploying an EC2 instance with public IP:

In the example below, we see an AWS CLI command for deploying an EC2 instance without a public IP:
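
A minimal sketch of the two variants follows. It only assembles the `aws ec2 run-instances` command line so the difference between the flags is visible. The AMI, subnet, and security group IDs are hypothetical placeholders, and the instance type is an arbitrary choice.

```python
import shlex


def build_run_instances_command(image_id, subnet_id, security_group_id, public_ip=False):
    """Assemble an 'aws ec2 run-instances' command with or without a public IP."""
    ip_flag = "--associate-public-ip-address" if public_ip else "--no-associate-public-ip-address"
    return [
        "aws", "ec2", "run-instances",
        "--image-id", image_id,
        "--instance-type", "t3.micro",
        "--subnet-id", subnet_id,
        "--security-group-ids", security_group_id,
        ip_flag,
    ]


# Hypothetical resource IDs.
args = ("ami-0123456789abcdef0", "subnet-0123456789abcdef0", "sg-0123456789abcdef0")
print(shlex.join(build_run_instances_command(*args, public_ip=True)))   # anti-pattern
print(shlex.join(build_run_instances_command(*args, public_ip=False)))  # preferred
```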

In the case of S3, since April 2023 the “S3 Block Public Access” setting is enabled by default on every newly created bucket, making it private.

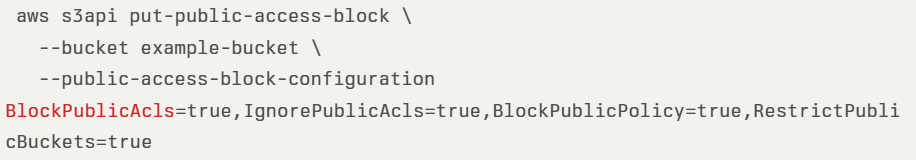

In the example below, we see an AWS CLI command for creating an S3 bucket, while enforcing private access:
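
For buckets that predate the new default, the same four settings can be applied explicitly. The sketch below shows the configuration as data and renders it in the shorthand form accepted by `aws s3api put-public-access-block --public-access-block-configuration`. The helper names are illustrative.

```python
# The four Block Public Access settings, all enabled.
PUBLIC_ACCESS_BLOCK = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}


def to_cli_shorthand(config):
    """Render the configuration as Key=value pairs for the AWS CLI."""
    return ",".join(f"{key}={str(value).lower()}" for key, value in config.items())


def blocks_all_public_access(config):
    """True only when all four settings are explicitly enabled."""
    return all(config.get(key) is True for key in PUBLIC_ACCESS_BLOCK)


print(to_cli_shorthand(PUBLIC_ACCESS_BLOCK))
print(blocks_all_public_access({"BlockPublicAcls": True}))
```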

Using permissive network access

By default, when launching a Linux EC2 instance, the only inbound rule is port 22 for SSH access, open to 0.0.0.0/0 (i.e., all IPs), which can leave Linux workloads (such as EC2 instances or Kubernetes Pods) accessible from the Internet.

As a rule of thumb — always implement the principle of least privilege, meaning, enforce minimal network access according to business needs.

In the case of EC2 instances, there are a couple of alternatives:

- Remotely connect to EC2 instances using EC2 Instance Connect or AWS Systems Manager Session Manager.

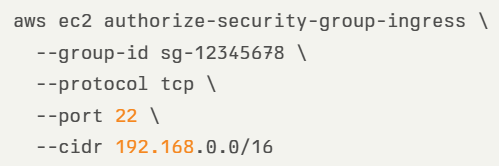

- If you insist on connecting to a Linux EC2 instance using SSH, make sure you configure a VPC security group that restricts SSH access to a specific (private) CIDR. In the example below, we see an AWS CLI command for creating a strict VPC security group:

- In the case of Kubernetes Pods, one of the alternatives is to create a network policy that restricts SSH access to a specific (private) CIDR, as we can see in the example below:

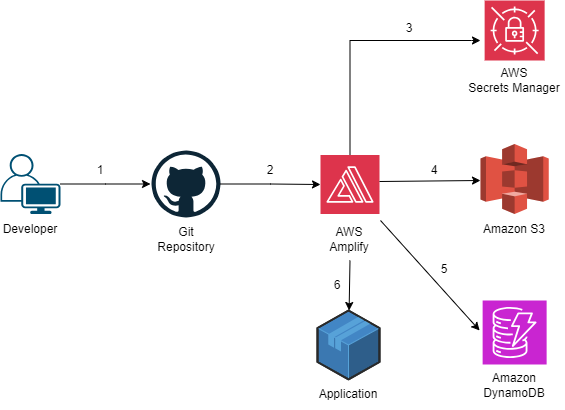
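
Both restrictions share the same idea: SSH is allowed only from a known private range. The sketch below validates the CIDR against the RFC 1918 ranges, then builds a security group ingress rule and a Kubernetes `NetworkPolicy` manifest as plain dictionaries. The CIDR, policy name, namespace, and pod label are hypothetical.

```python
import ipaddress

RFC1918_RANGES = [
    ipaddress.IPv4Network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def require_private_cidr(cidr):
    """Return the CIDR unchanged, or raise if it is not inside a private range."""
    network = ipaddress.IPv4Network(cidr)
    if not any(network.subnet_of(private) for private in RFC1918_RANGES):
        raise ValueError(f"{cidr} is not inside a private (RFC 1918) range")
    return str(network)


def build_ssh_ingress_rule(cidr):
    """Security group rule (IpPermissions entry) allowing SSH from one CIDR."""
    return {
        "IpProtocol": "tcp",
        "FromPort": 22,
        "ToPort": 22,
        "IpRanges": [{"CidrIp": require_private_cidr(cidr), "Description": "SSH from admin network"}],
    }


def build_ssh_network_policy(cidr, namespace="default"):
    """NetworkPolicy allowing only SSH ingress from one CIDR to the selected Pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "restrict-ssh", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": "bastion"}},  # hypothetical label
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"ipBlock": {"cidr": require_private_cidr(cidr)}}],
                    "ports": [{"protocol": "TCP", "port": 22}],
                }
            ],
        },
    }


print(build_ssh_ingress_rule("10.20.0.0/24"))
print(build_ssh_network_policy("10.20.0.0/24"))
```

Note that once a `NetworkPolicy` selects a Pod for ingress, any traffic not explicitly allowed by some policy is dropped, so a policy like this also blocks the Pod's other inbound ports unless further rules are added.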

Using hard-coded credentials

This is a common pattern organizations have been following for many years: storing (cleartext) static credentials in application code, configuration files, automation scripts, code repositories, and more.

Anyone with read access to any of the above will gain access to the credentials and will be able to use them to harm the organization (from data leakage to costly resource deployment such as VMs for Bitcoin mining).

Below are alternatives to using hard-coded credentials:

- Use an IAM role to gain temporary access to resources, instead of using static (or long-lived) credentials.

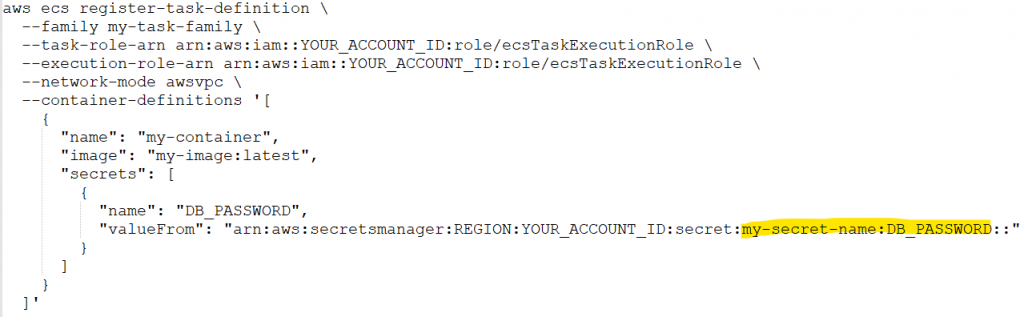

- Use AWS Secrets Manager or AWS Systems Manager Parameter Store to generate, store, retrieve, rotate, and revoke any static credentials. Connect your applications and CI/CD processes to AWS Secrets Manager, to pull the latest credentials. In the example below we see an AWS CLI command for an ECS task pulling a database password from AWS Secrets Manager:
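
For the ECS case, the difference shows up in the container definition: a plaintext value under `environment` versus a reference under `secrets`. In the sketch below, the container name, image, and secret ARN are hypothetical, and `plaintext_secret_names` is an illustrative helper that flags suspicious environment variable names.

```python
SENSITIVE_HINTS = ("PASSWORD", "SECRET", "TOKEN", "KEY")


def build_container_definition(name, image, secret_arn):
    """Container definition that injects DB_PASSWORD from AWS Secrets Manager."""
    return {
        "name": name,
        "image": image,
        "essential": True,
        # ECS resolves the value at task start; it never appears in the definition.
        "secrets": [{"name": "DB_PASSWORD", "valueFrom": secret_arn}],
    }


def plaintext_secret_names(container_definition):
    """Return environment variable names that look like hard-coded credentials."""
    return [
        entry["name"]
        for entry in container_definition.get("environment", [])
        if any(hint in entry["name"].upper() for hint in SENSITIVE_HINTS)
    ]


# Anti-pattern: the password is stored in cleartext in the task definition.
hard_coded = {
    "name": "web",
    "image": "example/web:1.0",
    "environment": [{"name": "DB_PASSWORD", "value": "not-a-real-password"}],
}

# Hypothetical secret ARN.
managed = build_container_definition(
    "web",
    "example/web:1.0",
    "arn:aws:secretsmanager:eu-west-1:111122223333:secret:prod/db-password",
)

print(plaintext_secret_names(hard_coded))
print(plaintext_secret_names(managed))
```

For this to work, the task execution role needs permission to read the referenced secret (for example, `secretsmanager:GetSecretValue` on that ARN).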

Ignoring service cost

Almost every service in AWS has its own pricing, which we need to be aware of while planning an architecture. Sometimes the pricing is fairly easy to understand, such as EC2 on-demand (pay for the time an instance is running), and sometimes the cost estimation can be fairly complex, such as Amazon S3 (storage cost per storage class, actions such as PUT or DELETE, egress data, data retrieval from archive, etc.).

When deploying resources in an AWS environment, we may find ourselves paying thousands of dollars every month, simply because we ignore the cost factor.

There is no substitute for visibility into cloud costs. We still have to pay for the services we deploy and consume, but with a few simple steps, we will have at least basic visibility into the costs, before we go bankrupt.

In the example below, we can see a monthly budget created in an AWS account to send email notifications when the monthly spend reaches $500:
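
Such a budget can be described with two small JSON documents, which is the shape that `aws budgets create-budget` accepts through its `--budget` and `--notifications-with-subscribers` parameters. The budget name and email address below are hypothetical, and the sketch only builds the documents without calling AWS.

```python
import json


def build_monthly_budget(name, limit_usd, email, threshold_percent=100):
    """Return (budget, notifications) for a monthly cost budget with one email alert."""
    budget = {
        "BudgetName": name,
        "BudgetLimit": {"Amount": str(limit_usd), "Unit": "USD"},
        "TimeUnit": "MONTHLY",
        "BudgetType": "COST",
    }
    notifications = [
        {
            "Notification": {
                "NotificationType": "ACTUAL",  # alert on actual, not forecasted, spend
                "ComparisonOperator": "GREATER_THAN",
                "Threshold": threshold_percent,
                "ThresholdType": "PERCENTAGE",
            },
            "Subscribers": [{"SubscriptionType": "EMAIL", "Address": email}],
        }
    ]
    return budget, notifications


# Hypothetical budget name and recipient.
budget, notifications = build_monthly_budget("monthly-500-usd", 500, "finops@example.com")
print(json.dumps(budget, indent=2))
print(json.dumps(notifications, indent=2))
```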

Naturally, the best advice is to embed cost in any design consideration, as explained in “The Frugal Architect” (https://www.thefrugalarchitect.com/).

Failing to use auto-scaling

One of the biggest benefits of the public cloud and modern applications is the use of auto-scaling capabilities to add or remove resources according to customer demands.

Without autoscaling, our applications will either hit resource limits (such as CPU, memory, or network) and suffer availability issues (in case an application was deployed on a single EC2 instance or a single RDS node), which will have a direct impact on customers, or incur high costs (in case we have provisioned more compute resources than required).

Many IT veterans think of auto-scaling as the ability to add more compute resources such as additional EC2 instances, ECS tasks, DynamoDB capacity, Aurora replicas, etc.

Autoscaling is not just about adding resources, but also about the ability to adjust the number of resources (i.e., compute instances/replicas) to actual customer demand.
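
The idea of adjusting in both directions can be sketched with a simple proportional rule, similar to the formula documented for the Kubernetes Horizontal Pod Autoscaler. This is an illustration of the concept under assumed numbers, not the exact algorithm any AWS scaling policy uses.

```python
import math


def desired_capacity(current, metric_value, target_value, min_capacity=1, max_capacity=10):
    """Scale the instance count in proportion to how far the metric is from its target."""
    if current < 1 or target_value <= 0:
        raise ValueError("current must be >= 1 and target_value must be positive")
    proposed = math.ceil(current * metric_value / target_value)
    # Clamp to the configured bounds so we never scale to zero or run away.
    return max(min_capacity, min(max_capacity, proposed))


# Hypothetical fleet targeting 60% average CPU.
print(desired_capacity(current=4, metric_value=90, target_value=60))  # load spike: scale out
print(desired_capacity(current=6, metric_value=20, target_value=60))  # quiet period: scale in
```

The upper bound matters as much as the formula: it caps the bill when a traffic spike turns out to be an attack rather than real demand.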

A good example of a scale-out scenario (i.e., adding more compute resources) is a publicly accessible web application under a DDoS attack. An autoscaling capability will allow us to add more compute resources, to keep the application accessible to legitimate customers’ requests, until the DDoS is handled by the ISP, or by AWS (through the AWS Shield Advanced service).

A good example of a scale-in scenario (i.e., removing compute resources) is 24 hours after Black Friday or Cyber Monday, when an e-commerce website receives less traffic from customers, and fewer resources are required. It makes sense when we think about the number of required VMs, Kubernetes Pods, or ECS tasks, but what about databases?

Some services, such as Aurora Serverless v2, support scaling to zero capacity: after a period of inactivity, the database automatically pauses by scaling down to 0 Aurora Capacity Units (ACUs), allowing you to reduce cost for workloads with periods of inactivity.

Failing to leverage storage class

A common mistake when building applications in AWS is to choose the default storage alternative (such as Amazon S3 or Amazon EFS), without considering data access patterns, and as a result, we may be paying a lot of money every month, while storing objects/files (such as logs or snapshots), which are not accessed regularly.

As with any work with AWS services, we need to review the service documentation, understand the data access patterns for each workload that we design, and choose the right storage service and storage class.

Amazon S3 is the most commonly used storage solution for cloud-native applications (for logs, static content, AI/ML, data lakes, etc.).

When using S3, consider the following:

- For unpredictable data access patterns (for example when you cannot determine when or how often objects will be accessed), choose Amazon S3 Intelligent-Tiering.

- If you know the access pattern of your data (for example, logs accessed for 30 days and then archived), configure S3 Lifecycle policies.
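
For the "logs accessed for 30 days and then archived" case, a lifecycle configuration could look like the sketch below. The prefix, rule ID, archive storage class, and one-year expiration are assumptions for illustration. The resulting document has the shape accepted by `aws s3api put-bucket-lifecycle-configuration`.

```python
import json


def build_log_lifecycle(prefix="logs/", archive_after_days=30, expire_after_days=365):
    """Lifecycle rule: archive objects under a prefix, then delete them later."""
    if expire_after_days <= archive_after_days:
        raise ValueError("expiration must come after the archive transition")
    return {
        "Rules": [
            {
                "ID": "archive-old-logs",
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [{"Days": archive_after_days, "StorageClass": "GLACIER"}],
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


print(json.dumps(build_log_lifecycle(), indent=2))
```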

Amazon EFS is commonly used when you need to share file storage with concurrent access (such as multiple EC2 instances reading from a shared storage, or multiple Kubernetes Pods writing data to a shared storage).

When using EFS, consider the following:

- For unpredictable data access patterns (for example, when files move back and forth between being frequently accessed and rarely accessed), choose Amazon EFS Intelligent-Tiering.

- If you know the access pattern of your data (for example logs older than 30 days), configure lifecycle policies.
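
On EFS, the equivalent setting is a list of lifecycle policies on the file system. The sketch below builds that list for a 30-day rule. The supported-days table covers only a subset of the values the EFS API accepts, and the helper name is illustrative.

```python
# Subset of the TransitionToIA values accepted by EFS (illustrative, not exhaustive).
SUPPORTED_IA_DAYS = {
    7: "AFTER_7_DAYS",
    14: "AFTER_14_DAYS",
    30: "AFTER_30_DAYS",
    60: "AFTER_60_DAYS",
    90: "AFTER_90_DAYS",
}


def build_efs_lifecycle_policies(days_until_ia=30, return_on_access=True):
    """Move files to Infrequent Access after N days; optionally move them back on access."""
    if days_until_ia not in SUPPORTED_IA_DAYS:
        raise ValueError(f"unsupported number of days: {days_until_ia}")
    policies = [{"TransitionToIA": SUPPORTED_IA_DAYS[days_until_ia]}]
    if return_on_access:
        policies.append({"TransitionToPrimaryStorageClass": "AFTER_1_ACCESS"})
    return policies


print(build_efs_lifecycle_policies())
```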

Summary

The more we deep dive into application design and architecture, the more anti-patterns we will find. Our applications will run, but they will be inefficient, costly, insecure, and more.

In this blog post, we have reviewed anti-patterns from various domains (such as security, cost, and resource optimization).

I encourage readers to read the AWS documentation (including the AWS Well-Architected Framework and the AWS Decision Guides), consider what you are trying to achieve, and design your architectures accordingly.

Embrace a dynamic mindset: always question your past decisions, as there might be better or more efficient ways to achieve similar goals.

Hosting Services — The Short and Mid-Term Solution Before Transition to the Public Cloud

Anyone who follows my posts on social networks knows that I am an advocate of the public cloud and cloud adoption.

According to Synergy Research Group, by 2029, “hyperscale operators will account for over 60% of all capacity, while on-premise will drop to just 20%”.

In this blog post, I will share my personal opinion on where I see the future of IT infrastructure.

Before we begin the conversation, I believe we can all agree that many organizations are still maintaining on-prem data centers and legacy applications, and they are not going away any time in the foreseeable future.

Another thing I hope we can agree on is that IT infrastructure aims to support the business, but it does not produce direct revenue for the organization.

Now, let us talk about how I see the future of IT infrastructure in the short or mid-term, and in the long term.

Short or Mid-term future — Hosting or co-location services

Many organizations are still maintaining on-prem data centers of various sizes. There are many valid reasons to keep maintaining on-prem data centers, to name a few:

- Keeping investment in purchased hardware (physical servers, network equipment, storage arrays, etc.)

- Multi-year software license agreements to commercial vendors (such as virtualization, operating systems, databases, etc.)

- Legacy application constraints (from licenses bound to a physical CPU to monolithic applications that were developed many years ago and simply were not designed to run in cloud environments, at least not efficiently)

- Regulatory constraints requiring data (such as customer data) to be kept in a specific country/region.

- Large volumes of data generated and stored over many years, and the cost/time constraints of moving them to the public cloud.

- Employee knowledge: many IT veterans have already invested years in learning how to deploy hardware/virtualization and how to maintain network or storage equipment, and let us be honest, they may be afraid of making a change and learning something new, such as moving to the public cloud.

Regardless of how organizations see on-prem data centers, they will never have the expertise that large-scale hosting providers have, to name a few:

- Ability to deploy high-scale and redundant data centers, in multiple locations (think about primary and DR data centers or active-active data centers)

- Ability to invest in physical security (i.e., who has physical access to the data center and specific cage), while providing services to multiple different customers.

- Ability to build and maintain sustainable data centers, using the latest energy technologies, while keeping the carbon footprint to the minimum.

- Ability to recruit highly skilled personnel (from IT, security, DevOps, DBA, etc.) to support multiple customers of the hosting service data centers.

What are the benefits of hosting services?

Organizations debating about migration to the cloud, and the efforts required to re-architect or adjust their applications and infrastructure to make them efficient to run in the public cloud, could use hosting services.

Here are a few examples of using hosting services:

- Keeping legacy hardware that still produces value for the business, such as Mainframe servers (for customers such as banks or government agencies). Organizations would still be able to consume their existing applications, without having to take care of the ongoing maintenance of legacy hardware.

- Cloud local alternative on-prem — Several CSPs are offering their hardware racks for local or hosting facilities deployment, such as AWS Outposts, Azure Local, or Oracle Cloud@Customer, allowing customers to consume hardware identical to the hardware that the CSPs offer (managed remotely by the CSPs), while using the same APIs, but locally.

- Many organizations are limited by their Internet bandwidth. By using hosting services, organizations can leverage the network bandwidth of their hosting provider, and the ability to have multiple ISPs, allowing them both inbound and outbound network connectivity.

- Many organizations would like to begin developing GenAI applications, which requires them to invest in expensive GPU hardware. The latest hardware costs a lot of money, requires dedicated cooling, and gets outdated over time. Instead, a hosting service provider can purchase and maintain a large number of GPUs, offering its customers the ability to consume GPUs for AI/ML workloads, while paying only for the time they used the hardware.

- Modern pricing model — Hosting services can offer customers modern pricing plans, such as paying for the actual amount of storage consumed, paying for the time a machine was running (or offering to purchase hardware for several years in advance), Internet bandwidth (or traffic consumed on the network equipment), etc.

Hosting services and the future of modern applications

Just because organizations migrate from on-prem to hosting services does not mean they cannot begin developing modern applications.

Although hosting services do not offer all the capabilities of the public cloud (such as infinite scale, or performing actions and retrieving consumption information via API calls), there are still many things organizations can begin doing today, to name a few:

- Deploy applications inside containers: a hosting provider can deploy and maintain a Kubernetes control plane for its customers, allowing them to consume Kubernetes without the burden of Kubernetes maintenance. A common example of a Kubernetes distribution that can be deployed locally at a hosting provider facility, and later on be used in public cloud environments, is OpenShift.

- Consume object storage: a hosting provider can deploy and maintain object storage services (such as MinIO), allowing its customers to begin consuming storage capabilities that exist in cloud-native environments.

- Consume open-source queuing services: customers can deploy message brokers such as ActiveMQ or RabbitMQ to develop asynchronous applications, and when moving to the public cloud, switch to the cloud provider’s managed service alternatives.

- Consume message streaming services: customers can begin deploying event-driven architectures using Apache Kafka to stream a large number of messages in near real-time, and when moving to the public cloud, switch to the cloud provider’s managed service alternatives.

- Deploy components using Infrastructure as Code. Some of the common IaC alternatives such as Terraform and Pulumi, already support providers from the on-prem environment (such as Kubernetes), which allows organizations to already begin using modern deployment capabilities.

Note — perhaps the biggest downside of hosting services in the area of modern applications is the lack of function-as-a-service capabilities. Most FaaS are vendor-opinionated, and I have not heard of many customers using FaaS locally.

Transitioning from on-prem to hosting services

The transition from on-prem to a hosting facility should be straightforward. After all, you are keeping your existing hardware (from Mainframe servers to physical appliances) and simply having the hosting provider take care of the ongoing maintenance.

Hosting services will allow organizations access to managed infrastructure (similar to the Infrastructure as a Service model in the public cloud), and some providers will also offer you managed services (such as storage, WAF, DDoS protection, and perhaps even managed Kubernetes or databases).

Similarly to the public cloud, the concept of shared responsibility is still relevant. The hosting provider is responsible for all the lower layers (from physical security, physical hardware, network, and storage equipment, up to the virtualization layer), and organizations are responsible for whatever happens within their virtual servers (such as who has access, what permissions are granted, what data is being stored, etc.). In case an organization needs to comply with regulations (such as PCI-DSS, FedRAMP, etc.), the organization needs to work with the hosting provider to figure out how to comply with the regulation end-to-end (do not assume that if your hosting provider’s physical layer is compliant, so are your OS and data layers).

Long-term future — The public cloud

I have been a cloud advocate for many years, so my opinion about the public cloud is a little bit biased.

The public cloud brings agility into the traditional IT infrastructure — when designing an architecture, you have multiple ways to achieve similar goals — from traditional architecture based on VMs to modern designs such as microservice architecture built on top of containers, FaaS, or event-driven architecture.

One of the biggest benefits of using the public cloud is the ability to consume managed services (such as managed database services, managed Kubernetes services, managed load-balancers, etc.), where from a customer point of view, you do not need to take care of compute scale (i.e., selecting the underlying compute hardware resources or number of deployed compute instances), or the ongoing maintenance of the underlying layers.

Elasticity and infinite resource scale are huge benefits of the public cloud (at least for the hyper-scale cloud providers), which no data center can compete with. Organizations can design architectures that will dynamically adjust the number of resources according to customers’ load (up or down).

For many IT veterans, moving to the public cloud requires a long learning curve (among others, switching from being a specialist in a certain domain to becoming a generalist in multiple domains). Organizations need to invest resources in employee training and knowledge sharing.

Another important topic that organizations need to focus on while moving to the public cloud is cost — it should be embedded in any architecture or design decision. Engineers and architects need to understand the pricing model for each service they are planning to use and try to select the most efficient service alternative (such as storage tier, compute size, database type, etc.)

Summary

The world of IT infrastructure as we currently know it is constantly changing. Organizations would still like to gain value from their past investment in hardware and legacy applications. In my personal opinion, legacy applications that still produce value (until they finally reach the decommission phase) are simply not worth the burden of maintaining on-prem data centers.

Organizations should focus on what brings them value, such as developing new products or providing better services for their customers, and shift the ongoing maintenance to providers who specialize in this field.

For startups who were already born in the cloud, the best alternative is building cloud-native applications in the public cloud.

For traditional organizations that still maintain legacy hardware and applications, the most suitable alternative for the short or mid-term is to move away from their existing data centers to one of the hyper-scale or dedicated hosting providers in their local country.

In the long term, organizations should assess all their existing applications and infrastructure, and either decommission old applications or re-architect / modernize them to be deployed in the public cloud, using managed services, modern architectures (microservices, containers, FaaS, event-driven architecture, etc.), and modern deployment methods (i.e., Infrastructure as Code).

The future will probably be a mix of hybrid clouds (hosting facilities combined with one or more public clouds), and single or multiple public cloud providers.

Stop bringing old practices to the cloud

When organizations migrate to the public cloud, they often mistakenly look at the cloud as “somebody else’s data center”, or “a suitable place to run a disaster recovery site”, hence, bringing old practices to the public cloud.

In this blog post, I will review some of the common old (and perhaps bad) practices organizations are still using today in the cloud.

Mistake #1 — The cloud is cheaper

I often hear IT veterans comparing the public cloud to the on-prem data center as a cheaper alternative, due to versatile pricing plans and cost of storage.

In some cases, this may be true, but focusing on specific use cases from a cost perspective is too narrow and misses the benefits of the public cloud: agility, scale, automation, and managed services.

Don’t get me wrong — cost is an important factor, but it is time to look at things from an efficiency point of view and embed it as part of any architecture decision.

Begin by looking at the business requirements and ask yourself (among others):

- What are you trying to achieve, and what capabilities do you need? Once you know, figure out which services will allow you to accomplish your needs.

- Do you need persistent storage? Great. What are your data access patterns? Do you need the data to be available in real time, or can you store data in an archive tier?

- Your system needs to respond to customers’ requests — does your application need to provide a fast response to API calls, or is it ok to provide answers from a caching service, while calls are going through an asynchronous queuing service to fetch data?

Mistake #2 — Using legacy architecture components

Many organizations are still using legacy practices in the public cloud — from moving VMs in a “lift & shift” pattern to cloud environments, using SMB/CIFS file services (such as Amazon FSx for Windows File Server, or Azure Files), deploying databases on VMs and manually maintaining them, etc.

For static and stable legacy applications, the old practices will work, but for how long?

Begin by asking yourself:

- How will your application handle unpredictable loads? Autoscaling is great, but can your application scale down when resources are not needed?

- What value are you getting by maintaining backend services such as storage and databases?

- What value are you getting by continuing to use commercially licensed database engines? Perhaps it is time to consider using open-source or community-based engines (such as Amazon RDS for PostgreSQL, Azure Database for MySQL, or OpenSearch) to have wider community support and perhaps be able to minimize migration efforts to another cloud provider in the future.

Mistake #3 — Using traditional development processes

In the old data center, we used to develop monolithic applications, having a stack of components (VMs, databases, and storage) glued together, making it challenging to release new versions/features, upgrade, scale, etc.

As more organizations embraced the public cloud, the shift to a DevOps culture allowed them to develop and deploy new capabilities much faster, using smaller teams that each own a specific component, can independently release new versions of it, and can benefit from autoscaling in response to real-time load, regardless of other components in the architecture.

Instead of hard-coded, manual configuration files, prone to human mistakes, it is time to move to modern CI/CD processes. It is time to automate everything that does not require a human decision, handle everything as code (Infrastructure as Code, Policy as Code, and the actual application’s code), store everything in a central code repository (and later on in a central artifact repository), and be able to control authorization, auditing, roll-back (in case of bugs in code), and fast deployments.

Using CI/CD processes allows us to minimize changes between different SDLC stages: we use the same code (in the code repository) to deploy Dev/Test/Prod environments, use environment variables to switch between target environments, backend service connection settings, and credentials (keys, secrets, etc.), and apply the same testing capabilities (such as static code analysis, vulnerable package detection, etc.).
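To make the "same code, different environment" idea concrete, here is a minimal sketch in Python. The variable names (`APP_ENV`, `DB_HOST`, `DB_NAME`, `LOG_LEVEL`) and the per-environment defaults are assumptions made up for illustration; in a real pipeline they would be injected by the CI/CD tool, and sensitive values would come from a secrets management service rather than plain variables.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    environment: str
    db_host: str
    db_name: str
    log_level: str


# Hypothetical defaults per SDLC stage (illustrative only).
_DEFAULT_LOG_LEVEL = {"dev": "DEBUG", "test": "INFO", "prod": "WARNING"}


def load_settings(env=None):
    """Build settings from environment variables instead of hard-coded files."""
    env = os.environ if env is None else env
    environment = env.get("APP_ENV", "dev").lower()
    if environment not in _DEFAULT_LOG_LEVEL:
        raise ValueError(f"unknown APP_ENV: {environment!r}")
    return Settings(
        environment=environment,
        db_host=env["DB_HOST"],  # required: fail fast if the pipeline did not set it
        db_name=env.get("DB_NAME", f"app_{environment}"),
        log_level=env.get("LOG_LEVEL", _DEFAULT_LOG_LEVEL[environment]),
    )
```

The same `load_settings()` call runs unchanged in Dev, Test, and Prod; only the variables supplied by the deployment differ.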

Mistake #4 — Static vs. Dynamic Mindset

Traditional deployment had a static mindset. Applications were packed inside VMs, containing code/configuration, data, and unique characteristics (such as session IDs). In many cases architectures kept a 1:1 correlation between the front-end component, and the backend, meaning, a customer used to log in through a front-end component (such as a load-balancer), a unique session ID was forwarded to the presentation tier, moving to a specific business logic tier, and from there, sometimes to a specific backend database node (in the DB cluster).

Now consider what will happen if the front tier crashes or is unresponsive due to high load. What will happen if a mid-tier or back-end tier is not able to respond on time to a customer’s call? How will such issues impact customers’ experience, having to refresh or log in all over again?

The cloud offers us a dynamic mindset. Workloads can scale up or down according to load. Workloads may be up and running, offering services for a short amount of time, and be decommissioned when no longer required.

It is time to consider immutability. Store session IDs outside the compute nodes (whether VMs, containers, or Function-as-a-Service).

Still struggling with patch management? It’s time to create immutable images, and simply replace an entire component with a newer version, instead of having to pet each running compute component.

Use CI/CD processes to package compute components (such as VM or container images). Keep artifacts as small as possible (to decrease deployment and load time).

Regularly scan for outdated components (such as binaries and libraries), and on any development cycle update the base images.

Keep all data outside the images, on persistent storage. It is time to embrace object storage (suitable for a variety of use cases, including logging, data lakes, and machine learning).

Store unique configuration inside environment variables, loaded at deployment/load time (from services such as AWS Systems Manager Parameter Store or Azure App Configuration), and for variables containing sensitive information use secrets management services (such as AWS Secrets Manager, Azure Key Vault, or Google Secret Manager).

Mistake #5 — Old observability mindset

Many organizations that migrated workloads to the public cloud kept their investment in legacy monitoring solutions (mostly built on top of deployed agents) and keep shipping logs (application, performance, security, etc.) from the cloud environment back to on-prem. They do so without considering the cost of data egress from the cloud, or the cost of storing the vast amounts of logs generated by the various cloud services. In many cases, the logs are still based on static log files, and sometimes even on legacy protocols (such as Syslog).

It is time to embrace a modern mindset. It is fairly easy to collect logs from various services in the cloud (as a matter of fact, some logs such as audit logs are enabled for 90 days by default).

It is time to consider cloud-native services, from SIEM services (such as Microsoft Sentinel or Google Security Operations) to observability services (such as Amazon CloudWatch, Azure Monitor, or Google Cloud Observability), capable of ingesting an (almost) infinite number of events, streaming logs and metrics in near real-time (instead of relying on log files), and providing an overview of an entire customer-facing service (made up of various compute, network, storage, and database services).

Speaking about security: the dynamic nature of cloud environments does not allow us to keep legacy systems that scan configuration and attack surface at long intervals (such as 24 hours or several days), only to find out that our workload is exposed to unauthorized parties, that we made a mistake and left configuration in a vulnerable state (still deploying resources exposed to the public Internet?), or that we kept our components outdated.

It is time to embrace automation and continuously scan configuration and authorization, and gain actionable insights on what to fix, as soon as possible (and what is vulnerable, but not directly exposed from the Internet, and can be taken care of at lower priority).

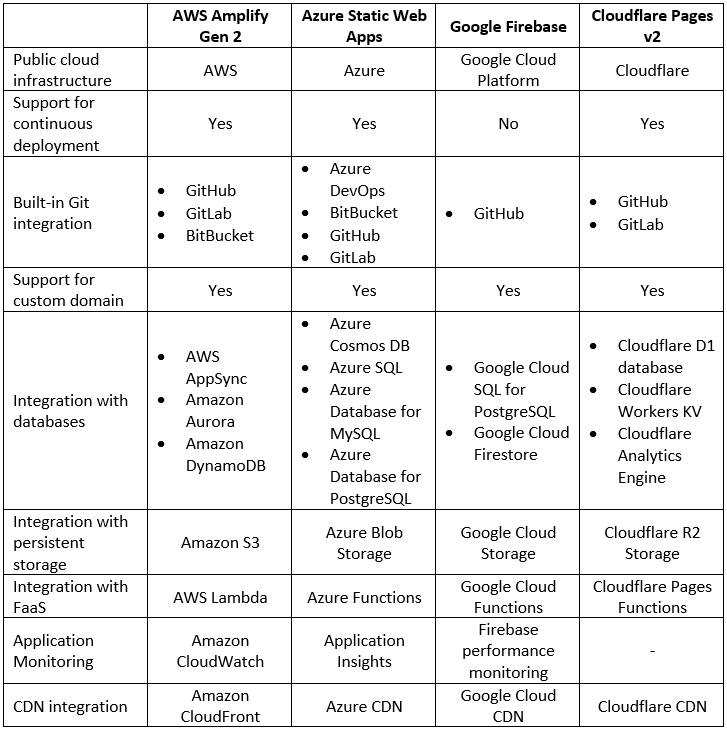

Mistake #6 — Failing to embrace cloud-native services

This is often due to a lack of training and knowledge about cloud-native services or capabilities.

Many legacy workloads were built on top of 3-tier architecture since this was the common way most IT/developers knew for many years. Architectures were centralized and monolithic, and organizations had to consider scale, and deploy enough compute resources, many times in advance, failing to predict spikes in traffic/customer requests.

It is time to embrace distributed systems, based on event-driven architectures, using managed services (such as Amazon EventBridge, Azure Event Grid, or Google Eventarc), where the cloud provider takes care of load (i.e., deploying enough back-end compute services), and we can stream events, and be able to read events, without having to worry whether the service will be able to handle the load.

We can’t talk about cloud-native services without mentioning functions (such as AWS Lambda, Azure Functions, or Cloud Run functions). Although functions have their challenges (being vendor-opinionated, maximum execution time, cold starts, learning curve, etc.), they have so much potential when designing modern applications. To name a few examples where FaaS is suitable, we can look at real-time data processing (such as IoT sensor data), GenAI text generation (such as text responses for chatbots, providing answers to customers in call centers), and video transcoding (such as converting videos to different formats or resolutions), and those are just a small number of examples.

Functions can be suitable in a microservice architecture, where, for example, one microservice can stream logs to a managed Kafka service, some microservices can trigger functions to run queries against the backend database, and some can store data in a fully-managed and serverless database (such as Amazon DynamoDB, Azure Cosmos DB, or Google Spanner).

Mistake #7 — Using old identity and access management practices

No doubt we need to authenticate and authorize every request and keep the principle of least privilege, but how many times have we seen bad practices such as storing credentials in code or configuration files? (“It’s just in the Dev environment; we will fix it before moving to Prod…”)

How many times have we seen developers making changes directly in production environments?

In the cloud, IAM is tightly integrated into all services, and some cloud providers (such as AWS, with its IAM service) allow you to configure fine-grained permissions down to specific resources (for example, allow only users from specific groups, who performed MFA-based authentication, access to a specific S3 bucket).

It is time to switch from using static credentials to using temporary credentials or even roles — when an identity requires access to a resource, it will have to authenticate, and its required permissions will be reviewed until temporary (short-lived / time-based) access is granted.

It is time to embrace a zero-trust mindset as part of architecture decisions. Assume identities can come from any place, at any time, and we cannot automatically trust them. Every request needs to be evaluated, authorized, and eventually audited for incident response/troubleshooting purposes.

When a request to access a production environment is raised, we need to embrace break-glass processes, making sure we authorize the right level of permissions (usually for members of the SRE or DevOps team), and that the permissions are automatically revoked afterwards.

Mistake #8 — Rushing into the cloud with traditional data center knowledge

We should never ignore our team’s knowledge and experience.

Rushing to adopt cloud services, while using old data center knowledge is prone to failure — it will cost the organization a lot of money, and it will most likely be inefficient (in terms of resource usage).

Instead, we should embrace the change, learn how cloud services work, gain hands-on practice (by deploying test labs and playing with the different services in different architectures), and not be afraid to fail and quickly recover.

To succeed in working with cloud services, you should be a generalist. The old mindset of specialty in certain areas (such as networking, operating systems, storage, database, etc.) is not sufficient. You need to practice and gain wide knowledge about how the different services work, how they communicate with each other, what are their limitations, and don’t forget — what are their pricing options when you consider selecting a service for a large-scale production system.

Do not assume traditional data center architectures will be sufficient to handle the load of millions of concurrent customers. The cloud allows you to create modern architectures, and in many cases, there are multiple alternatives for achieving business goals.

Keep learning and searching for better or more efficient ways to design your workload architectures (who knows, maybe in a year or two there will be new services or new capabilities to achieve better results).

Summary

There is no doubt that the public cloud allows us to build and deploy applications for the benefit of our customers while breaking loose from the limitations of the on-prem data center (in terms of automation, scale, infinite resources, and more).

Embrace the change by learning how to use the various services in the cloud, adopt new architecture patterns (such as event-driven architectures and APIs), prefer managed services (to allow you to focus on developing new capabilities for your customers), and do not be afraid to fail — this is the only way you will gain knowledge and experience using the public cloud.

Enforcing guardrails in the AWS environment

When building workloads in the public cloud, one of the most fundamental topics to look at is permissions to access resources and take actions.

This is true for both human identities (also known as interactive authentication) and for application or service accounts (also known as non-interactive authentication).

AWS offers its customers multiple ways to enforce guardrails – a mechanism to allow developers or DevOps teams to achieve their goals (i.e., develop and deploy new applications/capabilities) while keeping pre-defined controls (as explained later in this blog post).

In this blog post, I will review the various alternatives for enforcing guardrails in AWS environments.

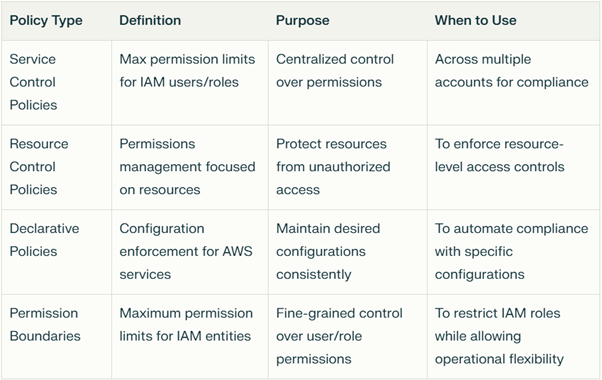

Service control policies (SCPs)

SCPs are organizational policies written in JSON format. They are available only for customers who enabled all features of AWS Organizations.

Unlike IAM policies, which grant identities access to resources, SCPs allow configuring the maximum allowed permissions identities (IAM users and IAM roles) have over resources (i.e., permissions guardrails), within an AWS Organization.

SCPs can be applied at an AWS Organization root hierarchy (excluding organization management account), an OU level, or to a specific AWS account in the AWS Organization, which makes them impact maximum allowed permissions outside IAM identities control (outside the context of AWS accounts).

AWS does not grant any access by default – if an AWS service has not been allowed by an SCP at every level of the AWS Organization hierarchy (from the root, through each OU, down to the account), no identity in that account will be able to consume it.

A good example of using SCP is to configure which AWS regions are enabled at the organization level, and as a result, no resources will be created in regions that were not specifically allowed. This can be very useful if you have a regulation that requires storing customers’ data in a specific AWS region (for example – keep all EU citizens’ data in EU regions and deny all regions outside the EU).
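As a rough illustration of the region guardrail described above, the sketch below builds such an SCP as a Python dictionary and prints it as JSON. The region list and the set of exempted global services are assumptions chosen for the example (global services usually need an exemption because their requests are not tied to the allowed regions); adjust both to your own requirements before using anything like this.

```python
import json


def build_region_deny_scp(allowed_regions, exempt_actions):
    """Return an SCP that denies requests made outside the allowed regions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideAllowedRegions",
                "Effect": "Deny",
                # Everything except the exempted (global) services is denied...
                "NotAction": sorted(exempt_actions),
                "Resource": "*",
                # ...when the request targets a region outside the allow list.
                "Condition": {
                    "StringNotEquals": {
                        "aws:RequestedRegion": sorted(allowed_regions)
                    }
                },
            }
        ],
    }


# Hypothetical values: two EU regions and a few global services.
scp = build_region_deny_scp(
    allowed_regions={"eu-west-1", "eu-central-1"},
    exempt_actions={"iam:*", "organizations:*", "sts:*", "support:*"},
)
print(json.dumps(scp, indent=2))
```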

Another example of configuring guardrails using SCP is enforcing encryption at rest for all objects in S3 buckets. See the policy below:

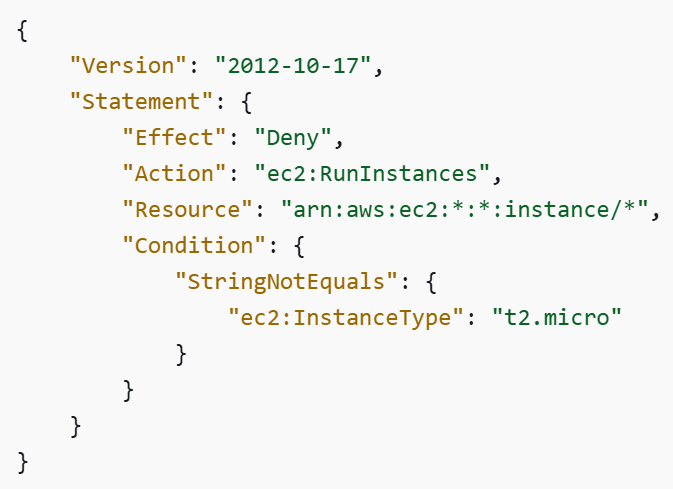

SCPs are not only limited to security controls; they can also be used for cost. In the example below, we allow the deployment of specific EC2 instance types:

Source: https://docs.aws.amazon.com/organizations/latest/userguide/orgs_manage_policies_scps_syntax.html
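A minimal sketch of what such a policy can look like, modelled on the pattern in the AWS documentation referenced above: deny `ec2:RunInstances` unless the requested instance type is on an approved list. The instance types used here are placeholders, and the helper that measures the policy length is only an approximation (AWS counts the characters of the policy as stored, so the compact form below is a lower bound).

```python
import json

SCP_MAX_CHARS = 5120  # maximum SCP size in characters


def build_instance_type_scp(allowed_types):
    """Deny launching EC2 instances whose type is not in allowed_types."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "RequireApprovedInstanceTypes",
                "Effect": "Deny",
                "Action": "ec2:RunInstances",
                "Resource": "arn:aws:ec2:*:*:instance/*",
                "Condition": {
                    "StringNotEquals": {
                        "ec2:InstanceType": sorted(allowed_types)
                    }
                },
            }
        ],
    }


def policy_size(policy):
    """Length of the policy when serialized without extra whitespace."""
    return len(json.dumps(policy, separators=(",", ":")))


# Placeholder instance types for illustration.
instance_scp = build_instance_type_scp({"t3.micro", "t3.small"})
assert policy_size(instance_scp) <= SCP_MAX_CHARS
```

Checking the size in the pipeline that deploys the policy gives early warning before an ever-growing list of conditions hits the limit.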

When designing SCPs as guardrails, we need to recall that they have limitations. A service control policy has a maximum size of 5120 characters (including spaces), which limits the number of conditions and the amount of fine-grained policy you can configure using SCPs.

Additional references:

- https://docs.aws.amazon.com/organizations/latest/userguide/orgs_manage_policies_scps.html

- https://github.com/aws-samples/service-control-policy-examples

Resource control policies (RCPs)

RCPs, similarly to SCPs, are organizational policies written in JSON format. They are available only for customers who enabled all features of AWS Organizations.

Unlike IAM policies, which grant identities access to resources, RCPs allow configuring the maximum allowed permissions on resources, within an AWS Organization.

RCPs are not enough to be able to grant permissions to resources – they only serve as guardrails. To be able to access a resource, you need to assign the resource an IAM policy (an identity-based or resource-based policy).

Currently (December 2024), RCPs support only Amazon S3, AWS STS, Amazon SQS, AWS KMS and AWS Secrets Manager.

RCPs can be applied at an AWS Organization root hierarchy (excluding organization management account), an OU level, or to a specific AWS account in the AWS Organization, which makes them impact maximum allowed permissions outside IAM identities control (outside the context of AWS accounts).

An example of using RCP can be to require a minimum version of TLS protocol when accessing an S3 bucket:

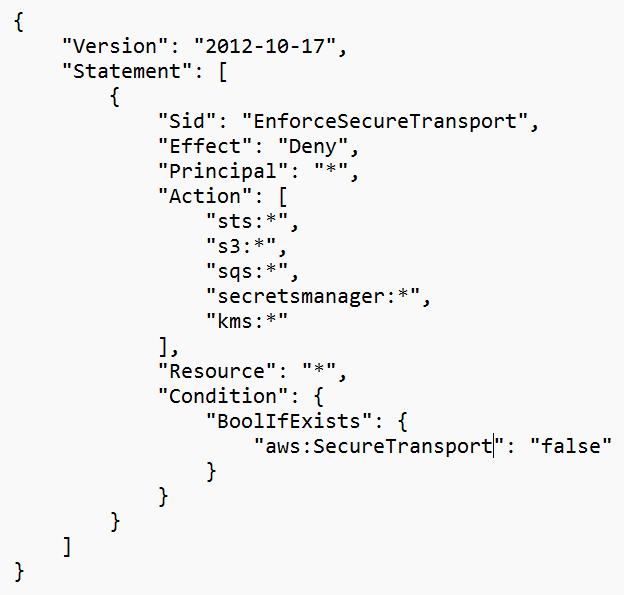

Another example can be to enforce HTTPS traffic to all supported services:

Source: https://docs.aws.amazon.com/organizations/latest/userguide/orgs_manage_policies_rcps_examples.html
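A minimal sketch of such an RCP, modelled on the pattern in the AWS examples referenced above: deny any request to the supported services when it does not arrive over a secure transport. The service prefixes mirror the list of RCP-supported services mentioned earlier (as of December 2024); the function name and `Sid` are illustrative.

```python
import json

# Action prefixes of the services that supported RCPs as of December 2024.
RCP_SUPPORTED_PREFIXES = ("s3", "sts", "sqs", "kms", "secretsmanager")


def build_secure_transport_rcp(service_prefixes=RCP_SUPPORTED_PREFIXES):
    """Return an RCP that denies non-HTTPS requests to the given services."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "EnforceSecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": [f"{prefix}:*" for prefix in service_prefixes],
                "Resource": "*",
                # aws:SecureTransport is "false" when the request was not sent over TLS.
                "Condition": {
                    "BoolIfExists": {"aws:SecureTransport": "false"}
                },
            }
        ],
    }


rcp = build_secure_transport_rcp()
print(json.dumps(rcp, indent=2))
```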

Another example of using RCPs is to prevent external access to sensitive resources such as S3 buckets.

Like SCPs, RCPs have limitations. A resource control policy has a maximum size of 5120 characters (including spaces), which limits the number of conditions and the amount of fine-grained policy you can configure using RCPs.

Additional references:

- https://docs.aws.amazon.com/organizations/latest/userguide/orgs_manage_policies_rcps.html

- https://github.com/aws-samples/resource-control-policy-examples

- https://github.com/aws-samples/data-perimeter-policy-examples

Declarative policies

Declarative policies allow customers to centrally enforce a desired configuration state for AWS services, regardless of changes in service features or APIs.

Declarative policies can be created using AWS Organizations console, AWS CLI, CloudFormation templates, and AWS Control Tower.

Currently (December 2024), declarative policies support only Amazon EC2, Amazon EBS, and Amazon VPC.

Declarative policies can be applied at an AWS Organization root hierarchy, an OU level, or to a specific AWS account in the AWS Organization.

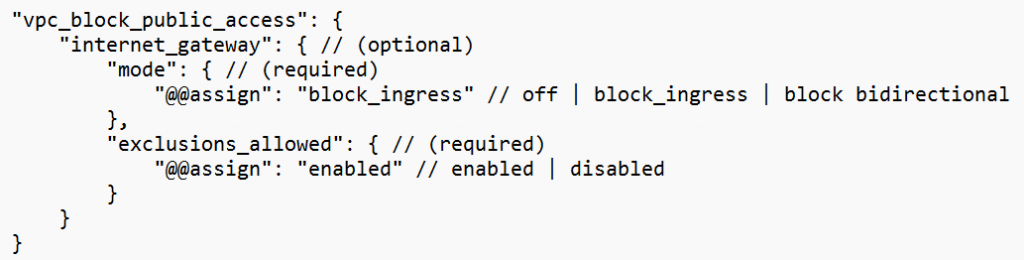

An example of using Declarative policies is to block resources inside a VPC from reaching the public Internet:

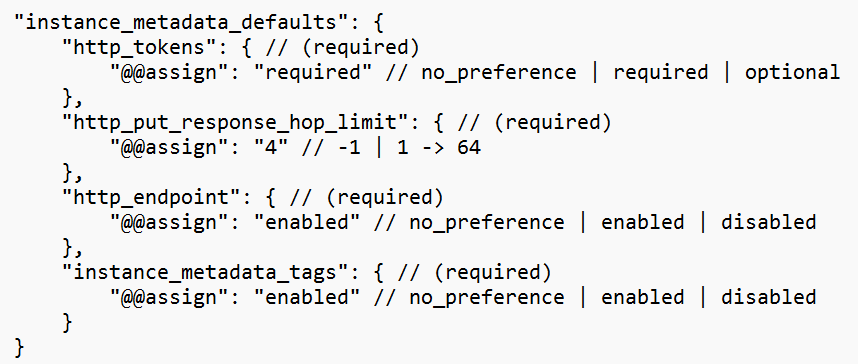

Another example is to configure default IMDS settings for new EC2 instances:
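The sketch below shows roughly what such a policy could look like, built as a Python dictionary. The attribute names (`ec2_attributes`, `instance_metadata_defaults`, `http_tokens`, and so on) and the `@@assign` operator reflect my reading of the declarative policy syntax and should be treated as assumptions to verify against the AWS documentation; the hop limit value is an arbitrary example.

```python
import json


def build_imds_defaults_policy(hop_limit=2):
    """Declarative policy sketch: require IMDSv2 tokens on new EC2 instances."""
    return {
        "ec2_attributes": {
            "instance_metadata_defaults": {
                # "required" enforces session tokens (IMDSv2) by default.
                "http_tokens": {"@@assign": "required"},
                # Assumed to be passed as a string value.
                "http_put_response_hop_limit": {"@@assign": str(hop_limit)},
                "http_endpoint": {"@@assign": "enabled"},
            }
        }
    }


imds_policy = build_imds_defaults_policy()
print(json.dumps(imds_policy, indent=2))
```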

Like SCPs and RCPs, Declarative policies have their limitations. A declarative policy has a maximum size of 10,000 characters (including spaces).

Additional references:

- https://docs.aws.amazon.com/organizations/latest/userguide/orgs_manage_policies_declarative.html

- https://aws.amazon.com/blogs/aws/simplify-governance-with-declarative-policies/

- https://docs.aws.amazon.com/controltower/latest/controlreference/declarative-controls.html

Permission boundaries

Permission boundaries are an advanced IAM feature that defines the maximum permissions granted using identity-based policies attached to an IAM user or IAM role (but not directly to IAM groups), effectively creating a boundary around their permissions, within the context of an AWS account.

Permissions boundaries serve as guardrails, allowing customers to centrally configure restrictions (i.e., limit permissions) on top of IAM policies – they do not grant permissions.

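When applied, the effective permissions are the intersection of the policies involved (a request must be allowed by every policy listed; the “+” below means “evaluated together”, not “added up”):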

- Identity level: Identity-based policy + permission boundaries = effective permissions

- Resource level: Resource-based policy + Identity-based policy + permission boundaries = effective permissions

- Organizations level: Organizations SCP + Identity-based policy + permission boundaries = effective permissions

- Temporary Session level: Session policy + Identity-based policy + permission boundaries = effective permissions

An example of using permission boundaries to allow access only to Amazon S3, Amazon CloudWatch, and Amazon EC2 (which can be applied to a specific IAM user):

Source: https://docs.aws.amazon.com/IAM/latest/UserGuide/access_policies_boundaries.html
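A minimal sketch of this boundary and of how it intersects with an identity-based policy. The boundary follows the pattern in the AWS documentation referenced above; the identity policy is a hypothetical one made up for the example. The evaluation helper is a toy model: it only looks at `Allow` statements and action names, and ignores explicit denies, resources, and conditions.

```python
from fnmatch import fnmatchcase

# Permissions boundary: the maximum the user may ever be allowed to do.
BOUNDARY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["s3:*", "cloudwatch:*", "ec2:*"], "Resource": "*"}
    ],
}

# Hypothetical identity-based policy attached to the same IAM user.
IDENTITY_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["iam:CreateUser", "s3:GetObject"], "Resource": "*"}
    ],
}


def allows(policy, action):
    """True if any Allow statement has an action pattern matching the action."""
    for statement in policy["Statement"]:
        if statement["Effect"] != "Allow":
            continue
        patterns = statement["Action"]
        if isinstance(patterns, str):
            patterns = [patterns]
        # IAM action names are case-insensitive, so compare in lower case.
        if any(fnmatchcase(action.lower(), p.lower()) for p in patterns):
            return True
    return False


def effectively_allowed(action):
    """An action is allowed only if both the policy and the boundary allow it."""
    return allows(IDENTITY_POLICY, action) and allows(BOUNDARY, action)


for action in ("s3:GetObject", "iam:CreateUser", "ec2:RunInstances"):
    print(action, effectively_allowed(action))
```

In this toy model, `s3:GetObject` passes both checks, `iam:CreateUser` is blocked by the boundary, and `ec2:RunInstances` is blocked because the identity policy never granted it: the boundary limits permissions but does not grant any.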

Another example is to restrict an IAM user to specific actions and resources:

Source: https://docs.aws.amazon.com/IAM/latest/UserGuide/access_policies_boundaries.html

Like SCPs, RCPs, and Declarative policies, permission boundaries have their limitations. A permission boundary has a maximum size of 6144 characters (including spaces), and you can have up to 10 managed policies and 1 permissions boundary attached to an IAM role.

Additional references:

- https://docs.aws.amazon.com/IAM/latest/UserGuide/access_policies_boundaries.html

- https://aws.amazon.com/blogs/security/when-and-where-to-use-iam-permissions-boundaries/

- https://github.com/aws-samples/example-permissions-boundary

Summary

In this blog post, I have reviewed the various alternatives that AWS offers its customers to configure guardrails for accessing resources within AWS Organizations at a large scale.

Each alternative serves a slightly different purpose, as summarized below:

I encourage you to read AWS documentation, explaining the logic for evaluating requests to access resources:

I also highly recommend you watch the lecture “Security invariants: From enterprise chaos to cloud order” from AWS re:Invent 2024:

My 2025 Wishlist for Public Cloud Providers

Anyone who has been following me on social media knows that I am a huge advocate of the public cloud.

By now, we are just after the biggest cloud conferences — Microsoft Ignite 2024 and AWS re:Invent 2024, and just before the end of 2024.

As we are heading to 2025, I thought it would be interesting to share my wishes from the public cloud providers in the coming year.

Resiliency and Availability

The public cloud has existed for more than a decade, and at least according to the CSPs’ documentation, it is designed to survive major or global outages impacting customers all over the world.

And yet, in 2024 each of the CSPs suffered from major outages. To name a few:

- Summary of the Amazon Kinesis Data Streams Service Event in Northern Virginia (US-EAST-1) Region

- Azure Incident Retrospective: Storage issues in Central US

- Incident affecting Cloud Firestore, Google App Engine, Google Cloud Functions

In most cases, the root cause of outages is unverified code/configuration changes, or a lack of resources due to a spike in, or unexpected use of, specific resources.

The result always impacts customers in a specific region, or worse in multiple regions.

Although CSPs implement different regions and AZs to limit the blast radius and decrease the chance of major customer impact, in many cases we realize that critical services have their control plane (the central management system that provides orchestration, configuration, and monitoring capabilities) deployed in a central region (usually in East US data centers), and the blast radius impacts customers all over the world.

My wish for 2025 from CSPs — improve the level of testing, and observability, for any code or configuration change (whether done by engineers, or by automated systems).

For the long term, CSPs should find a way to design the service control plane to be synced and spread across multiple regions (at least one copy in each continent), to limit the blast radius of global outages.

Secure by Default

Reading the announcements of new services, and the service official documentation, we can learn the CSPs understand the importance of “secure by default”, i.e., enabling a service or capability, where security configuration was designed from day 1.

And yet, in 2024 each of the CSPs suffered from security incidents resulting from a misconfiguration. To name a few:

- AWS Security Bulletin AWS-2024–003

- Microsoft Power Pages: Data Exposure Reviewed

- Exploring Google Cloud Default Service Accounts: Deep Dive and Real-World Adoption Trends

It is always best practice to read the vendor’s documentation, and understand the default settings or behavior of every service or capability we are enabling, however, following the shared responsibility model, as customers, we expect the CSPs to design everything secured by default.

I understand that some CSPs’ product groups have an agenda for releasing new services to the market as quickly as possible, allowing customers to experience and adopt new capabilities, but security must be job zero.

My wish for 2025 from CSPs is to put security higher in your priorities — this is relevant for both the product groups and the development teams of each product.

Invest in threat modeling, from the design phase until each service/capability is deployed to production, and try to anticipate what could go wrong.

Choose secure/closed by default (and provide documentation to allow customers to choose if they wish to change the default settings), instead of keeping services exposed, which forces customers to make changes after the fact (after their data was already exposed to unauthorized parties).

Service Retirements

I understand that from time to time a product group, or even the business of a CSP reviews the list of currently available services and decides to retire a service, leaving their customers with no alternative or migration path.

In 2024 we saw several publications of service retirements. To name a few:

- AWS to discontinue Cloud9, CodeCommit, CloudSearch, and several other services

- Azure Media Services retirement guide

- Google Cloud Platform (GCP) has announced the end-of-sale for Cloud Source Repositories

The leader of service retirement/deprecation is GCP, followed by Azure.

In some cases, customers receive (short) notice, asking them to migrate their data and find an alternate solution, but from a customer point of view, it does not look good (to be politically correct) that services we have been using for a while have now stopped working and we need to find alternate solutions for production environments.

Although the way AWS handled decommissioning services such as Cloud9 and CodeCommit was far from ideal, their approach is different from the rest of the cloud providers, with their working backwards development methodology.

My wish for 2025 from CSPs is to put customers first and do market research beforehand. Check with your customers what capabilities they are looking for, before beginning the development of a new service.

Even if the market changes over time, remember that you have production customers using your services. Prepare alternatives in advance and a documented migration path to those alternatives. Do everything you can to support services for a very long time, and if there is no other alternative, keep supporting your services, even with no new capabilities, but at least your customers will know that in case of production issues, or discovered security vulnerabilities, they will have support and an SLA.

Cost and Economies of Scale

When organizations began migrating their on-prem workloads to the public cloud, the notion was that due to economies of scale, the CSPs would be able to offer their customers cost-effective alternatives for consuming services and infrastructure, compared to the traditional data centers.

Many customers got the equation wrong, trying to compare the cost of hardware (such as VMs and storage) between their data center, and the public cloud alternative, without adding to the equation the cost of maintenance, licensing, manpower, etc., and the result was a higher cost for “lift & shift” migrations in the public cloud. In the long run, after a decade of organizations working with the public cloud, the alternative of re-architecture provides much better and cost-effective results.

Although we have not seen documented publications of CSPs announcing an increase in service costs, there are cases that from a customer’s point of view simply do not make sense.

A good example is egress data cost. If all CSPs do not charge customers for ingress data costs, there is no reason to charge for egress data costs. It is the same hardware, so I really cannot understand the logic in high (or any) charges of egress data. Customers should have the option to pull data from their cloud accounts (sometimes to keep data on-prem in hybrid environments, and sometimes to allow migration to other CSPs), without being charged.

The same rule applies to inter-zone traffic charges (see AWS and GCP documentation), or to enabling private traffic inside the CSPs’ backbone (see AWS, Azure, and GCP documentation).

My wish for 2025 from CSPs is to put customers first. CSPs are already encouraging customers to build highly-available infrastructure spanned across multiple AZs, and encouraging customers to keep the services that support customers’ data private (and not exposed to the public Internet). Although the public cloud is a business that wishes to gain revenue, CSPs should think about their customers, and offer them more capabilities, but at lower prices, to make the public cloud the better and cost-effective alternative to the traditional data centers.

Vendor Lock-In

This was a challenge from the initial days of the public cloud. Each CSP offered its alternative and list of services, with different capabilities, and naturally different APIs.

From an architectural point of view, customers should first understand the business demands, before choosing a technology (or specific services from a specific CSP).

Each CSP offers its services, and it does not mean it has to be a negative thing. If in doubt, I highly recommend you watch the lecture “Do modern cloud applications lock you in?” by Gregor Hohpe, from AWS re:Invent 2023.

In the past, there was the notion that packaging our applications inside containers and perhaps using Kubernetes (in its various managed alternatives), would enable customers to switch between cloud providers or deploy highly-available workloads on top of multiple CSPs. This notion was found to be false since containers do not live in a vacuum, and customers do not pack their entire application inside a single container/microservice. Cloud-native applications are deployed inside a cloud eco-system, and consume data from other services such as storage, networking, databases, message queuing, etc., so trying to migrate between CSPs will still require a lot of effort connecting to different sets of APIs.

My wish for 2025 from CSPs, and I know it is a lot to ask, but could you invest in standardization of your APIs?

Instead of customers having to add abstraction layers on top of cloud services, forcing them to choose the lowest common denominator, why not offer the same APIs, and hopefully the same (or mostly the same) capabilities?

If we look at Kubernetes and its Container Storage Interface (CSI) as two examples, they allow customers to consume container orchestration and backend storage using similar APIs, and they are both supported by the CNCF, which allows customers an easy alternative to deploy and maintain cloud resources, even on top of different CSPs.

Summary

There are a lot more things I wish Santa Claus could bring me in 2025, but as it relates to the public cloud, I truly wish the product groups at each of the CSPs could read this blog post and begin making the required changes to give customers a better experience in all the areas I have mentioned.

For the readers in the audience, feel free to contact me on social media, and share with me your thoughts about this blog post.

About the author

Eyal Estrin is a cloud and information security architect, an AWS Community Builder, and the author of the books Cloud Security Handbook and Security for Cloud Native Applications, with more than 20 years in the IT industry.

You can connect with him on social media (https://linktr.ee/eyalestrin).

Opinions are his own and not the views of his employer.

Qualities of a Good Cloud Architect

In 2020 I published a blog post called “What makes a good cloud architect?”, where I tried to lay out some of the main qualities required to become a good cloud architect.

Four years later, I still believe most of the qualities are crucial, but I would like to focus on what I believe today is critical to succeed as a cloud architect.

Be able to see the bigger picture

A good cloud architect must be able to see the bigger picture.

Before digging into details, an architect must be able to understand what the business is trying to achieve (whether a chatbot, an e-commerce mobile app, a reporting system for business analytics, etc.).

Next, it is important to understand technological constraints (such as service cost, resiliency, data residency, service/quota limits, etc.).

A good architect will be able to translate business requirements, together with technology constraints, into architecture.

Being multi-lingual

An architect should be able to speak multiple languages: speak to business decision-makers, understand their goals, and be able to translate them for technical teams (such as developers, DevOps, IT, engineers, etc.).

The business never comes with a requirement such as “We would like to expose an API to end-customers”. They will probably say “We would like to provide customers valuable information about investments” or “Provide patients with insights about their health”.

Being multi-disciplinary

There is always the debate between someone who is a specialist in a certain area (whether an expert in a specific cloud provider’s ecosystem or an expert in a specific technology such as Containers or Serverless) and someone who is a generalist (having broad knowledge about cloud technology from multiple cloud providers).

I am always in favor of being a generalist, having hands-on experience working with multiple services from multiple cloud providers, knowing the pros and cons of each service, making it easier to later decide on the technology and services to implement as part of an architecture.

Being able to understand modern technologies

The days of architectures based on VMs are almost gone.

A good cloud architect will be able to understand what an application is trying to achieve, and be able to embed modern technologies:

- Microservice architecture, to split a complex workload into small pieces, developed and owned by different teams

- Containerization solutions, from managed Kubernetes services to simpler alternatives such as Amazon ECS, Azure Container Apps, or Google Cloud Run

- Function-as-a-Service, being able to process specific tasks such as image processing, handling user registration, error handling, and much more.

Note: Although FaaS is considered vendor-opinionated, and there is no clear process to migrate between cloud providers, once a specific CSP has been chosen, a good architect should be able to find the benefits of using FaaS as part of an application architecture.

- Event-driven architecture, which has many benefits in modern applications: decoupling complex architectures, allowing different components to operate independently, scaling specific components (according to customers’ demand) without impacting other components of the application, and more.
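The decoupling that event-driven architecture provides can be sketched with a tiny in-process publish/subscribe bus in Python. This is only an illustration under simplifying assumptions: the EventBus class and the "user.registered" event name are hypothetical, and a real system would use a managed messaging or eventing service rather than in-memory lists.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Hypothetical in-memory bus: publishers never reference subscribers."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        # Deliver the event to every subscriber; return how many received it.
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


bus = EventBus()
emails_sent: list[str] = []
audit_log: list[str] = []

# Two independent components react to the same event.
bus.subscribe("user.registered", lambda e: emails_sent.append(e["email"]))
bus.subscribe("user.registered", lambda e: audit_log.append(f"registered:{e['email']}"))

delivered = bus.publish("user.registered", {"email": "a@example.com"})
```

The registration code only publishes an event. Adding or removing a subscriber (for example, the audit component) requires no change to the publisher, which is the property that lets teams evolve and scale components separately.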

Microservices, Containers, or FaaS do not have to be the answer for every architecture, but a good cloud architect will be able to find the right tools to achieve the business goals, sometimes by combining different technologies.

We must remember that technology and architecture change and evolve. A good cloud architect should reassess past architecture decisions, to see if, over time, a different architecture can provide better results (in terms of cost, security, resiliency, etc.).

Understanding cloud vs. on-prem

As much as I admire organizations that can design, build, and deploy production-scale applications in the public cloud, I admit the public cloud is not a solution for 100% of the use cases.

A good cloud architect will be able to understand the business goals, together with technological constraints (such as cost, resiliency requirements, regulations, team knowledge, etc.), and be able to understand which workloads can be developed as cloud-native applications, and which workloads can remain, or even be developed from scratch, on-prem.

I do believe that to gain the full benefits of modern technologies (such as elasticity, virtually infinite scale, use of GenAI technology, etc.) an organization should select the public cloud, but for simple or stable workloads, an organization can find suitable solutions on-prem as well.

Thoughts of Experienced Architects

Make unbiased decisions

“A good architecture allows major decisions to be deferred (to a time when you have more information). A good architecture maximizes the number of decisions that are not made. A good architecture makes the choice of tools (database, frameworks, etc.) irrelevant.”

Source: Allen Holub

Beware the Assumptions

“Unconscious decisions often come in the form of assumptions. Assumptions are risky because they lead to non-requirements, those requirements that exist but were not documented anywhere. Tacit assumptions and unconscious decisions both lead to missed expectations or surprises down the road.”

Source: Gregor Hohpe

Cloud building blocks – putting things together

“A cloud architect is a system architect responsible for putting together all the building blocks of a system to make an operating application. This includes understanding networking, network protocols, server management, security, scaling, deployment pipelines, and secrets management. They must understand what it takes to keep systems operational.”

Source: Lee Atchison

Being a generalist

“Good generalists need to cast a wider net to define the best-optimized technologies and configurations for the desired business solution. This means understanding the capabilities of all cloud services and the trade-offs of deploying a heterogeneous cloud solution.”

Source: David Linthicum

The importance of cost considerations

“By considering cost implications early and continuously, systems can be designed to balance features, time-to-market, and efficiency. Development can focus on maintaining lean and efficient code. And operations can fine-tune resource usage and spending to maximize profitability.”

Source: Dr. Werner Vogels

Summary

There are many more qualities of a good and successful cloud architect (from understanding cost decisions, cybersecurity threats and mitigations, designing for scalability, high availability, resiliency, and more), but in this blog post, I have tried to mention the qualities that in 2024 I believe are the most important ones.

Whether you just entered the role of a cloud architect, or if you are an experienced cloud architect, I recommend you keep learning, gain hands-on experience with cloud services and the latest technologies, and share your knowledge with your colleagues, for the benefit of the entire industry.

Unpopular opinion about “Moving back to on-prem”

Over the past year, I have seen a lot of posts on social media about organizations moving back from the public cloud to on-prem.

In this blog post, I will explain why I believe it is nothing more than a myth, and why the public cloud is the future.

Introduction

Anyone who follows my posts on social media knows that I am a huge advocate of the public cloud.

Back in 2023, I published a blog post called “How to Avoid Cloud Repatriation”, where I explained why I believe that organizations rushed to the public cloud without having a clear strategy that would guide them on which workloads are suitable to run in the public cloud, on investing in cost management and employee training, etc.

I am aware of the Barclays report from mid-2024 claiming that, according to conversations they had with CIOs, 83% of the surveyed organizations plan to move workloads back to private cloud, while another report from Synergy Research Group (published in August 2024) claims that “hyper-scale operators are 41% of the worldwide capacity of all data centers”, and “Looking ahead to 2029, hyper-scale operators will account for over 60% of all capacity, while on-premises will drop to just 20%”.

Analysts claim there is a trend of organizations moving back to on-prem, but the newspapers are far from being filled with stories of customers (specifically enterprises) who moved their production workloads from the public cloud to on-prem.

You may be able to find some stories about small companies (with stable workloads and highly skilled personnel), who decided to move back to on-prem, but it is far from being a trend.

I do not disagree that large workloads in the public cloud will cost an organization a lot of money, but it raises a question:

Has the organization embedded cost as part of any architecture decision from day 1, or has the organization ignored cost for a long time and realized now that the usage of cloud resources costs a lot of money if not managed properly?

Why do I believe the future is in the public cloud?

I am not looking at the public cloud as a solution for all IT questions/issues.

As with any (kind of) new field, an organization must invest in learning the topic from the bottom up, consult with experts, create a cloud strategy, and invest in cost, security, sustainability, and employee training, to be able to get the full benefits of the public cloud.

Let us dig deeper into some of the main areas where we see benefits of the public cloud:

Scalability

One of the huge benefits of the public cloud is the ability to scale horizontally (i.e., add or remove compute, storage, or network resources according to customer demand).

Were you able to scale horizontally using traditional virtualization on-prem? Yes.

Did you have the capacity to scale virtually without limit? No. Organizations are always limited by the amount of hardware they purchase and deploy in their on-prem data center.
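The difference can be sketched with a few lines of Python. This is a simplified illustration, not a real autoscaling policy: the instances_needed function, the requests-per-second figures, and the capacity_limit parameter (standing in for the hardware already purchased on-prem) are all hypothetical.

```python
import math
from typing import Optional


def instances_needed(demand_rps: float, rps_per_instance: float,
                     capacity_limit: Optional[int] = None) -> int:
    """Return how many instances can serve the demand.

    capacity_limit models a fixed pool of purchased hardware; None models
    an environment where more capacity can be requested on demand.
    """
    # Round up so demand is fully covered, and always keep at least one instance.
    needed = max(1, math.ceil(demand_rps / rps_per_instance))
    if capacity_limit is not None:
        # Scaling stops at whatever hardware is physically available.
        return min(needed, capacity_limit)
    return needed


# Hypothetical peak: 950 requests/second, 100 requests/second per instance.
elastic = instances_needed(950, 100)
fixed_pool = instances_needed(950, 100, capacity_limit=6)
```

With these made-up numbers, the elastic environment scales to 10 instances, while the fixed pool stops at 6 and leaves part of the demand unserved until more hardware is purchased and deployed.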

Data center management

Regardless of what people may believe, most organizations do not have the experience needed to build and maintain data centers that are physically secure, sustainable in terms of energy use, and highly available at the level CSPs provide.

Data centers do not produce any business value (unless you are in the data center or hosting industry), and in most cases, moving the responsibility to a cloud provider will be more beneficial for most organizations.

Hardware maintenance

Let us assume your organization decided to purchase expensive hardware for its SAP HANA cluster, or an NVIDIA cluster with the latest GPUs for AI/ML workloads.

In this scenario, your organization will need to pay in advance for several years and train your IT staff on deployment and maintenance of the purchased hardware (do not forget the cooling of GPUs…). The moment you complete deploying the new hardware, your organization is in charge of the ongoing maintenance, until the hardware becomes outdated (probably a couple of weeks or months after you purchased it), and now you are stuck with old hardware that will not be able to suit your business needs (such as running the latest GenAI LLMs).

In the public cloud, you scale as needed and pay only for the resources being used (unless you decide to go for Spot or Savings Plans to lower the total cost).

Using or experimenting with new technology

In the traditional data center, we are stuck with a static data center mentality, i.e., use what you currently have.

One of the greatest capabilities the public cloud offers us is switching to a dynamic mindset. Business managers would like their organizations to provide new services to their customers, in a short time-to-market.

A new mindset encourages experimentation, allowing development teams to build new products, experiment with them, and if the experiment fails, switch to something else.

One example of experimentation is the recent spike in the use of GenAI technology. Suddenly everyone is using (or planning to use) LLMs to build solutions (from chatbots, through text summarization, to image or video generation).

Only the public cloud will allow organizations to experiment with the latest hardware and the latest LLMs for building GenAI applications.

If you try to experiment with GenAI on-prem, you will have to purchase dedicated hardware (which will soon become outdated and will not remain sufficient for your business needs for long), and you will suffer from resource limitations (at least when using the latest LLMs).

Storage capacity

In the traditional data center, organizations (almost) always suffer from limited storage capacity.

The more data organizations collect (for business analytics, providing customers added value, research, AI/ML, etc.), the more data will be produced and need to be stored.