Archive for the ‘Oracle’ Category

Building Resilient Applications in the Cloud

When building an application that serves customers, one question that often comes up is: how do I know if my application is resilient and will survive a failure?

In this blog post, we will review what it means to build resilient applications in the cloud, and we will review some of the common best practices for achieving resilient applications.

What do we mean by resilient applications?

AWS provides us with the following definition for the term resiliency:

“The ability of a workload to recover from infrastructure or service disruptions, dynamically acquire computing resources to meet demand, and mitigate disruptions, such as misconfigurations or transient network issues.”

Resiliency is part of the Reliability pillar for cloud providers such as AWS, Azure, GCP, and Oracle Cloud.

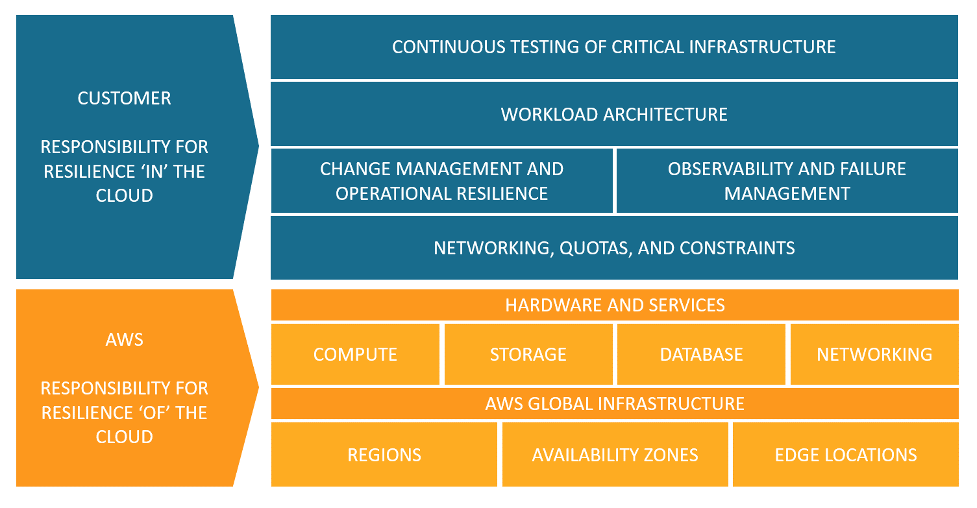

AWS takes it one step further, and shows how resiliency is part of the shared responsibility model:

- The cloud provider is responsible for the resilience of the cloud (i.e., hardware, software, computing, storage, networking, and anything related to their data centers)

- The customer is responsible for the resilience in the cloud (i.e., selecting the services to use, building resilient architectures, backup strategies, data replication, and more).

How do we build resilient applications?

This blog post assumes that you are building modern applications in the public cloud.

We have all heard of RTO (Recovery Time Objective).

A resilient workload (a combination of the application, its data, and the infrastructure that supports it) should not only recover automatically, but must also recover within a pre-defined RTO agreed upon with the business owner.
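As a rough illustration of the RTO idea, the sketch below compares a measured recovery time against an agreed objective. The function name `meets_rto` and the 15-minute objective are assumptions made for this example, not values taken from any cloud provider.

```python
from datetime import datetime, timedelta


def meets_rto(failure_detected: datetime, service_restored: datetime, rto: timedelta) -> bool:
    """Return True when the workload recovered within the agreed RTO."""
    if service_restored < failure_detected:
        raise ValueError("restore time cannot precede the failure time")
    return (service_restored - failure_detected) <= rto


# Hypothetical incident data: a 15-minute RTO agreed with the business owner.
rto = timedelta(minutes=15)
start = datetime(2024, 1, 1, 10, 0)

print(meets_rto(start, start + timedelta(minutes=12), rto))  # recovered in time
print(meets_rto(start, start + timedelta(minutes=20), rto))  # missed the objective
```

Tracking this value for every incident (and every recovery drill) shows whether the architecture actually delivers what was promised to the business.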

Below are common best practices for building resilient applications:

Design for high-availability

The public cloud allows you to easily deploy infrastructure over multiple availability zones.

Examples of implementing high availability in the cloud:

- Deploying multiple VMs behind an auto-scaling group and a front-end load-balancer

- Spreading container load over multiple Kubernetes worker nodes, deploying in multiple AZs

- Deploying a cluster of database instances in multiple AZs

- Deploying global (or multi-regional) database services (such as Amazon Aurora Global Database, Azure Cosmos DB, Google Cloud Spanner, and Oracle Global Data Services (GDS))

- Configuring DNS routing rules to send customers’ traffic to more than a single region

- Deploying a global load-balancer (such as AWS Global Accelerator, Azure Cross-region Load Balancer, or Google Global external Application Load Balancer) to spread customers’ traffic across regions
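To get a feel for why spreading a workload over several AZs helps, here is a back-of-the-envelope sketch. It assumes that zones fail independently of each other, which is a simplification, and the 99% per-zone figure is a made-up number for illustration only.

```python
def combined_availability(per_zone: float, zones: int) -> float:
    """Availability of a workload that stays up while at least one zone is up.

    Assumes zone failures are independent, which real outages do not always respect.
    """
    if not 0.0 <= per_zone <= 1.0:
        raise ValueError("per_zone must be between 0 and 1")
    if zones < 1:
        raise ValueError("zones must be at least 1")
    return 1.0 - (1.0 - per_zone) ** zones


# Hypothetical per-zone availability of 99%.
for n in (1, 2, 3):
    print(n, f"{combined_availability(0.99, n):.6f}")
```

Each additional zone multiplies the probability of a total outage by the per-zone failure probability, which is why the gain from the second zone is much larger than the gain from the third.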

Implement autoscaling

Autoscaling is one of the biggest advantages of the public cloud.

Assuming we built a stateless application, we can add or remove compute nodes using autoscaling, and match capacity to the actual load on our application.

In a cloud-native infrastructure, we use a managed load-balancer service to receive traffic from customers, while scaling policies driven by load metrics tell an autoscaling group to add or remove compute nodes.
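The scaling decision itself can be sketched in a few lines. The proportional rule below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler; managed autoscaling groups apply their own policies, so treat this as an illustration only. The function name and the min/max bounds are assumptions.

```python
import math


def desired_nodes(current_nodes: int, current_metric: float, target_metric: float,
                  min_nodes: int = 1, max_nodes: int = 10) -> int:
    """Scale the node count in proportion to how far the metric is from its target."""
    if current_nodes < 1 or target_metric <= 0:
        raise ValueError("current_nodes must be >= 1 and target_metric must be > 0")
    wanted = math.ceil(current_nodes * current_metric / target_metric)
    # Clamp to the configured bounds so a metric spike cannot scale without limit.
    return max(min_nodes, min(max_nodes, wanted))


# Average CPU at 90% with a 60% target: scale out from 4 to 6 nodes.
print(desired_nodes(4, current_metric=90.0, target_metric=60.0))
# Average CPU at 30% with a 60% target: scale in from 4 to 2 nodes.
print(desired_nodes(4, current_metric=30.0, target_metric=60.0))
```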

Implement microservice architecture

Microservice architecture is meant to break a complex application into smaller parts, each responsible for certain functionality of the application.

By implementing microservice architecture, we are decreasing the impact of failed components on the rest of the application.

In case of high load on a specific component, it is possible to add more compute resources to the specific component, and in case we discover a bug in one of the microservices, we can roll back to a previous functioning version of the specific microservice, with minimal impact on the rest of the application.

Implement event-driven architecture

Event-driven architecture allows us to decouple our application components.

Event-driven architecture improves resiliency because even if one component fails, the rest of the application continues to function.

Components are loosely coupled by using events that trigger actions.

Event-driven architectures are usually (but not always) based on services managed by cloud providers, who are responsible for the scale and maintenance of the managed infrastructure.

Event-driven architectures are based on messaging models such as queues and pub/sub (services such as Amazon SQS, Azure Web PubSub, Google Cloud Pub/Sub, and OCI Queue service) or on event delivery (services such as Amazon EventBridge, Azure Event Grid, Google Eventarc, and OCI Events service).
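The decoupling idea can be shown with a tiny in-process event bus. This is a sketch only: the `EventBus` class and the handler names are hypothetical, and a real system would rely on one of the managed services above rather than on in-memory dispatch.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class EventBus:
    """Minimal pub/sub: a failing subscriber does not stop the others."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)
        self.failures: List[str] = []

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver the event to every subscriber and return the number of successes."""
        delivered = 0
        for handler in self._subscribers[topic]:
            try:
                handler(event)
                delivered += 1
            except Exception as exc:  # isolate the failure, keep delivering
                self.failures.append(f"{handler.__name__}: {exc}")
        return delivered


received = []


def send_receipt(event: dict) -> None:
    received.append(event["order_id"])


def update_inventory(event: dict) -> None:
    raise RuntimeError("inventory service unavailable")


bus = EventBus()
bus.subscribe("order.created", update_inventory)
bus.subscribe("order.created", send_receipt)
print(bus.publish("order.created", {"order_id": 42}), bus.failures)
```

The publisher never calls the inventory component directly, so its failure is recorded and the receipt is still sent.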

Implement API Gateways

If your application exposes APIs, use API Gateways (services such as Amazon API Gateway, Azure API Management, Google Apigee, or OCI API Gateway) to allow incoming traffic to your backend APIs, perform throttling to protect the APIs from spikes in traffic, and perform authorization on incoming requests from customers.
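Throttling is commonly explained with the token bucket algorithm. The sketch below is a simplified, single-process version with hypothetical names; managed API gateways expose throttling as configuration, so this only shows the idea behind rate and burst limits. Time is passed in explicitly to keep the example deterministic.

```python
class TokenBucket:
    """Allow short bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: int) -> None:
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)
        self.last = 0.0

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` (in seconds) may pass."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


bucket = TokenBucket(rate_per_sec=1.0, capacity=2)
# Three requests at t=0 (the third exceeds the burst), then one more a second later.
print([bucket.allow(t) for t in (0.0, 0.0, 0.0, 1.0)])
```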

Implement immutable infrastructure

Immutable infrastructure (such as VMs or containers) is meant to run application components without storing session information inside the compute nodes.

If a component fails, it is easy to replace it with a new one with minimal disruption to the entire application, which allows fast recovery.

Data Management

Find the most suitable data store for your workload.

A microservice architecture allows you to select different data stores (from object storage to backend databases) for each microservice, decreasing the risk of complete failure due to availability issues in one of the backend data stores.

Once you select a data store, replicate it across multiple AZs, and if the business requires it, replicate it across multiple regions, to allow better availability, closer to the customers.

Implement observability

By monitoring all workload components, and sending logs from both infrastructure and application components to a central logging system, it is possible to identify anomalies, anticipate failures before they impact customers, and act.

Examples of actions can be sending a command to restart a VM, deploying a new container instead of a failed one, and more.

It is important to keep track of measurements — for example, what is considered normal response time to a customer request, to be able to detect anomalies.
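One simple way to turn a baseline into an alert is to flag samples that fall far from the historical mean. The sketch below uses a z-score with a threshold of three standard deviations; both the threshold and the sample response times are assumptions for illustration, and managed monitoring services use more sophisticated models.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(baseline_ms: Sequence[float], sample_ms: float, threshold: float = 3.0) -> bool:
    """Flag a response time that is more than `threshold` standard deviations from the mean."""
    if len(baseline_ms) < 2:
        raise ValueError("need at least two baseline measurements")
    mu = mean(baseline_ms)
    sigma = stdev(baseline_ms)
    if sigma == 0:
        return sample_ms != mu
    return abs(sample_ms - mu) / sigma > threshold


# Hypothetical response times (milliseconds) collected during normal operation.
baseline = [120, 118, 125, 122, 119, 121, 123, 120]
print(is_anomalous(baseline, 124))  # within the normal range
print(is_anomalous(baseline, 480))  # far outside the normal range
```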

Implement chaos engineering

The base assumption is that everything will eventually fail.

Implementing chaos engineering allows us to conduct controlled experiments and inject faults into our workloads, testing what will happen in case of failure.

This allows us to better understand if our workload will survive a failure.

Examples can be adding load on disk volumes, injecting timeout when an application tier connects to a backend database, and more.

Examples of services for implementing chaos engineering are AWS Fault Injection Simulator, Azure Chaos Studio, and Gremlin.
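Before reaching for a managed service, the idea can be tried at the application level. The sketch below wraps a stand-in database call so that it randomly raises a timeout, then checks how a simple retry policy copes. All names (`with_fault_injection`, `query_database`, `InjectedTimeout`) are hypothetical and not part of any chaos engineering product.

```python
import random
from typing import Callable


class InjectedTimeout(Exception):
    """Raised by the experiment instead of a real network timeout."""


def with_fault_injection(func: Callable, failure_rate: float, rng: random.Random) -> Callable:
    """Wrap `func` so that a fraction of calls fail with an injected timeout."""
    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedTimeout("injected fault: database timeout")
        return func(*args, **kwargs)
    return wrapper


def call_with_retries(func: Callable, attempts: int = 3):
    """Retry the call a fixed number of times before giving up."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return func()
        except InjectedTimeout as exc:
            last_error = exc
    raise last_error


def query_database() -> str:
    return "rows"  # stand-in for a real backend call


rng = random.Random(7)  # fixed seed so the experiment is repeatable
flaky_query = with_fault_injection(query_database, failure_rate=0.5, rng=rng)

successes = 0
for _ in range(100):
    try:
        call_with_retries(flaky_query)
        successes += 1
    except InjectedTimeout:
        pass
print(f"{successes}/100 requests survived injected timeouts")
```

Running the experiment with different failure rates and retry counts shows how much fault tolerance the retry policy really buys.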

Create a failover plan

In an ideal world, your workload will be designed for self-healing, meaning, it will automatically detect a failure and recover from it, for example, replace failed components, restart services, or switch to another AZ or even another region.
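The "detect and switch" part of self-healing can be reduced to a very small sketch: walk a priority-ordered list of endpoints and pick the first one that passes a health check. The endpoint names and the dictionary that plays the role of the health check are assumptions for illustration.

```python
from typing import Callable, Optional, Sequence


def pick_healthy(endpoints: Sequence[str], is_healthy: Callable[[str], bool]) -> Optional[str]:
    """Return the first healthy endpoint in priority order, or None if all are down."""
    for endpoint in endpoints:
        if is_healthy(endpoint):
            return endpoint
    return None


# Hypothetical health status: the primary AZ is down, the standbys are up.
status = {"primary-az1": False, "standby-az2": True, "standby-region2": True}
print(pick_healthy(list(status), status.get))
```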

In practice, you need to prepare a failover plan, keep it up to date, and make sure your team is trained to act in case of major failure.

A disaster recovery plan without proper and regular testing is worth nothing — your team must practice repeatedly, and adjust the plan, and hopefully, they will be able to execute the plan during an emergency with minimal impact on customers.

Resilient applications tradeoffs

Failure can happen in various ways, and when we design our workload, we need to limit the blast radius on our workload.

Below are some common failure scenarios, and possible solutions:

- Failure in a specific component of the application: By designing a microservice architecture, we can limit the impact of a failed component to a specific area of our application (depending on how critical the component is to the application as a whole)

- Failure of a single AZ: By deploying infrastructure over multiple AZs, we can decrease the chance of application failure and impact on our customers

- Failure of an entire region: Although this scenario is rare, cloud regions also fail, and by designing a multi-region architecture, we can decrease the impact on our customers

- DDoS attack: By implementing DDoS protection mechanisms, we can reduce the risk of a DDoS attack impacting our application

Whatever solution we design for our workloads, we need to understand that there is a cost and there might be tradeoffs for the solution we design.

Multi-region architecture aspects

A multi-region architecture offers the most highly available and resilient solution for your workloads; however, it adds high cost for cross-region egress traffic, most services are limited to a single region, and your staff needs to know how to support such a complex architecture.

Another limitation of multi-region architecture is data residency — if your business or regulator demands that customers’ data be stored in a specific region, a multi-region architecture is not an option.

Service quota/service limits

When designing a highly resilient architecture, we must take into consideration service quotas or service limits.

Sometimes we are bound to a service quota on a specific AZ or region, an issue that we may need to resolve with the cloud provider’s support team.

Sometimes we need to understand there is a service limit in a specific region, such as a specific service that is not available in a specific region, or there is a shortage of hardware in a specific region.

Autoscaling considerations

Horizontal autoscaling (the ability to add or remove compute nodes) is one of the fundamental capabilities of the cloud; however, it has its limitations.

A new compute node (a VM, a container instance, or even a database instance) may take a couple of minutes to spin up (which may impact customer experience) or to spin down (which may impact service cost).

Also, to support horizontal scaling, you need to make sure the compute nodes are stateless, and that the application supports such capability.

Failover considerations

One of the limitations of databases is how quickly and reliably they can switch from the primary node to one of the secondary nodes, either in case of failure or in case of scheduled maintenance.

We need to take data replication into consideration, making sure transactions were saved and replicated from the primary node to the read replica node before it takes over.
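A failover runbook often includes a replication check before a replica is promoted. The sketch below expresses that check with generic sequence numbers; the function and parameter names are hypothetical, and every database engine exposes replication progress in its own way.

```python
def safe_to_promote(primary_commit_seq: int, replica_applied_seq: int, max_lag: int = 0) -> bool:
    """Return True when the replica has applied all but `max_lag` committed transactions."""
    if replica_applied_seq > primary_commit_seq:
        raise ValueError("replica cannot be ahead of the primary")
    return (primary_commit_seq - replica_applied_seq) <= max_lag


# Hypothetical sequence numbers taken just before a planned failover.
print(safe_to_promote(primary_commit_seq=1050, replica_applied_seq=1050))  # fully caught up
print(safe_to_promote(primary_commit_seq=1050, replica_applied_seq=1010))  # 40 transactions behind
```

Setting `max_lag` above zero is a business decision: it trades a faster failover for the possible loss of the transactions that were not yet replicated.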

Summary

In this blog post, we have covered many aspects of building resilient applications in the cloud.

When designing new applications, we need to understand the business expectations (in terms of application availability and customer impact).

We also need to understand the various architectural design considerations, and their tradeoffs, to be able to match the technology to the business requirements.

As I always recommend — do not stay on the theoretical side of the equation, begin designing and building modern and highly resilient applications to serve your customers — There is no replacement for actual hands-on experience.

References

- Understand resiliency patterns and trade-offs to architect efficiently in the cloud

- Building resilience to your business requirements with Azure

- Success through culture: why embracing failure encourages better software delivery

- Building Resilient Solutions in OCI

About the Author

Eyal Estrin is a cloud and information security architect, and the author of the book Cloud Security Handbook, with more than 20 years in the IT industry. You can connect with him on Twitter.

Opinions are his own and not the views of his employer.

The Future of Data Security Lies in the Cloud

We have recently read a lot of posts about the SolarWinds hack, a vulnerability in a popular monitoring software used by many organizations around the world.

This is a good example of a supply chain attack, which can happen to any organization.

We have seen similar scenarios over the past decade, from the Heartbleed bug, Meltdown and Spectre, Apache Struts, and more.

Organizations all around the world were affected by the SolarWinds hack, including the cybersecurity company FireEye, and Microsoft.

Events like these make organizations rethink their cybersecurity and data protection strategies and ask important questions.

Recent changes in the European data protection laws and regulations (such as Schrems II) are trying to limit data transfer between Europe and the US.

Should such security breaches occur? Absolutely not.

Should we live with the fact that such large organizations have been breached? Absolutely not!

Should organizations that have already invested a lot of resources in cloud migration move workloads back on-premises? I don’t think so.

But no organization, not even major financial organizations like banks or insurance companies, or even the largest multinational enterprises, has enough manpower, knowledge, and budget to invest in protecting its own data or its customers’ data to the degree that hyperscale cloud providers can.

There are several reasons for this:

- Hyperscale cloud providers invest billions of dollars improving security controls, including dedicated and highly trained personnel.

- A breach of customers’ data that resides at a hyperscale cloud provider can drive the provider out of business, due to the loss of customers’ trust.

- Security is important to most organizations; however, it is not their main line of expertise.

Organizations need to focus on the core business that brings them value, like manufacturing, banking, healthcare, education, etc., and rethink how to obtain services that support their business goals but do not add direct value, such as IT services.

Recommendations for managing security

Security Monitoring

Security best practices often state: “document everything”.

There are two downsides to this recommendation: One, storage capacity is limited and two, most organizations do not have enough trained manpower to review the logs and find the top incidents to handle.

Switching security monitoring to cloud-based managed systems such as Azure Sentinel or Amazon GuardDuty will assist in detecting important incidents, while the service handles the huge volume of logs internally.

Encryption

Another security best practice states: “encrypt everything”.

A few years ago, encryption was quite a challenge. Will the service/application support the encryption? Where do we store the encryption key? How do we manage key rotation?

In the past, only banks could afford HSM (Hardware Security Module) for storing encryption keys, due to the high cost.

Today, encryption is standard for most cloud services, backed by key management services such as AWS KMS, Azure Key Vault, Google Cloud KMS and Oracle Key Management.

Most cloud providers not only support encryption at rest, but also support customer-managed keys, which allow customers to generate their own encryption key for each service instead of using the cloud provider’s generated encryption key.

Security Compliance

Most organizations struggle to handle security compliance over large environments on premise, not to mention large IaaS environments.

This issue can be solved by using managed compliance services such as AWS Security Hub, Azure Security Center, Google Security Command Center or Oracle Cloud Access Security Broker (CASB).

DDoS Protection

Any organization exposing services to the Internet (from a publicly facing website, through email or DNS services, to a VPN service) will eventually suffer from a volumetric denial-of-service attack.

Only large ISPs have enough bandwidth to handle such an attack before the border gateway (firewall, external router, etc.) crashes or stops handling incoming traffic.

The hyperscale cloud providers have infrastructure that can handle DDoS attacks against their customers, through services such as AWS Shield, Azure DDoS Protection, Google Cloud Armor or Oracle Layer 7 DDoS Mitigation.

Using SaaS Applications

In the past, organizations had to maintain their entire infrastructure, from messaging systems, CRM, ERP, etc.

They had to think about scale, resilience, security, and more.

Most breaches of cloud environments originate from misconfigurations at the customers’ side on IaaS / PaaS services.

Today, the preferred way is to consume managed services in SaaS form.

These are a few examples: Microsoft Office 365, Google Workspace (Formerly Google G Suite), Salesforce Sales Cloud, Oracle ERP Cloud, SAP HANA, etc.

Limit the Blast Radius

To limit the “blast radius” where an outage or security breach on one service affects other services, we need to re-architect infrastructure.

Switching from applications deployed inside virtual servers to modern development such as microservices based on containers, or building new applications based on serverless (or function as a service), will help organizations limit the attack surface and possible future breaches.

Examples of these services: Amazon ECS, Amazon EKS, Azure Kubernetes Service, Google Kubernetes Engine, Google Anthos, Oracle Container Engine for Kubernetes, AWS Lambda, Azure Functions, Google Cloud Functions, Google Cloud Run, Oracle Cloud Functions, etc.

Summary

The bottom line: organizations can increase their security posture, by using the public cloud to better protect their data, use the expertise of cloud providers, and invest their time in their core business to maximize value.

Security breaches are inevitable. Shifting to cloud services does not shift an organization’s responsibility to secure their data. It simply does it better.

Confidential Computing and the Public Cloud

What exactly is “confidential computing” and what are the reasons and benefits for using it in the public cloud environment?

Introduction to data encryption

To protect data stored in the cloud, we usually use one of the following methods:

· Encryption in transit: Data transferred over the public Internet can be encrypted using the TLS protocol. This method prevents unwanted participants from entering the conversation.

· Encryption at rest: Data stored at rest, such as databases, object storage, etc., can be encrypted using symmetric encryption, which means using the same encryption key to encrypt and decrypt the data. This commonly uses the AES-256 algorithm.
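For the first method above, here is a small sketch of how a client can insist on TLS with certificate validation, using Python's standard `ssl` module. The helper name `strict_client_context` and the choice of TLS 1.2 as the minimum version are assumptions for this example.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Client-side TLS settings: verify the server certificate and refuse old protocol versions."""
    context = ssl.create_default_context()  # enables certificate and hostname verification
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


ctx = strict_client_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
```

The resulting context can be passed to standard library clients, for example as the `context` argument of `urllib.request.urlopen`.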

When we wish to access encrypted data, we need to decrypt the data in the computer’s memory to access, read and update the data.

This is where confidential computing comes in: it tries to protect data in use, the gap between data at rest and data in transit.

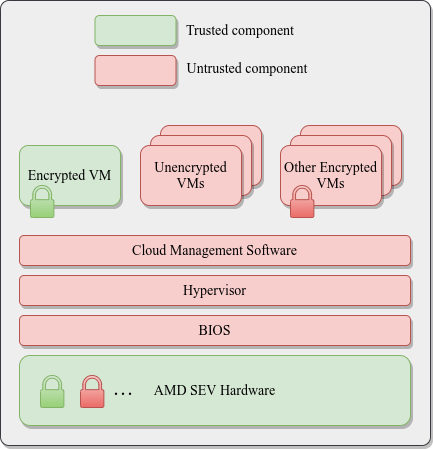

Confidential Computing uses hardware to isolate data. Data is encrypted in use by running it in a trusted execution environment (TEE).

As of November 2020, confidential computing is supported by Intel Software Guard Extensions (SGX) and AMD Secure Encrypted Virtualization (SEV), based on AMD EPYC processors.

Comparison of the available options

| | Intel SGX | Intel SGX2 | AMD SEV 1 | AMD SEV 2 |
| --- | --- | --- | --- | --- |
| Purpose | Microservices and small workloads | Machine Learning and AI | Cloud and IaaS workloads (above the hypervisor), suitable for legacy applications or large workloads | Cloud and IaaS workloads (above the hypervisor), suitable for legacy applications or large workloads |
| Cloud VM support (November 2020) | – | | | |
| Cloud containers support (November 2020) | – | – | | |
| Operating system supported | Windows, Linux | Linux | Linux | Linux |
| Memory limitation | Up to 128MB | Up to 1TB | Up to available RAM | Up to available RAM |
| Software changes | Require software rewrite | Require software rewrite | Not required | – |

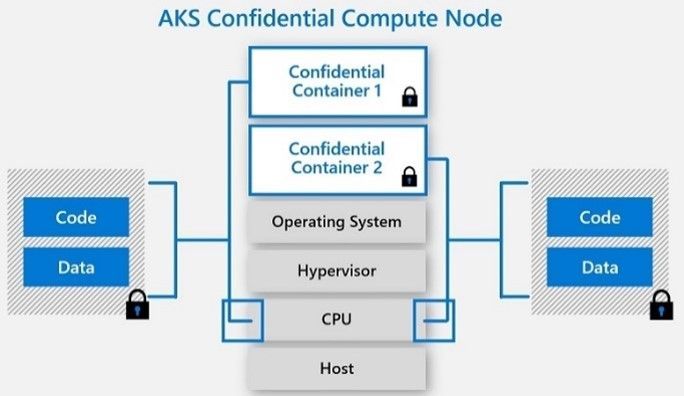

Reference Architecture

AMD SEV Architecture:

Azure Kubernetes Service (AKS) Confidential Computing:

References

· Confidential Computing: Hardware-Based Trusted Execution for Applications and Data

· Google Cloud Confidential VMs vs Azure Confidential Computing

https://msandbu.org/google-cloud-confidential-vms-vs-azure-confidential-computing/

· A Comparison Study of Intel SGX and AMD Memory Encryption Technology

https://caslab.csl.yale.edu/workshops/hasp2018/HASP18_a9-mofrad_slides.pdf

· SGX-hardware list

https://github.com/ayeks/SGX-hardware

· Performance Analysis of Scientific Computing Workloads on Trusted Execution Environments

https://arxiv.org/pdf/2010.13216.pdf

· Helping Secure the Cloud with AMD EPYC Secure Encrypted Virtualization

https://developer.amd.com/wp-content/resources/HelpingSecuretheCloudwithAMDEPYCSEV.pdf

· Azure confidential computing

https://azure.microsoft.com/en-us/solutions/confidential-compute/

· Azure and Intel commit to delivering next generation confidential computing

· DCsv2-series VM now generally available from Azure confidential computing

· Confidential computing nodes on Azure Kubernetes Service (public preview)

https://docs.microsoft.com/en-us/azure/confidential-computing/confidential-nodes-aks-overview

· Expanding Google Cloud’s Confidential Computing portfolio

· A deeper dive into Confidential GKE Nodes — now available in preview

https://cloud.google.com/blog/products/identity-security/confidential-gke-nodes-now-available

· Using HashiCorp Vault with Google Confidential Computing

https://www.hashicorp.com/blog/using-hashicorp-vault-with-google-confidential-computing

· Confidential Computing is cool!

https://medium.com/google-cloud/confidential-computing-is-cool-1d715cf47683

· Data-in-use protection on IBM Cloud using Intel SGX

https://www.ibm.com/cloud/blog/data-use-protection-ibm-cloud-using-intel-sgx

· Why IBM believes Confidential Computing is the future of cloud security

· Alibaba Cloud Released Industry’s First Trusted and Virtualized Instance with Support for SGX 2.0 and TPM

Tips for Selecting a Public Cloud Provider

When an organization needs to select a public cloud service provider, there are several variables and factors to take into consideration when choosing the provider most suitable for the organization’s needs.

In this post, we will review various considerations that will help organizations in the decision-making process.

Business goals

Before deciding to use a public cloud solution, or migrating existing environments to the cloud, it is important that organizations review their business goals. Explore what value the organization gains by maintaining existing systems on premise and what value the migration to the cloud promises. In accordance with what you discover, decide which systems will be deployed in the cloud first, or which systems your organization will choose to use as managed services.

Review the lists of services offered in the cloud

Public cloud providers publish a list of services in various areas.

Review the list of current services and see how they stand up to your organization’s needs. This will help you narrow down the most suitable options.

Here are some examples of public cloud service catalogs:

· AWS — https://aws.amazon.com/products/

· Azure — https://azure.microsoft.com/en-us/services/

· GCP — https://cloud.google.com/products

· Oracle Cloud — https://www.oracle.com/cloud/products.html

· IBM — https://www.ibm.com/cloud/products

· Salesforce — https://www.salesforce.com/eu/products/

· SAP — https://www.sap.com/products.html

Centrally authenticating users against Active Directory in IaaS / PaaS environments

Many organizations manage access rights to various systems based on an organizational Active Directory.

Although it is possible to deploy Domain Controllers based on virtual servers in an IaaS environment, or create a federation between the on-premise and the cloud environments, some cloud providers offer a managed Active Directory service based on the Kerberos protocol (the most common authentication protocol in on-premise environments), which might ease the migration to the public cloud.

Examples of managed Active Directory services:

· Azure Active Directory Domain Services

· Google Managed Service for Microsoft Active Directory

Understanding IaaS / PaaS pricing models

Public cloud providers publish pricing calculators and documentation on their service pricing models.

Understanding pricing models might be complex for some services. For this reason, it is highly recommended to contact an account manager, a partner, or a reseller for assistance.

Comparing similar services among different cloud providers will enable an organization to identify and choose the most suitable cloud provider based on the organization’s needs and budget.

Examples of pricing calculators:

· AWS Simple Monthly Calculator

· Google Cloud Platform Pricing Calculator

Check if your country has a local region of one of the public cloud providers

Selecting one provider over a competitor may be easier if the provider has a local region in your country. This can help, for example, where there are limitations on transferring data outside a specific country’s borders (or between continents), or where network latency is an issue when transferring large data sets between the local data centers and cloud environments.

This is relevant for all cloud service models (IaaS / PaaS / SaaS).

Examples of regional mapping:

· AWS:

AWS Regions and Availability Zones

· Azure and Office 365:

Where your Microsoft 365 customer data is stored

· Google Cloud Platform:

· Oracle Cloud:

Oracle Data Regions for Platform and Infrastructure Services

· Salesforce:

Where is my Salesforce instance located?

· SAP:

SAP Cloud Platform Regions and Service Portfolio

Service status reporting and outage history

Mature cloud providers transparently publish their service availability status in various regions around the world, including the outage history of their services.

They also know how to build stable and available infrastructure over the long term and over multiple geographic locations, as well as how to minimize the “blast radius” of an outage, which might affect many customers.

A thorough review of an outage history report gives organizations a good picture over an extended period and helps in the decision-making process.

Examples of cloud providers’ service status and outage history documentation:

· AWS:

· Azure:

· Google Cloud Platform:

Google Cloud Status Dashboard — Incidents Summary

· Oracle Cloud:

Oracle Cloud Infrastructure — Current Status

Oracle Cloud Infrastructure — Incident History

· Salesforce:

· SAP:

SAP Cloud Platform Status Page

Summary

As you can see, there are several important factors to take into consideration when selecting a specific cloud provider. We have covered some of the more common ones in this post.

For an organization to make an educated decision, it is recommended to check what brings value for the organization, in both the short and long-term. It is important to review cloud providers’ service catalogs, alongside a thorough review of global service availability, transparency, understanding pricing models and hybrid architecture that connects local data centers to the cloud.

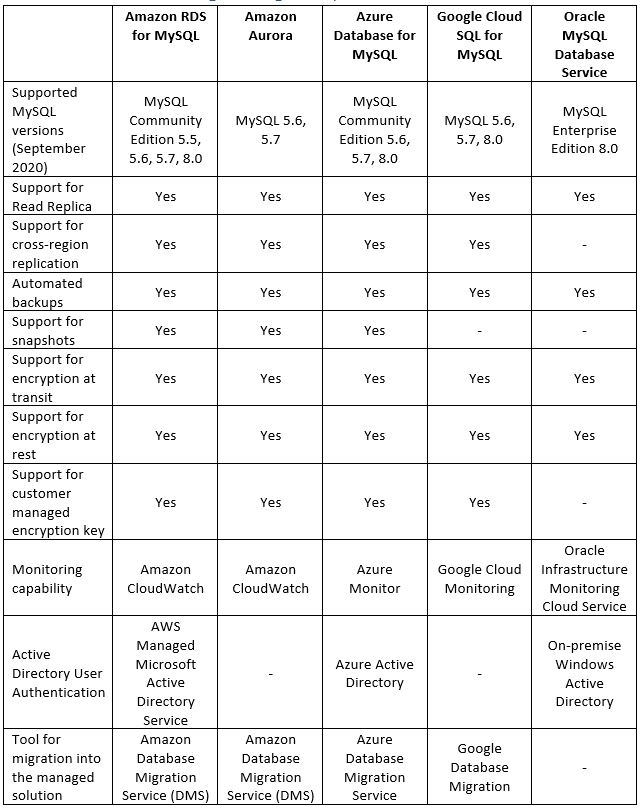

Running MySQL Managed Database in the Cloud

Today, more and more organizations are moving to the public cloud and choosing open source databases. They are choosing this for a variety of reasons, but license cost is one of the main ones.

In this post, we will review some of the common alternatives for running MySQL database inside a managed environment.

Legacy applications may be a reason for manually deploying and managing MySQL database.

Although it is possible to deploy a virtual machine and manually install MySQL database on top of it (or even a MySQL cluster), unless your organization has a dedicated and capable DBA, I recommend looking at what brings value to your organization. Unless databases directly influence your organization’s revenue, I recommend paying the extra money and choosing a managed solution based on a Platform as a Service model.

It is important to note that several cloud providers offer data migration services to assist in migrating existing MySQL (or even MS-SQL and Oracle) databases from on-premise to a managed service in the cloud.

Benefits of using managed database solutions

- Easy deployment – With a few clicks from within the web console, or using CLI tools, you can deploy fully managed MySQL databases (or a MySQL cluster)

- High availability and Read replica – Configurable during the deployment phase and after the product has already been deployed, according to customer requirements

- Maintenance – The entire service maintenance (including database fine-tuning, operating system, and security patches, etc.) is done by the cloud provider

- Backup and recovery – Embedded inside the managed solution and as part of the pricing model

- Encryption at transit and at rest – Embedded inside the managed solution

- Monitoring – As with any managed solution, cloud providers monitor service stability and allow customers access to metrics for further investigation (if needed)

Alternatives for running managed MySQL database in the cloud

Summary

As you can read in this article, running MySQL database in a managed environment in the cloud is a viable option, and there are various reasons for taking this step (license cost, less manpower needed to maintain the database and operating system, backups, security, availability, etc.).

References

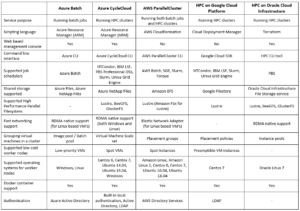

How to run HPC in the cloud?

Is it feasible to run HPC in the cloud? How different is it from running a local HPC cluster? What are some of the common alternatives for running HPC in the cloud?

Introduction

Before beginning our discussion about HPC (High Performance Computing) in the cloud, let us talk about what HPC really means.

“High Performance Computing most generally refers to the practice of aggregating computing power in a way that delivers much higher performance than one could get out of a typical desktop computer or workstation in order to solve large problems in science, engineering, or business.” (https://www.usgs.gov/core-science-systems/sas/arc/about/what-high-performance-computing)

In more technical terms – it refers to a cluster of machines composed of multiple cores (either physical or virtual cores), a lot of memory, fast parallel storage (for read/write) and fast network connectivity between cluster nodes.

HPC is useful when you need a lot of compute resources, from image or video rendering (in batch mode) to weather forecasting (which requires fast connectivity between the cluster nodes).

The world of HPC is divided into two categories:

- Loosely coupled – In this scenario you might need a lot of compute resources, however, each task can run in parallel and is not dependent on other tasks being completed.

Common examples of loosely coupled scenarios: Image processing, genomic analysis, etc.

- Tightly coupled – In this scenario you need fast connectivity between cluster resources (such as memory and CPU), and each cluster node depends on other nodes for the completion of the task. Common examples of tightly coupled scenarios: Computational fluid dynamics, weather prediction, etc.
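The loosely coupled case is the easier one to picture in code: every task is independent, so the tasks can simply be handed to a pool of workers. The sketch below uses a local thread pool purely to show that independence; in a real HPC cluster each task would be dispatched to a separate node by a scheduler. The `process_image` function is a made-up stand-in for real work.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def process_image(image_id: int) -> int:
    """Stand-in for an independent task, for example rendering a single frame."""
    return sum(i * i for i in range(image_id * 1000))


def run_batch(image_ids: Sequence[int], workers: int = 4) -> List[int]:
    """Run every task independently; no task waits for the result of another."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(process_image, image_ids))


results = run_batch([1, 2, 3, 4, 5])
print(len(results), "tasks completed")
```

A tightly coupled job cannot be expressed this way, because its tasks exchange intermediate results while they run, which is where fast interconnects between nodes become essential.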

Pricing considerations

Deploying an HPC cluster on premise requires significant resources. This includes a large investment in hardware (multiple machines connected in the cluster, with many CPUs or GPUs, with parallel storage and sometimes even RDMA connectivity between the cluster nodes), manpower with the knowledge to support the platform, a lot of electric power, and more.

Deploying an HPC cluster in the cloud is also costly. The price of a virtual machine with multiple CPUs, GPUs or a large amount of RAM can be very high compared to purchasing the same hardware on premise and using it 24×7 for 3-5 years.

The cost of parallel storage, as compared to other types of storage, is another consideration.

The magic formula for running HPC clusters in the cloud, while still enjoying the benefits of (virtually) unlimited compute/memory/storage resources, is to build dynamic clusters.

We do this by building the cluster for a specific job, according to the customer’s requirements (in terms of number of CPUs, amount of RAM, storage capacity, network connectivity between the cluster nodes, required software, etc.). Once the job is completed, we copy the job output data and take down the entire HPC cluster in order to avoid unnecessary hardware cost.
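The effect of the dynamic approach on cost can be sketched with simple arithmetic. The example below is a rough illustration only: the per-node hourly rate and the job list are hypothetical numbers, not real prices, and the calculation ignores storage, data transfer and licensing.

```python
def always_on_cost(hourly_rate: float, nodes: int, hours_in_period: float) -> float:
    """Cost of keeping a fixed-size cluster running for the whole period."""
    return hourly_rate * nodes * hours_in_period


def dynamic_cost(hourly_rate: float, jobs: list[tuple[int, float]]) -> float:
    """Cost when a cluster is built per job and taken down afterwards.

    Each job is (nodes, runtime_hours); only the job's runtime is billed.
    """
    return sum(hourly_rate * nodes * hours for nodes, hours in jobs)


if __name__ == "__main__":
    rate = 3.0                      # hypothetical price per node-hour
    jobs = [(16, 10.0), (64, 4.0)]  # hypothetical monthly workload
    print("always on:", always_on_cost(rate, 64, 720))
    print("dynamic:  ", dynamic_cost(rate, jobs))
```

The gap between the two figures shrinks as the cluster gets busier, which is why the customer's expected workload should be gathered before choosing an approach.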

Alternatives for running HPC in the cloud

Summary

As you can see, running HPC in the public cloud is a viable option. But you need to carefully plan the specific solution, after gathering the customer’s exact requirements in terms of required compute resources, required software and of course budget estimation.

Product documentation

- Azure Batch

https://azure.microsoft.com/en-us/services/batch/

- Azure CycleCloud

https://azure.microsoft.com/en-us/features/azure-cyclecloud/

- AWS ParallelCluster

https://aws.amazon.com/hpc/parallelcluster/

- Slurm on Google Cloud Platform

https://github.com/SchedMD/slurm-gcp

- HPC on Oracle Cloud Infrastructure

What makes a good cloud architect?

Virtually any organization active in the public cloud needs at least one cloud architect to be able to see the big picture and to assist designing solutions.

So, what makes a cloud architect a good cloud architect?

In a word – be multidisciplinary.

Customer-Oriented

While the position requires good technical skills, a good cloud architect must also have good customer-facing skills. A cloud architect needs to understand the business needs, from the end-users (usually connecting from the Internet) to the technological teams. That means being able to speak many “languages,” and translate from one to another while navigating the delicate nuances of each, all in the same conversation.

At the end of the day, the technology is just a means to serve your customers.

Sometimes a customer may ask for something non-technical at all (“Draw me a sheep…”) and sometimes it could be very technical (“I want to expose an API to allow read and update backend database”).

A good cloud architect knows how to turn a drawing of a sheep into a full-blown architecture diagram, complete with components, protocols, and more. In other words, translating a business or customer requirement into a technical requirement.

Technical Skills

Here are a few of the technical skills good cloud architects should have under their belts.

- Operating systems – Know how to deploy and troubleshoot problems related to virtual machines, based on both Windows and Linux.

- Cloud services – Be familiar with at least one public cloud provider’s services (such as AWS, Azure, GCP, Oracle Cloud, etc.). Even better to be familiar with at least two public cloud vendors since the world is heading toward multi-cloud environments.

- Networking – Be familiar with network-related concepts such as OSI model, TCP/IP, IP and subnetting, ACLs, HTTP, routing, DNS, etc.

- Storage – Be familiar with storage-related concepts such as object storage, block storage, file storage, snapshots, SMB, NFS, etc.

- Database – Be familiar with database-related concepts such as relational database, NoSQL database, etc.

- Architecture – Be familiar with concepts such as three-tier architecture, micro-services, serverless, twelve-factor app, API, etc.

Information Security

A good cloud architect can read an architecture diagram and knows which questions to ask and which security controls to embed inside a given solution.

- Identity management – Be familiar with concepts such as directory services, Identity and access management (IAM), Active Directory, Kerberos, SAML, OAuth, federation, authentication, authorization, etc.

- Auditing – Be familiar with concepts such as audit trail, access logs, configuration changes, etc.

- Cryptography – Be familiar with concepts such as TLS, public key authentication, encryption in transit and at rest, tokenization, hashing algorithms, etc.

- Application Security – Be familiar with concepts such as input validation, OWASP Top10, SDLC, SQL Injection, etc.

Laws, Regulation and Standards

In our dynamic world a good cloud architect needs to have at least a basic understanding of the following topics:

- Laws and Regulation – Be familiar with privacy regulations such as GDPR, CCPA, etc., and how they affect your organization’s cloud environments and products

- Standards – Be familiar with standards such as ISO 27001 (Information Security Management), ISO 27017 (Cloud Security), ISO 27018 (Protection of PII in public clouds), ISO 27701 (Privacy), SOC 2, CSA Security Trust Assurance and Risk (STAR), etc.

- Contractual agreements – Be able to read contracts between customers and public cloud providers, and know which topics need to appear in a typical contract (SLA, business continuity, etc.)

Code

Good cloud architects, like good DevOps guys or gals, are not afraid to get their hands dirty and are able to read and write code, mostly for automation purposes.

The required skills vary from scenario to scenario, but in most cases include:

- CLI – Be able to run command line tools, for anything from querying existing environment settings to updating or deploying new components.

- Scripting – Be familiar with at least one scripting language, such as PowerShell, Bash scripts, Python, JavaScript, etc.

- Infrastructure as Code – Be familiar with at least one declarative language, such as HashiCorp Terraform, AWS CloudFormation, Azure Resource Manager, Google Cloud Deployment Manager, Red Hat Ansible, etc.

- Programming languages – Be familiar with at least one programming language, such as Java, Microsoft .NET, Ruby, etc.

Sales

A good cloud architect needs to be able to “sell” a solution to various audiences. Again the required skills vary from scenario to scenario, but in most cases include:

- Pricing calculators – Be familiar with various cloud service pricing models and be able to estimate cloud service costs using tools such as AWS Simple Monthly Calculator, Azure Pricing Calculator, Google Cloud Platform Pricing Calculator, Oracle Cloud Cost Estimator, etc.

- Cloud vs. On-Premise – Be able to weigh in on the pros and cons of cloud vs. on premise, with different audiences.

- Architecture alternatives – Be able to present different architecture alternatives (from VM to micro-services up to Serverless) for each scenario. It is always a good idea to have a backup plan.

Summary

Recruiting a good cloud architect is indeed challenging. The role requires multidisciplinary skills – from soft skills (being customer-oriented and a salesperson) to deep technical skills (technology, cloud services, information security, etc.).

There is no alternative to years of hands-on experience. The more areas of experience cloud architects have, the better they will succeed at the job.

References

- What is a cloud architect? A vital role for success in the cloud.

- Want to Become a Cloud Architect? Here’s How

https://www.businessnewsdaily.com/10767-how-to-become-a-cloud-architect.html

The Public Cloud is Coming to Your Local Data Center

For a long time, public cloud providers have given users (almost) unlimited access to compute resources (virtual servers, storage, database, etc.) inside their end-to-end managed data centers. Recently, however, a need for local on-premise solutions has emerged.

For scenarios where network latency is a concern, or where there is a need to store sensitive or critical data inside a local data center, public cloud providers have built server racks meant for deploying familiar cloud infrastructure (virtual servers, storage and network equipment) locally, while using the same user interface and the same APIs for controlling components using CLI or SDK.

Managing the lower infrastructure layers (monitoring of hardware/software/licenses and infrastructure updates) is done remotely by the public cloud providers, which, in some cases, requires constant inbound Internet connectivity.

This solution allows customers to enjoy all the benefits of the public cloud (minus the scale), transparently expand on-premise environments to the public cloud, continue storing and processing data inside local data centers as much as required, and, in cases where there is demand for large compute power, migrate environments (or deploy new environments) to the public cloud.

The solution is suitable for military and defense users, or for organizations with large data sets that cannot be moved to the public cloud in a reasonable amount of time. Below is a comparison of three solutions currently available:

| | Azure Stack Hub | AWS Outposts | Oracle Private Cloud at Customer |
| --- | --- | --- | --- |
| Ability to work in disconnect mode from the public cloud / Internet | Fully supported / Partially supported | The solution requires constant connectivity to a region in the cloud | The solution requires remote connectivity of Oracle support for infrastructure monitoring and software updates |
| VM deployment support | Fully supported | Fully supported | Fully supported |
| Containers or Kubernetes deployment support | Fully supported | Fully supported | Fully supported |
| Support Object Storage locally | Fully supported | Will be supported in 2020 | Fully supported |
| Support Block Storage locally | Fully supported | Fully supported | Fully supported |
| Support managed database deployment locally | – | Fully supported (MySQL, PostgreSQL) | Fully supported (Oracle Database) |
| Support data analytics deployment locally | – | Fully supported (Amazon EMR) | – |
| Support load balancing services locally | Fully supported | Fully supported | Fully supported |
| Built in support for VPN connectivity to the solution | Fully supported | – | – |
| Support connectivity between the solution and resources from on premise site | – | Fully supported | – |
| Built in support for encryption services (data at rest) | Fully supported (Key Vault) | Fully supported (AWS KMS) | – |
| Maximum number of physical cores (per rack) | 100 physical cores | – | 96 physical cores |
| Maximum storage capacity (per rack) | 5TB | 55TB | 200TB |

Summary

The private cloud solutions noted here are not identical in terms of their capabilities. At least for the initial installation and support, a partner who specializes in this field is a must.

Support for the well-known services from public cloud environments (virtual servers, storage, database, etc.) will expand over time, as these solutions become more commonly used by organizations or hosting providers.

These solutions are not meant for every customer. However, they provide a suitable solution in scenarios where it is not possible to use the public cloud, for regulatory or military/defense reasons for example, or when organizations are planning a long-term migration to the public cloud a few years in advance. Such plans can be due to legacy applications not built for the cloud, network latency issues or large data sets that need to be copied to the cloud.

Best Practices for Deploying New Environments in the Cloud for the First Time

When organizations take their first steps to use public cloud services, they tend to look at a specific target.

My recommendation – think scale!

Plan a couple of steps ahead instead of looking at a single server that serves just a few customers. Think about a large environment comprised of hundreds or thousands of servers, serving 10,000 customers concurrently.

Planning will allow you to manage the environment (infrastructure, information security and budget) when you do reach a scale of thousands of concurrent customers. The more we plan the deployment of new environments in advance, according to their business purposes and the resources required for each environment, the easier it will be to scale up while maintaining a high level of security, budget control, change management and more.

In this three-part blog series, we will review some of the most important topics that will help avoid mistakes while building new cloud environments for the first time.

Resource allocation planning

The first step in resource allocation planning is to decide how to divide resources: based on the organizational structure (sales, HR, infrastructure, etc.) or based on environments (production, Dev, testing, etc.).

In order to avoid mixing resources (or access rights) between various environments, the best practice is to separate the environments as follows:

- Shared resources account (security products, auditing, billing management, etc.)

- Development environment account (consider creating a separate account for test environment purposes)

- Production environment account

Separating different accounts or environments can be done using:

- Azure Subscriptions or Azure Resource Groups

- AWS Accounts

- GCP Projects

- Oracle Cloud Infrastructure Compartments

Tagging resources

Even when deploying a single server inside a network environment (AWS VPC, Azure Resource Group, GCP VPC), it is important to tag resources. This allows identifying which resources belong to which projects / departments / environments, for billing purposes.

Common tagging examples:

- Project

- Department

- Environment (Prod, Dev, Test)

Beyond tagging, it is recommended to add a description to resources that support this kind of metadata, in order to locate resources by their target use.
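A tagging convention is easier to keep when it is checked automatically. The following is a minimal sketch, assuming the three tag keys listed above are mandatory and that `Environment` is limited to Prod, Dev and Test; the function name and the rule set are illustrative assumptions, not part of any cloud provider's API.

```python
REQUIRED_TAGS = ("Project", "Department", "Environment")
ALLOWED_ENVIRONMENTS = {"Prod", "Dev", "Test"}


def tag_problems(tags: dict[str, str]) -> list[str]:
    """Return a list of human-readable problems with a resource's tags."""
    # A tag counts as missing when the key is absent or its value is empty.
    problems = [f"missing tag: {key}" for key in REQUIRED_TAGS if not tags.get(key)]
    env = tags.get("Environment")
    if env and env not in ALLOWED_ENVIRONMENTS:
        problems.append(f"unknown Environment value: {env}")
    return problems


if __name__ == "__main__":
    print(tag_problems({"Project": "billing", "Environment": "Staging"}))
```

A check like this could run in a deployment pipeline, so that untagged resources are caught before they show up as unattributed lines on the bill.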

Authentication, Authorization and Password Policy

In order to ease the management of accounts in the cloud (and in the future, multiple accounts according to the various environments), the best practice is to follow the rules below:

- Central authentication – In case the organization isn’t using Active Directory for central account management and access rights, the alternative is to use managed services such as AWS IAM, Google Cloud IAM, Azure AD, Oracle Cloud IAM, etc.

If a managed IAM service is chosen, it is critical to set its password policy according to the organization’s password policy (minimum password length, password complexity, password history, etc.)

- If a central directory service is used by the organization, it is recommended to connect and sync the managed IAM service in the cloud to the organization’s central directory service on premise (federated authentication).

- It is crucial to protect privileged accounts in the cloud environment (such as AWS Root Account, Azure Global Admin, Azure Subscription Owner, GCP Project Owner, Oracle Cloud Service Administrator, etc.), among others, by limiting the use of privileged accounts to the minimum required, enforcing complex passwords and password rotation every few months, and enabling multi-factor authentication and auditing on privileged accounts.

- Access to resources should be defined according to the least privilege principle.

- Access to resources should be set to groups instead of specific users.

- Access to resources should be based on roles in AWS, Azure, GCP, Oracle Cloud, etc.
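Managed IAM services enforce the password policy themselves, but it can help to see what such a policy checks. The sketch below is an illustration only, covering minimum length and complexity; the default length of 14 is a hypothetical value, and password history is left out because it requires comparing against stored hashes.

```python
import string
from dataclasses import dataclass


@dataclass(frozen=True)
class PasswordPolicy:
    min_length: int = 14  # hypothetical value; use your organization's policy


def policy_violations(password: str, policy: PasswordPolicy) -> list[str]:
    """Return the policy rules that the given password breaks."""
    violations = []
    if len(password) < policy.min_length:
        violations.append("too short")
    # Complexity: require at least one character from each class.
    classes = [
        string.ascii_lowercase,
        string.ascii_uppercase,
        string.digits,
        string.punctuation,
    ]
    if not all(any(ch in cls for ch in password) for cls in classes):
        violations.append("missing character class")
    return violations


if __name__ == "__main__":
    print(policy_violations("short", PasswordPolicy()))
```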

Audit Trail

It is important to enable auditing in all cloud environments, in order to gain insights on access to resources, actions performed in the cloud environment and by whom. This is important for both security and change management reasons.

Common managed audit trail services:

- AWS CloudTrail – It is recommended to enable auditing on all regions and forward the audit logs to a central S3 bucket in a central AWS account (which will be accessible only to a limited number of user accounts).

- Azure Monitor – It is recommended to enable Azure Monitor in the first phase, in order to audit all access to resources and actions done inside the subscription. Later on, when the environment expands, you may consider using services such as Azure Security Center and Azure Sentinel for auditing purposes.

- Google Cloud Logging – It is recommended to enable auditing on all GCP projects and forward the audit logs to a central GCP project (which will be accessible only to a limited number of user accounts).

- Oracle Cloud Infrastructure Audit service – It is recommended to enable auditing on all compartments and forward the audit logs to the Root compartment account (which will be accessible only to a limited number of user accounts).

Budget Control

It is crucial to set a budget and budget alerts for every account in the cloud in the early stages of working with a cloud environment. This is important in order to avoid scenarios in which high resource consumption happens due to human error, such as purchasing or consuming expensive resources, or due to Denial of Wallet scenarios, where external attackers breach an organization’s cloud account and deploy servers for Bitcoin mining.

Common examples of budget control management for various cloud providers:

- AWS Consolidated Billing – Configure a central account for all the AWS accounts in the organization, in order to forward billing data to it (which will be accessible only to a limited number of user accounts).

- GCP Cloud Billing Account – Central repository for storing all billing data from all GCP projects.

- Azure Cost Management – An interface for configuring budget and budget alerts for all Azure subscriptions in the organization. It is possible to consolidate multiple Azure subscriptions into Management Groups in order to centrally control budgets for all subscriptions.

- Budget on Oracle Cloud Infrastructure – An interface for configuring budget and budget alerts for all compartments.
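The logic behind a budget alert is simple enough to sketch. The example below is a provider-neutral illustration, assuming alerts at 50%, 80% and 100% of the budget; these thresholds and the function name are assumptions, and the managed services listed above evaluate their own configured thresholds.

```python
def crossed_thresholds(
    budget: float,
    spend: float,
    thresholds: tuple[float, ...] = (0.5, 0.8, 1.0),
) -> list[float]:
    """Return the alert thresholds (fractions of the budget) already reached."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    used = spend / budget
    return [t for t in thresholds if used >= t]


if __name__ == "__main__":
    # Hypothetical monthly budget and month-to-date spend.
    print(crossed_thresholds(budget=1000, spend=850))
```

Alerting below 100% is the point of the exercise: it leaves time to react before the budget is actually exceeded.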

Secure access to cloud environments

In order to avoid inbound access from the Internet to resources in cloud environments (virtual servers, databases, storage, etc.), it is highly recommended to deploy a bastion host, which will be accessible from the Internet (SSH or RDP traffic) and will allow access and management of resources inside the cloud environment.

Common guidelines for deploying Bastion Host:

- Linux Bastion Hosts on AWS

- Create an Azure Bastion host using the portal

- Securely connecting to VM instances on GCP

- Setting Up the Basic Infrastructure for a Cloud Environment, based on Oracle Cloud

As the usage of cloud environments expands, consider deploying a VPN tunnel from the corporate network (Site-to-site VPN) or allowing client VPN access from the Internet to the cloud environment (such as AWS Client VPN endpoint, Azure Point-to-Site VPN, Oracle Cloud SSL VPN).

Managing compute resources (Virtual Machines and Containers)

When choosing to deploy virtual machines in a cloud environment, it is highly recommended to follow these guidelines:

- Choose an existing image from a pre-defined list in the cloud providers’ marketplace (operating system flavor, operating system build, and sometimes an image that includes additional software inside the base image).

- Configure the image according to organizational or application demands.

- Update all software versions inside the image.

- Store an up-to-date version of the image (“Golden Image”) inside the central image repository in the cloud environment (for reuse).

- In case the information inside the virtual machines is critical, consider using managed backup services (such as AWS Backup or Azure Backup).

- When deploying Windows servers, it is crucial to set complex passwords for the local Administrator’s account, and when possible, join the Windows machine to the corporate domain.

- When deploying Linux servers, it is crucial to use SSH Key authentication and store the private key(s) in a secure location.

- Whenever possible, encrypt data at rest for all block volumes (the server’s hard drives / volumes).

- It is highly recommended to connect the servers to a managed vulnerability assessment service, in order to detect software vulnerabilities (services such as Amazon Inspector or Azure Security Center).

- It is highly recommended to connect the servers to a managed patch management service in order to ease the work of patch management (services such as AWS Systems Manager Patch Manager, Azure Automation Update Management or Google OS Patch Management).

When choosing to deploy containers in the cloud environment, it is highly recommended to follow these guidelines:

- Use a Container image from a well-known container repository.

- Update all binaries and all dependencies inside the Container image.

- Store all Container images inside a managed container repository inside the cloud environment (services such as Amazon ECR, Azure Container Registry, GCP Container Registry, Oracle Cloud Container Registry, etc.)

- Avoid using Root account inside the Containers.

- Avoid storing data (such as session IDs) inside the Container – make sure the container is stateless.

- It is highly recommended to connect the CI/CD process and the container update process to a managed vulnerability assessment service, in order to detect software vulnerabilities (services such as Amazon ECR Image scanning, Azure Container Registry, GCP Container Analysis, etc.)

Storing sensitive information

It is highly recommended to avoid storing sensitive information, such as credentials, encryption keys, secrets, API keys, etc., in clear text inside virtual machines, containers, text files or on the local desktop.

Sensitive information should be stored inside managed vault services such as:

- AWS KMS or AWS Secrets Manager

- Azure Key Vault

- Google Cloud KMS or Google Secret Manager

- Oracle Cloud Infrastructure Key Management

- HashiCorp Vault

Object Storage

When using Object Storage, it is recommended to follow these guidelines:

- Avoid allowing public access to services such as Amazon S3, Azure Blob Storage, Google Cloud Storage, Oracle Cloud Object Storage, etc.

- Enable access auditing on Object Storage and store the access logs in a central account in the cloud environment (which will be accessible only to a limited number of user accounts).

- It is highly recommended to encrypt all data at rest inside Object Storage and, when there is a business or regulatory requirement, to encrypt the data using customer-managed keys.

- It is highly recommended to enforce HTTPS/TLS for access to object storage (users, computers and applications).

- Avoid creating object storage bucket names with sensitive information, since object storage bucket names are unique and saved inside the DNS servers worldwide.

Networking

- Make sure access to all resources is protected by access lists (such as AWS Security Groups, Azure Network Security Groups, GCP Firewall Rules, Oracle Cloud Network Security Groups, etc.)

- Avoid allowing inbound access to cloud environments using protocols such as SSH or RDP (in case remote access is needed, use Bastion host or VPN connections).

- As much as possible, it is recommended to avoid outbound traffic from the cloud environment to the Internet. If needed, use a NAT Gateway (such as Amazon NAT Gateway, Azure NAT Gateway, GCP Cloud NAT, Oracle Cloud NAT Gateway, etc.)

- As much as possible, use DNS names to access resources instead of static IPs.

- When designing cloud environments, and subnets inside new environments, avoid overlapping IP ranges between subnets, in order to allow peering between cloud environments.
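Overlapping address ranges are easy to detect before anything is deployed. The following is a minimal sketch using Python's standard `ipaddress` module; the function name and the sample CIDR blocks are illustrative assumptions.

```python
from ipaddress import ip_network
from itertools import combinations


def overlapping_pairs(cidrs: list[str]) -> list[tuple[str, str]]:
    """Return every pair of CIDR blocks that share at least one address."""
    networks = [ip_network(cidr) for cidr in cidrs]
    return [
        (str(a), str(b))
        for a, b in combinations(networks, 2)
        if a.overlaps(b)
    ]


if __name__ == "__main__":
    # Hypothetical address plan for three environments.
    planned = ["10.0.0.0/16", "10.0.1.0/24", "10.1.0.0/16"]
    print(overlapping_pairs(planned))
```

Running a check like this against the full address plan, including on-premise ranges, helps keep later peering or VPN connectivity possible without readdressing.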

Advanced use of cloud environments

- Prefer to use managed services instead of manually managing virtual machines (services such as Amazon RDS, Azure SQL Database, Google Cloud SQL, etc.)

This allows consuming services rather than maintaining servers, operating systems, updates/patches, backup and availability (assuming the managed service is deployed in cluster or replica mode).

- Use Infrastructure as Code (IaC) in order to ease environment deployments, reduce human error and standardize deployment across multiple environments (Prod, Dev, Test).

Common Infrastructure as Code alternatives:

Summary

To sum up:

Plan. Know what you need. Think scale.

If you use the best practices outlined here, taking off to the cloud for the first time will be an easier, safer and smoother ride than you might expect.

Additional references

Benefits of using managed database as a service in the cloud

When using public cloud services for relational databases, you have two options:

- IaaS solution – Install a database server on top of a virtual machine

- PaaS solution – Connect to a managed database service

In the traditional data center, organizations had to maintain the operating system and the database by themselves.

The benefits are very clear – full control over the entire stack.

The downside – The organization needs to maintain availability, license cost and security (access control, patch level, hardening, auditing, etc.)

Today, all the major public cloud vendors offer managed services for databases in the cloud.

To connect to the database and begin working, all a customer needs is a DNS name, port number and credentials.
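Many database drivers accept these details as a single URL-style connection string. The sketch below shows one way to assemble it, assuming such a URL format is supported by the driver in use; the host name, user, and database name are hypothetical, and in practice the password should be read from a vault service rather than written in code.

```python
from urllib.parse import quote, urlunsplit


def connection_url(scheme: str, host: str, port: int,
                   user: str, password: str, database: str) -> str:
    """Build a URL-style connection string from endpoint and credentials."""
    # Percent-encode credentials so characters such as '@' or ':' stay unambiguous.
    userinfo = f"{quote(user, safe='')}:{quote(password, safe='')}"
    return urlunsplit((scheme, f"{userinfo}@{host}:{port}", f"/{database}", "", ""))


if __name__ == "__main__":
    # All values below are placeholders for illustration.
    print(connection_url("postgresql", "db.example.internal", 5432,
                         "app", "p@ss", "orders"))
```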

The benefits of a managed database service are:

- Easy administration – No need to maintain the operating system (including patch level for the OS and for the database, system hardening, backup, etc.)

- Scalability – The number of virtual machines in the cluster will grow automatically according to load, in addition to the storage space required for the data

- High availability – The cluster can be configured to span across multiple availability zones (physical data centers)

- Performance – Usually the cloud provider installs the database on SSD storage

- Security – Encryption at rest and in transit

- Monitoring – Built into the service

- Cost – Pay only for what you use

Note that not all features available in the on-premises version of a database are available in the PaaS version, and not all common databases are available as a managed service from the major cloud providers.

Amazon RDS

Amazon’s managed database service currently (as of April 2018) supports the following database engines:

- Microsoft SQL Server (2008 R2, 2012, 2014, 2016, and 2017)

Amazon RDS for SQL Server FAQs:

https://aws.amazon.com/rds/sqlserver/faqs

Known limitations:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/CHAP_SQLServer.html#SQLServer.Concepts.General.FeatureSupport.Limits

- MySQL (5.5, 5.6 and 5.7)

Amazon RDS for MySQL FAQs:

https://aws.amazon.com/rds/mysql/faqs

Known limitations:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/MySQL.KnownIssuesAndLimitations.html

- Oracle (11.2 and 12c)

Amazon RDS for Oracle Database FAQs:

https://aws.amazon.com/rds/oracle/faqs

Known limitations:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/CHAP_Limits.html

- PostgreSQL (9.3, 9.4, 9.5, and 9.6)

Amazon RDS for PostgreSQL FAQs:

https://aws.amazon.com/rds/postgresql/faqs

Known limitations:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/CHAP_PostgreSQL.html#PostgreSQL.Concepts.General.Limits

- MariaDB (10.2)

Amazon RDS for MariaDB FAQs:

https://aws.amazon.com/rds/mariadb/faqs

Known limitations:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/CHAP_Limits.html

Azure Managed databases

Microsoft Azure managed database services currently (as of April 2018) support the following database engines:

- Azure SQL Database

Technical overview:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-technical-overview

Known limitations:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-dtu-resource-limits

- MySQL (5.6 and 5.7)

Technical overview:

https://docs.microsoft.com/en-us/azure/mysql/overview

Known limitations:

https://docs.microsoft.com/en-us/azure/mysql/concepts-limits

- PostgreSQL (9.5 and 9.6)

Technical overview:

https://docs.microsoft.com/en-us/azure/postgresql/overview

Known limitations:

https://docs.microsoft.com/en-us/azure/postgresql/concepts-limits

Google Cloud SQL

Google managed database services currently (as of April 2018) support the following database engines:

- MySQL (5.6 and 5.7)

Product documentation:

https://cloud.google.com/sql/docs/mysql

Known limitations:

https://cloud.google.com/sql/docs/mysql/known-issues

- PostgreSQL (9.6)

Product documentation:

https://cloud.google.com/sql/docs/postgres

Known limitations:

https://cloud.google.com/sql/docs/postgres/known-issues

Oracle Database Cloud Service

Oracle managed database services currently (as of April 2018) support the following database engines:

- Oracle (11g and 12c)

Product documentation:

https://cloud.oracle.com/en_US/database/features

Known issues:

https://docs.oracle.com/en/cloud/paas/database-dbaas-cloud/kidbr/index.html#KIDBR109

- MySQL (5.7)

Product documentation:

https://cloud.oracle.com/en_US/mysql/features