Introduction to AWS Resilience Hub

When deploying a new application in the public cloud, we need to ask the business owner what the resiliency (or SLA) requirements are: how long can the business survive while our application is down and not serving customers?

There are various answers to that question, from 24/7 availability with no downtime at all (not realistic) to an uptime target such as 99.9%.
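
To make an uptime target concrete, it helps to translate the percentage into the downtime it permits. The following is a minimal sketch of that arithmetic; the function name `allowed_downtime` and the one-year default period are assumptions chosen for illustration.

```python
from datetime import timedelta


def allowed_downtime(availability_percent: float,
                     period: timedelta = timedelta(days=365)) -> timedelta:
    """Return the maximum downtime an availability target permits over a period."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    unavailable_fraction = 1 - availability_percent / 100
    return period * unavailable_fraction


# Print the yearly downtime budget for a few common targets.
for target in (99.0, 99.9, 99.99):
    hours = allowed_downtime(target).total_seconds() / 3600
    print(f"{target}% uptime allows about {hours:.2f} hours of downtime per year")
```

For example, a 99.9% target over a 365-day year leaves roughly 8.76 hours of downtime, which is a useful number to bring to the conversation with the business owner.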

The domain of resiliency has two main concepts:

- RTO (Recovery Time Objective) – the maximum acceptable time to recover a system after a disruption

- RPO (Recovery Point Objective) – the maximum acceptable amount of data loss, measured in time
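
The two concepts can be illustrated with a small check against a past incident. This is only a sketch: the `Incident` class, its field names, and the `meets_objectives` helper are hypothetical and not part of any AWS API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    last_good_backup: datetime   # most recent recoverable copy of the data
    outage_start: datetime       # when the disruption began
    service_restored: datetime   # when the application served customers again


def meets_objectives(incident: Incident, rto: timedelta, rpo: timedelta) -> dict:
    """Compare the measured downtime and data-loss window with the RTO and RPO."""
    downtime = incident.service_restored - incident.outage_start
    data_loss_window = incident.outage_start - incident.last_good_backup
    return {
        "downtime": downtime,
        "data_loss_window": data_loss_window,
        "rto_met": downtime <= rto,
        "rpo_met": data_loss_window <= rpo,
    }
```

In this sketch, an outage that starts 30 minutes after the last backup and lasts 90 minutes would meet a one-hour RPO but miss a one-hour RTO.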

Achieving high resiliency, or meeting business SLA requirements, has technical and cost consequences.

Naturally, we want to provision resources for high availability (such as a farm of front-end web servers behind a load balancer), in a cluster (such as a cluster of database instances), deployed across multiple availability zones or perhaps multiple regions, and to avoid single points of failure.

We need to plan an architecture that will support our business resiliency requirements.

In theory, an architect can review a proposed architecture and point out potential availability failures, but manual review does not scale to large and complex architectures.

In 2021, AWS announced the general availability of the AWS Resilience Hub.

In this blog post, I will review the purpose of this service and how we can use it regularly, as part of our CI/CD process.

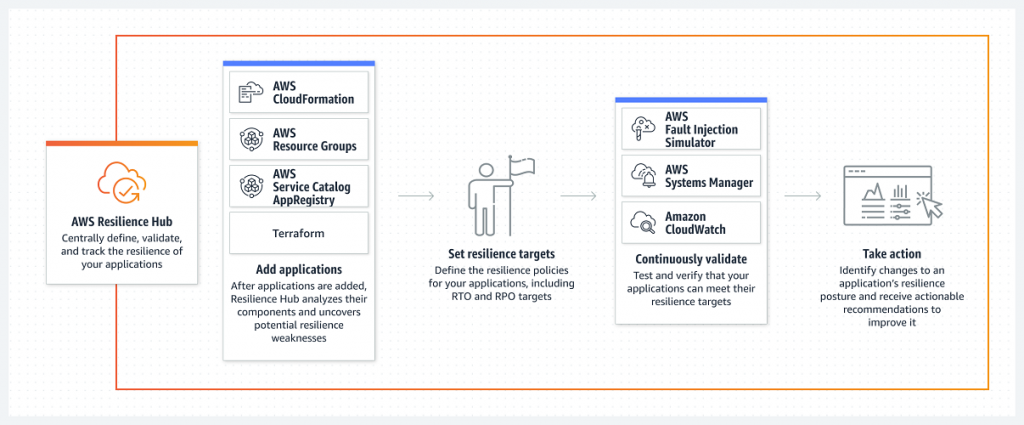

How does AWS Resilience Hub work?

Source: https://docs.aws.amazon.com/resilience-hub/latest/userguide/how-it-works.html

To work with AWS Resilience Hub, follow the steps below:

Add an application

AWS Resilience Hub allows you to assess an application by scanning the following resources:

- AWS Resource Groups

- AWS AppRegistry applications

- AWS CloudFormation stacks

- Terraform state files

- Amazon EKS cluster configuration
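
As a rough sketch of this step in code, the function below registers a CloudFormation stack as an application, waits for the resource import, and publishes the version. It assumes a boto3 `resiliencehub` client is passed in, and that the operations `create_app`, `import_resources_to_draft_app_version`, `describe_draft_app_version_resources_import_status`, and `publish_app_version` behave as I understand them from the boto3 documentation; verify the parameters and response fields before relying on it. The function name and polling defaults are hypothetical.

```python
import time


def register_cloudformation_app(client, app_name: str, stack_arn: str,
                                policy_arn: str, poll_seconds: float = 5.0,
                                max_polls: int = 60) -> str:
    """Create an application from a CloudFormation stack and return its ARN.

    `client` is assumed to be boto3.client("resiliencehub").
    """
    app_arn = client.create_app(name=app_name, policyArn=policy_arn)["app"]["appArn"]

    # The import runs asynchronously, so poll until it finishes.
    client.import_resources_to_draft_app_version(appArn=app_arn, sourceArns=[stack_arn])
    for _ in range(max_polls):
        status = client.describe_draft_app_version_resources_import_status(
            appArn=app_arn)["status"]
        if status == "Success":
            # Publishing turns the draft into the version that gets assessed.
            client.publish_app_version(appArn=app_arn)
            return app_arn
        if status == "Failed":
            raise RuntimeError(f"resource import failed for {app_arn}")
        time.sleep(poll_seconds)
    raise TimeoutError(f"resource import did not finish for {app_arn}")
```

Passing the client as a parameter keeps the function easy to exercise with a stub, without AWS credentials.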

Set resilience targets

AWS Resilience Hub supports the following built-in tiers:

- Foundational IT core services

- Mission critical

- Critical

- Important

- Non-critical

Choose the target policy according to the application's business requirements for RTO and RPO.

Select one of the predefined suggested policies:

- Non-critical application

- Important Application

- Critical Application

- Global Critical Application

- Mission Critical Application

- Global Mission Critical Application

- Foundational Core Service

AWS Resilience Hub allows you to evaluate the resiliency of an application against the following types of disruption:

- Customer Application RTO and RPO

- AWS Infrastructure RTO and RPO

- Cloud Infrastructure Availability Zone (AZ) disruption

- AWS Region disruption
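
If none of the suggested policies fit, the RTO and RPO targets can be expressed as data. The sketch below builds the kind of payload I believe the boto3 `create_resiliency_policy` operation expects, under the assumption that the four disruption types above correspond to the API keys `Software`, `Hardware`, `AZ`, and `Region`, each with `rtoInSecs` and `rpoInSecs`. The helper name and the numbers in the usage comment are illustrative only.

```python
# Assumed API names for the disruption types (application, infrastructure, AZ, Region).
DISRUPTION_TYPES = ("Software", "Hardware", "AZ", "Region")


def build_policy(targets: dict) -> dict:
    """Turn {disruption: (rto_seconds, rpo_seconds)} into a policy payload."""
    policy = {}
    for disruption, (rto, rpo) in targets.items():
        if disruption not in DISRUPTION_TYPES:
            raise ValueError(f"unknown disruption type: {disruption}")
        if rto < 0 or rpo < 0:
            raise ValueError("RTO and RPO must be non-negative")
        policy[disruption] = {"rtoInSecs": int(rto), "rpoInSecs": int(rpo)}
    return policy


# Hypothetical usage, with `client` being boto3.client("resiliencehub"):
# client.create_resiliency_policy(
#     policyName="example-critical-policy",
#     tier="Critical",
#     policy=build_policy({"Software": (3600, 900), "AZ": (3600, 900)}),
# )
```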

Run an assessment

AWS Resilience Hub allows you to either run manual one-time assessments or schedule a daily assessment.

To get the most value from AWS Resilience Hub, you can integrate it as part of a CI/CD pipeline, as an additional step, once you provision Infrastructure as Code (using CloudFormation templates or Terraform modules).

A common example of integration with a CI/CD pipeline:
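
One way such a pipeline step could look is a gate that starts an assessment after the infrastructure is provisioned, waits for it to finish, and fails the build when the resiliency policy is breached. This is a minimal sketch, assuming a boto3 `resiliencehub` client, the operations `start_app_assessment` and `describe_app_assessment`, the published version name `release`, and the status values `Success`, `Failed`, and `PolicyMet`; the function name, assessment name, and polling defaults are hypothetical.

```python
import time


def assessment_gate(client, app_arn: str, app_version: str = "release",
                    assessment_name: str = "ci-assessment",
                    poll_seconds: float = 15.0, max_polls: int = 80) -> bool:
    """Run an assessment and return True only if the resiliency policy is met.

    `client` is assumed to be boto3.client("resiliencehub").
    """
    started = client.start_app_assessment(
        appArn=app_arn, appVersion=app_version, assessmentName=assessment_name)
    assessment_arn = started["assessment"]["assessmentArn"]

    for _ in range(max_polls):
        assessment = client.describe_app_assessment(
            assessmentArn=assessment_arn)["assessment"]
        status = assessment["assessmentStatus"]
        if status == "Success":
            return assessment.get("complianceStatus") == "PolicyMet"
        if status == "Failed":
            raise RuntimeError(f"assessment failed: {assessment_arn}")
        time.sleep(poll_seconds)
    raise TimeoutError(f"assessment did not finish: {assessment_arn}")
```

In a pipeline script, the boolean result would typically be mapped to the process exit code, so that a breached policy stops the deployment.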

In a mature environment, you can go one step further and integrate AWS Resilience Hub with the built-in chaos engineering service, AWS Fault Injection Service (formerly AWS Fault Injection Simulator), to conduct controlled experiments on your application and evaluate its resiliency.

Review results and continue improvements

Once an assessment has completed, it is time to review the results to make sure your application meets the business resiliency requirements (in terms of RTO/RPO).

The results will be written in a report, with recommendations for improvements to your application resiliency, such as adding another node to an RDS cluster, deploying another EC2 instance in another availability zone, enabling S3 bucket versioning, etc.
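
The review can also be partly automated. The sketch below assumes the assessment details returned by the API contain a `compliance` map keyed by disruption type, with `complianceStatus`, `currentRtoInSecs`, and `currentRpoInSecs` fields; that shape is my reading of the boto3 `describe_app_assessment` response and should be checked against the documentation. The helper name and the sample values are purely illustrative.

```python
def breached_disruptions(assessment: dict) -> list:
    """List (disruption type, current RTO, current RPO) for every unmet target."""
    breached = []
    for disruption, result in assessment.get("compliance", {}).items():
        if result.get("complianceStatus") != "PolicyMet":
            breached.append((disruption,
                             result.get("currentRtoInSecs"),
                             result.get("currentRpoInSecs")))
    # Sort by disruption name so the report order is stable.
    return sorted(breached, key=lambda item: item[0])


# Illustrative data only, not real assessment output.
sample = {
    "compliance": {
        "Software": {"complianceStatus": "PolicyMet",
                     "currentRtoInSecs": 600, "currentRpoInSecs": 300},
        "AZ": {"complianceStatus": "PolicyBreached",
               "currentRtoInSecs": 14400, "currentRpoInSecs": 3600},
    }
}
for disruption, rto, rpo in breached_disruptions(sample):
    print(f"{disruption}: current RTO {rto}s, current RPO {rpo}s")
```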

To make things easy to understand and improve over time, you can build dashboards using Amazon QuickSight and send alerts using CloudWatch, as explained in the blog post:

https://aws.amazon.com/blogs/mt/resilience-reporting-dashboard-aws-resilience-hub/

For continuous and automated improvement, you can integrate AWS Resilience Hub with AWS Systems Manager to efficiently recover your application in the event of outages, as explained in the documentation:

https://docs.aws.amazon.com/resilience-hub/latest/userguide/create-custom-ssm-doc.html

Summary

In this blog post, we learned about the purpose of AWS Resilience Hub, the steps for using it, and perhaps most importantly, how to automate the assessment as part of a CI/CD pipeline for continuous improvement.

I encourage anyone who builds applications on top of AWS to learn about the benefits of this service, which provides insight into whether an application's resiliency meets business requirements.

Introduction to Chaos Engineering

Over the past couple of years, we have been hearing the term “Chaos Engineering” in the context of the cloud.

Mature organizations have already begun to embrace the concepts of chaos engineering, and perhaps the most famous use of chaos engineering began at Netflix when they developed Chaos Monkey.

To quote Werner Vogels, Amazon CTO: “Everything fails, all the time”.

What is chaos engineering and what are the benefits of using chaos engineering for increasing the resiliency and reliability of workloads in the public cloud?

What is Chaos Engineering?

“Chaos Engineering is the discipline of experimenting on a system to build confidence in the system’s capability to withstand turbulent conditions in production.” (Source: https://principlesofchaos.org)

Large-scale production workloads are built from multiple services, forming distributed systems.

When we design large-scale workloads, we think about things such as:

- Creating highly available systems

- Creating disaster recovery plans

- Reducing single points of failure

- Having the ability to scale up and down quickly according to the load on our application

One thing we usually do not stop to think about is the connectivity between various components of our application and what will happen in case of failure in one of the components of our application.

What will happen if, for example, a web server tries to access a backend database but cannot do so because of network latency on the way to the database?

How will this affect our application and our customers?

What if we could test such scenarios on a live production environment, regularly?

Do we trust our application or workload infrastructure so much that we are willing to randomly take down parts of it, just to learn the effect on our application?

How will this affect the reliability of our application, and how will it allow us to build better applications?
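
The web server and backend database scenario above can be imitated locally to see how a timeout and a fallback change the outcome. This is a toy sketch: `query_database` and `handle_request` are hypothetical stand-ins, and the injected latency is a simple `sleep` rather than a real network fault.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout


def query_database(injected_latency: float) -> str:
    """Stand-in for a backend call; the sleep simulates network latency."""
    time.sleep(injected_latency)
    return "fresh data"


def handle_request(injected_latency: float, timeout: float = 0.2) -> str:
    """Serve fresh data when the backend answers in time, else fall back."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(query_database, injected_latency)
    try:
        return future.result(timeout=timeout)
    except FutureTimeout:
        # Degrade gracefully instead of making the customer wait.
        return "cached data"
    finally:
        # Do not block the caller on the slow backend call.
        pool.shutdown(wait=False)


print(handle_request(injected_latency=0.0))   # backend is healthy
print(handle_request(injected_latency=0.5))   # backend is slow
```

Without the timeout, the slow backend would stall every request; with it, the experiment shows that customers still receive a (possibly stale) response.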

History of Chaos Engineering

In 2010, Netflix developed a tool called “Chaos Monkey”, whose goal was to randomly take down compute services (such as virtual machines or containers) that were part of the Netflix production environment, and test the impact on the overall Netflix service experience.

In 2011, Netflix released a toolset called “The Simian Army”, which added more capabilities to Chaos Monkey, covering reliability, security, and resiliency (e.g., Chaos Kong, which simulates an entire AWS region going down).

In 2012, Chaos Monkey became an open-source project (under Apache 2.0 license).

In 2016, a company called Gremlin released the first “Failure-as-a-Service” platform.

In 2017, the LitmusChaos project was announced, which provides chaos jobs in Kubernetes.

In 2019, Alibaba Cloud announced ChaosBlade, an open-source Chaos Engineering tool.

In 2020, Chaos Mesh 1.0 was announced as generally available, an open-source cloud-native chaos engineering platform.

In 2021, AWS announced the general availability of AWS Fault Injection Simulator, a fully managed service to run controlled experiments.

In 2021, Azure announced the public preview of Azure Chaos Studio.

What exactly is Chaos Engineering?

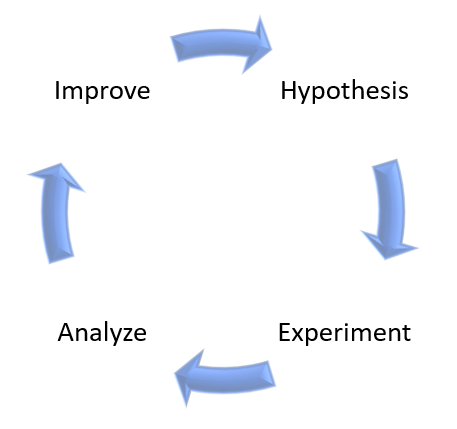

Chaos Engineering is about experimentation based on real-world hypotheses.

Think of Chaos Engineering as one of the tests you run as part of a CI/CD pipeline, but instead of a unit test or user acceptance test, you inject controlled faults into the system to measure its resiliency.

Chaos Engineering can be used for both modern cloud-native applications (built on top of Kubernetes) and legacy monoliths, to answer the same question: will my system or application survive a failure?

At a high level, Chaos Engineering consists of the following steps:

- Create a hypothesis

- Run an experiment

- Analyze the results

- Improve system resiliency
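
The four steps above can be sketched as a small experiment runner. Everything here is hypothetical and tool-agnostic: the `Experiment` class and `run_experiment` function only illustrate the flow of stating a hypothesis, injecting a fault, checking the steady state, and rolling the fault back.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Experiment:
    hypothesis: str                      # what we expect to remain true
    inject_fault: Callable[[], None]     # start the controlled failure
    remove_fault: Callable[[], None]     # roll the failure back
    steady_state: Callable[[], bool]     # measurable check of normal behavior


def run_experiment(experiment: Experiment) -> dict:
    """Run one experiment and report whether the hypothesis held."""
    # Never inject a fault into a system that is already unhealthy.
    if not experiment.steady_state():
        return {"hypothesis": experiment.hypothesis, "ran": False, "held": False}
    experiment.inject_fault()
    try:
        held = experiment.steady_state()
    finally:
        # Always roll back, even if the check itself raises.
        experiment.remove_fault()
    return {"hypothesis": experiment.hypothesis, "ran": True, "held": held}
```

A result with `held` set to `False` is the input for the last step: it points at the part of the system whose resiliency needs to improve before the experiment is repeated.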

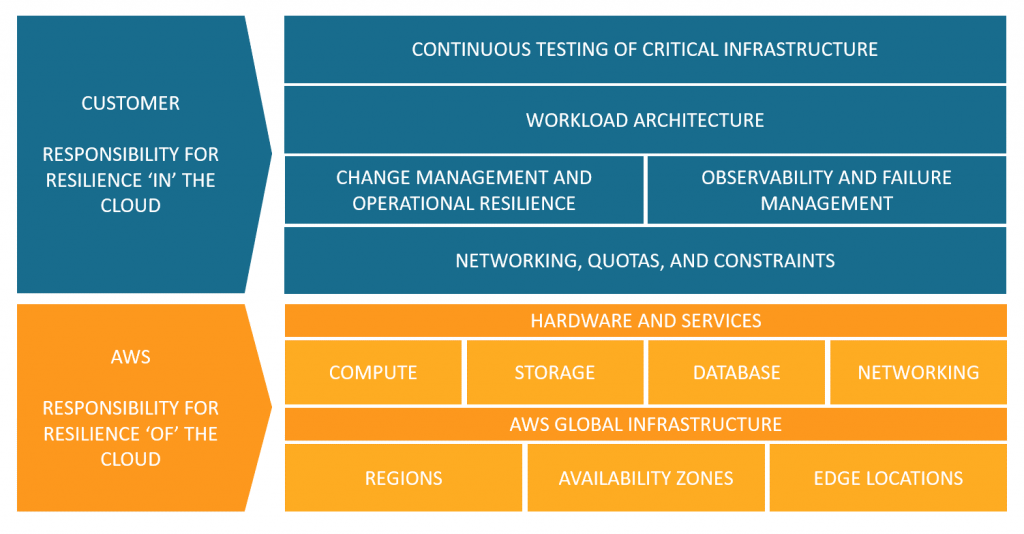

As an example, here is AWS’s point of view regarding the shared responsibility model, in the context of resiliency:

Source: https://aws.amazon.com/blogs/architecture/chaos-engineering-in-the-cloud

Chaos Engineering managed platform comparison

In the table below we can see a comparison between AWS and Azure-managed services for running Chaos Engineering experiments:

Summary

In this post, I have explained the concept of Chaos Engineering and compared cloud-managed services for running Chaos Engineering experiments.

Using Chaos Engineering as part of a regular development process will allow you to increase the resiliency of your applications, by studying the effect of failures and designing recovery processes.

Chaos Engineering can also be used as part of a disaster recovery and business continuity process, by testing the resiliency of your systems.

Additional References

- Chaos engineering (Wikipedia)

- Principles of Chaos Engineering

- Chaos Engineering in the Cloud

- What Chaos Engineering Is (and is not)

- AWS re:Invent 2022 – The evolution of chaos engineering at Netflix (NFX303)

- What is AWS Fault Injection Simulator?

- What is Azure Chaos Studio?

- Public Chaos Engineering Stories / Implementations