
Introduction to AI Code Generators

Over the past couple of years, we have seen countless examples of generative AI being used to improve many aspects of our lives.

Vendors, backed by strong community and developer support, keep introducing new services for almost every domain.

The two most famous examples are ChatGPT (an AI chatbot) and Midjourney (an image generator).

Wikipedia provides the following definition of generative AI:

“Generative artificial intelligence (also generative AI or GenAI) is artificial intelligence capable of generating text, images, or other media, using generative models. Generative AI models learn the patterns and structure of their input training data and then generate new data that have similar characteristics.”

Source: https://en.wikipedia.org/wiki/Generative_artificial_intelligence

In this blog post, I will compare some of the GenAI alternatives that assist developers in writing code.

What are AI Code Generators?

AI code generators are services that use AI/ML engines, integrate with the developer’s Integrated Development Environment (IDE), and provide code suggestions based on the programming language and the project’s context.

In most cases, AI code generators come as a plugin or an add-on to the developer’s IDE.

Mature AI code generators support multiple programming languages, integrate with most popular IDEs, and can provide valuable code samples by understanding both the context of the code and the cloud provider’s ecosystem.

AI Code Generators Terminology

Below are several terms worth knowing when using AI code generators:

- Suggestions – Code samples that the AI code generator produces as output

- Prompts – Collection of code and supporting contextual information

- User engagement data / Client-side telemetry – Events generated at the client IDE (error messages, latency, feature engagement, etc.)

- Code snippets – Lines of code created by the developer inside the IDE

- Code references – Code that originates from open-source projects or other external training data

AI Code Generators – Comparison of Alternatives

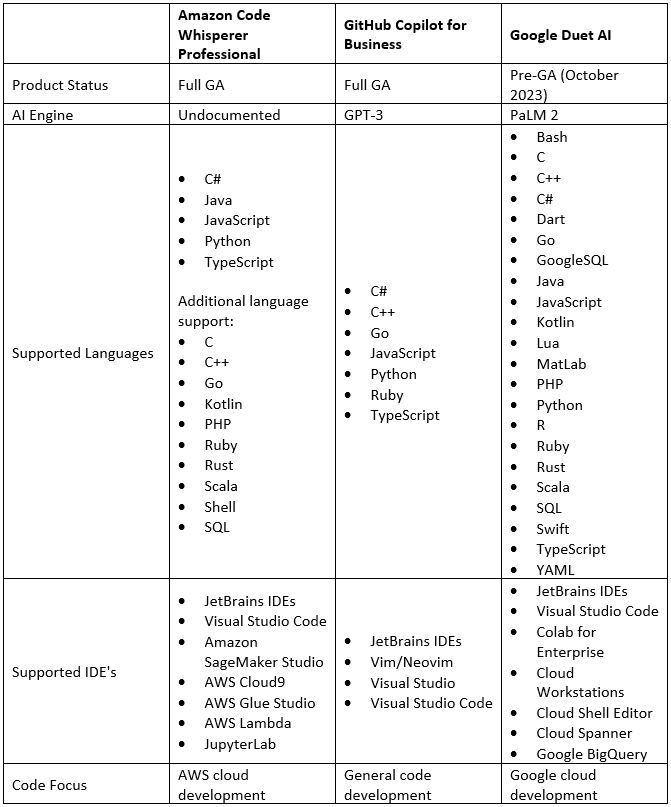

The table below provides a comparison between the alternatives the largest cloud providers offer their customers:

AI Code Generators – Security Aspects

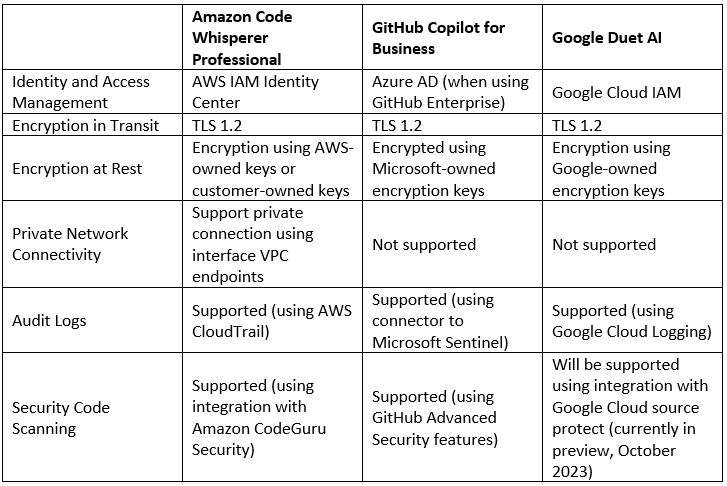

AI code generators can provide many benefits to developers, but we need to remember that they are still cloud-based services deployed in multi-tenant environments, and, as with any AI/ML service, the vendor aims to train its AI/ML engines to provide better answers.

Code may contain sensitive data, from static credentials (secrets, passwords, API keys) and hard-coded IP addresses or DNS names (used to access back-end or even internal services) to proprietary code that is part of the organization’s intellectual property.
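To make this risk more concrete, here is a minimal, hypothetical sketch (not part of any vendor’s tooling) that flags some of the kinds of sensitive values described above in a piece of source code, for example as a pre-commit check before that code is shared with a cloud-based service. The regular expressions and the names `PATTERNS`, `Finding`, and `scan` are illustrative assumptions; a real deployment would rely on a dedicated secret scanner or SAST tool with far richer rules.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately simplified detection rules (illustration only).
PATTERNS = {
    # Assignments such as password = "..." or api_key: '...'
    "hard-coded credential": re.compile(
        r"""(?i)\b(password|passwd|secret|api[_-]?key|token)\b\s*[:=]\s*["'][^"']+["']"""
    ),
    # AWS access key IDs start with "AKIA" followed by 16 upper-case letters/digits.
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Dotted-quad IPv4 addresses; the octet range is validated separately below.
    "IPv4 address": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


@dataclass
class Finding:
    line_no: int
    kind: str
    match: str


def _valid_ipv4(candidate: str) -> bool:
    # Reject look-alikes such as version strings with octets above 255.
    return all(0 <= int(octet) <= 255 for octet in candidate.split("."))


def scan(source: str) -> list[Finding]:
    """Return every suspicious value found in `source`, line by line."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        for kind, pattern in PATTERNS.items():
            for m in pattern.finditer(line):
                if kind == "IPv4 address" and not _valid_ipv4(m.group(0)):
                    continue
                findings.append(Finding(line_no, kind, m.group(0)))
    return findings


sample = '''
db_host = "10.0.12.7"
password = "hunter2"
aws_key = "AKIAABCDEFGHIJKLMNOP"
'''
for finding in scan(sample):
    print(finding.line_no, finding.kind, finding.match)
```

Pattern matching like this only catches well-known formats; values such as internal DNS names or proprietary algorithms need organization-specific rules, which is why the vendor’s data-handling documentation still matters.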

Before adopting AI code generators, thoroughly review the vendor’s documentation to understand what data (such as telemetry) is transferred from the developer’s IDE to the cloud, and how that data is protected at every layer.

The table at the top of this section compares the same alternatives from a security point of view.

Summary

In this blog post, we reviewed the AI code generator alternatives offered by AWS, Azure, and GCP.

Although these services offer many benefits, such as helping developers write code faster, they are still a work in progress.

Customers should perform a risk analysis before using these services and limit, as much as possible, the amount of data shared with the cloud providers, since all of these services run in multi-tenant environments.

As with any other code, it is recommended to embed security controls, such as Static Application Security Testing (SAST) tools, into the development process and to invest in security training for developers.

References

- What is Amazon CodeWhisperer?

https://docs.aws.amazon.com/codewhisperer/latest/userguide/what-is-cwspr.html

- GitHub Copilot documentation

https://docs.github.com/en/copilot

- Duet AI in Google Cloud overview