
Cloud Native Applications – Part 1: Introduction

In the past couple of years, there has been a buzz around cloud-native applications.

In this series of posts, I will review what exactly is considered a cloud-native application and how we can secure cloud-native applications.

Before speaking about cloud-native applications, we should ask ourselves – what is cloud native anyway?

The CNCF (Cloud Native Computing Foundation) provides the following definition:

“Cloud native technologies empower organizations to build and run scalable applications in modern, dynamic environments such as public, private, and hybrid clouds. Containers, service meshes, microservices, immutable infrastructure, and declarative APIs exemplify this approach.

These techniques enable loosely coupled systems that are resilient, manageable, and observable. Combined with robust automation, they allow engineers to make high-impact changes frequently and predictably with minimal toil.”

Source: https://github.com/cncf/toc/blob/main/DEFINITION.md

In other words, cloud native means taking full advantage of cloud capabilities: elasticity (scaling in and out according to workload demands), managed services (such as compute, database, and storage services), and modern design architectures based on microservices, APIs, and event-driven applications.

What are the key characteristics of cloud-native applications?

Use of modern design architecture

Modern applications are built on a loosely coupled architecture, which allows us to replace a single component of the application with minimal or even no downtime for the entire application.

Examples include updating the code of a component, or scaling a component in or out according to application load.

- RESTful APIs are suitable for communication between components when fast synchronous communication is required. We use API gateways as a managed service to expose APIs and control inbound traffic to the various components of our application.

Example of services:

- Amazon API Gateway

- Azure API Management

- Google API Gateway

- Oracle API Gateway

- Event-driven architecture is suitable for asynchronous communication. It uses events to trigger and communicate between various components of our application. In this architecture, one component produces/publishes an event (such as a file uploaded to object storage) and another component subscribes to/consumes the event (in a Pub/Sub model) and reacts to it (for example, reads the file content and streams it to a database). Because producers and consumers are decoupled, this type of architecture handles load very well.

Example of services:

- Amazon EventBridge

- Amazon Simple Queue Service (SQS)

- Azure Event Grid

- Azure Service Bus

- Google Eventarc

- Google Cloud Pub/Sub

- Oracle Cloud Infrastructure (OCI) Events Service
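The publish/subscribe flow described above can be sketched in a few lines of Python. This is a minimal in-process illustration, not how any of the managed services listed here work internally: the `EventBus` class, the `file.uploaded` event name, and the in-memory `database` list are all hypothetical stand-ins.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class EventBus:
    """Hypothetical in-process event bus; a managed service would replace it."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        """Register a consumer for one event type."""
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        """Deliver the event to every subscriber; return how many were notified."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


database: List[dict] = []  # stand-in for a real database


def store_uploaded_file(event: dict) -> None:
    # Consumer: reacts to the upload event by recording the file content.
    database.append({"key": event["key"], "content": event["content"]})


bus = EventBus()
bus.subscribe("file.uploaded", store_uploaded_file)

# Producer: publishes an event without knowing who consumes it.
notified = bus.publish("file.uploaded", {"key": "report.csv", "content": "a,b\n1,2"})
```

The producer only knows the event name, so consumers can be added or replaced without touching the producer's code.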

Additional References:

- AWS – What is API management?

- AWS – What is an Event-Driven Architecture?

- Azure – API Design

- Azure – Event-driven architecture style

- Google API design guide

- GCP – Event-driven architectures

- Oracle API For Developers

- Oracle Cloud – Modern App Development – Event-Driven

Use of microservices

Microservices represent the concept of distributed applications, and they enable us to decouple our applications into small independent components.

Components in a microservice architecture usually communicate using APIs (as previously mentioned in this post).

Each component can be deployed independently, which provides a huge benefit for code change and scalability.

Additional references:

- AWS – What are Microservices?

- Azure – Microservice architecture style

- GCP – What is Microservices Architecture?

- Oracle Cloud – Design a Microservices-Based Application

- The Twelve-Factor App

Use of containers

Modern applications are heavily built upon containerization technology.

Containers took virtual machines to the next level in the evolution of computing services.

They contain a small subset of the operating system – only the bare minimum binaries and libraries required to run an application.

Containers bring many benefits: the ability to run anywhere, a small footprint (for container images), isolation (in case of a container crash), fast deployment time, and more.

The most common orchestration and deployment platform for containers is Kubernetes, used by many software development teams and SaaS vendors, capable of handling thousands of containers in many production environments.

Example of services:

- Amazon Elastic Kubernetes Service (EKS)

- Azure Kubernetes Service (AKS)

- Google Kubernetes Engine (GKE)

- Oracle Container Engine for Kubernetes (OKE)

Additional References:

Use of Serverless / Function as a Service

More and more organizations are beginning to embrace serverless or function-as-a-service technology.

This is considered the latest evolution in computing services.

This technology allows us to write code and import it into a managed environment, where the cloud provider is responsible for the maintenance, availability, scalability, and security of the underlying infrastructure used to run our code.

Serverless / Function as a Service is a very good fit for event-driven applications (for example, an event written to a log file triggers a function to update a database record).

Functions can also be part of a microservice architecture, where some of the application components are based on serverless technology, to run specific tasks.
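As a rough sketch of the log-event example above, a function in this model is just a handler that receives an event and does one small piece of work. The `handler(event, context)` shape is a common convention, but the event fields (`record_id`, `status`) and the in-memory `records` dictionary are assumptions made purely for illustration.

```python
from typing import Any, Dict

# Stand-in for a database table; a real function would call a managed database.
records: Dict[str, Dict[str, Any]] = {}


def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    """Update one record in response to a single log event.

    The event fields ("record_id", "status") are hypothetical.
    """
    record_id = event["record_id"]
    record = records.setdefault(record_id, {"updates": 0})
    record["status"] = event["status"]
    record["updates"] += 1
    return {"record_id": record_id, "updates": record["updates"]}


# Simulate the platform invoking the function when the event arrives.
result = handler({"record_id": "order-42", "status": "shipped"})
```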

Example of services:

Additional References:

- Serverless on AWS

- Azure serverless

- Google Cloud Serverless computing

- Oracle Cloud – What is serverless?

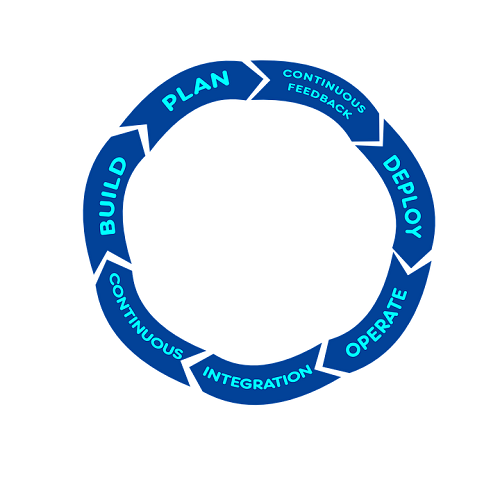

Use of DevOps processes

To support rapid application development and deployment, modern applications use CI/CD processes, which follow DevOps principles.

We use pipelines to automate the process of continuous integration and continuous delivery or deployment.

The process allows us to integrate multiple steps or gates, where in each step we can embed additional automated tests, such as static code analysis, functional tests, integration tests, and more.
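To make the idea of gated steps concrete, here is a minimal sketch of a pipeline runner that stops at the first failing gate. The stage names and the lambda checks are hypothetical; a real pipeline would invoke linters, test runners, and deployment tools instead.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order; stop at the first failing gate."""
    completed: List[str] = []
    for name, check in stages:
        if not check():
            return False, completed
        completed.append(name)
    return True, completed


# Hypothetical gates; each returns True when the step passes.
stages: List[Stage] = [
    ("static-analysis", lambda: True),
    ("functional-tests", lambda: True),
    ("integration-tests", lambda: False),  # simulated failure
    ("deploy", lambda: True),
]

ok, completed = run_pipeline(stages)
```

Because the integration tests fail in this example, the deploy stage never runs.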

Example of services:

Additional References:

Use of automated deployment processes

Modern application deployment takes advantage of automation using Infrastructure as Code.

Infrastructure as Code uses declarative scripting languages in order to deploy an entire infrastructure or application infrastructure stack in an automated way.

The fact that our code is stored in a central repository allows us to enforce authorization mechanisms, auditing of actions, and the ability to roll back to the previous version of our Infrastructure as Code.

Infrastructure as Code integrates perfectly with CI/CD processes, which enables us to re-use the knowledge we already gained from DevOps principles.
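The declarative idea can be illustrated with a small sketch: we describe the desired state, and a tool works out which actions are needed to get there. The `plan` function and the resource dictionaries below are hypothetical and much simpler than what real IaC tools do.

```python
from typing import Dict, List, Tuple

Resources = Dict[str, dict]


def plan(desired: Resources, current: Resources) -> List[Tuple[str, str]]:
    """Return the (action, resource_name) steps needed to reach the desired state."""
    steps: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in current:
            steps.append(("create", name))
        elif desired[name] != current[name]:
            steps.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            steps.append(("delete", name))
    return steps


# Desired state lives in source control; current state is what is deployed.
desired = {"web": {"size": "small", "count": 3}, "db": {"size": "large"}}
current = {"web": {"size": "small", "count": 2}, "cache": {"size": "small"}}
steps = plan(desired, current)
```

Rolling back then amounts to checking out the previous version of the desired state and planning again.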

Example of solutions:

Additional References:

- Automation as key to cloud adoption success

- AWS – Infrastructure as Code

- Azure – What is infrastructure as code (IaC)?

- Want a repeatable scale? Adopt infrastructure as code on GCP

- Oracle Cloud – What Is Infrastructure as Code (IaC)?

Summary

In this post, we have reviewed the key characteristics of cloud-native applications, and how we can take full advantage of the cloud when designing, building, and deploying modern applications.

I recommend you continue expanding your knowledge about cloud-native applications, whether you are a developer, IT team member, architect, or security professional.

Stay tuned for the next chapter of this series, where we will focus on securing cloud-native applications.

Additional references

Using immutable infrastructure to achieve cloud security

Maintaining cloud infrastructure, especially compute components, requires a lot of effort, from patch management to secure configuration and more.

Beyond the effort that maintenance takes, this approach simply does not scale.

Will we be able to support our workloads when we need to scale to thousands of machines at peak?

Immutable infrastructure is a deployment method where compute components (virtual machines, containers, etc.) are never updated – we simply replace a running component with a new one and decommission the old one.

Immutable infrastructure has its advantages, such as:

- No dependency on previous VM/container state

- No configuration drift

- A fast configuration management process

- Easy horizontal scaling

- Simple rollback/recovery process
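A minimal sketch of the "replace, never update" idea follows. The `Instance` and `replace_fleet` names are hypothetical, and a real deployment would call the cloud provider's APIs rather than build Python objects.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Instance:
    # frozen=True: an instance is never modified after creation
    instance_id: str
    image_version: str


def replace_fleet(fleet: List[Instance], new_version: str) -> List[Instance]:
    """Return a new fleet built from new_version; old instances are simply dropped."""
    return [
        Instance(instance_id=f"{new_version}-{index}", image_version=new_version)
        for index, _ in enumerate(fleet)
    ]


old_fleet = [Instance("v1-0", "v1"), Instance("v1-1", "v1")]
new_fleet = replace_fleet(old_fleet, "v2")

# Rollback is the same operation with the previous image version.
rolled_back = replace_fleet(new_fleet, "v1")
```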

The Twelve-Factor App

Designing modern or cloud-native applications requires us to follow 12 principles, documented at https://12factor.net

Looking at this guide, we see that factor number 3 (config) guides us to store configuration in environment variables, outside our code (or VMs/containers).
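As a small illustration of the config factor, the sketch below reads settings from environment variables instead of hard-coding them. The variable names `APP_DATABASE_URL` and `APP_LOG_LEVEL` and the example URL are assumptions, not part of the Twelve-Factor guide.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    database_url: str
    log_level: str


def load_settings(environ=os.environ) -> Settings:
    """Build settings from environment variables (names are hypothetical)."""
    return Settings(
        database_url=environ["APP_DATABASE_URL"],        # required: KeyError if missing
        log_level=environ.get("APP_LOG_LEVEL", "INFO"),  # optional, with a default
    )


# A plain dict stands in for the process environment in this example.
settings = load_settings({"APP_DATABASE_URL": "postgres://db.example.internal/app"})
```

The same image can then run unchanged in Dev, Test, and Prod, with only the environment differing.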

For further reading, see:

- The Twelve-Factor App – Config

- AWS – Applying the Twelve-Factor App Methodology to Serverless Applications

- Azure – The Twelve-Factor Application

- GCP – Twelve-factor app development on Google Cloud

https://cloud.google.com/architecture/twelve-factor-app-development-on-gcp#3_configuration

If we continue to follow the guidelines, factor number 6 (processes) guides us to create stateless processes, meaning, separating the execution environment and the data, and keeping all stateful or permanent data in an external service such as a database or object storage.

For further reading, see:

- The Twelve-Factor App – Processes

https://12factor.net/processes

How do we migrate to immutable infrastructure?

Build a golden image

Follow the cloud vendor’s documentation about how to download the latest VM image or container image (from a container registry), update security patches, binaries, and libraries to the latest version, customize the image to suit the application’s needs, and store the image in a central image repository.

It is essential to copy/install only necessary components inside the image and remove any unnecessary components – it will allow you to keep a minimal image size and decrease the attack surface.

It is recommended to sign your image when storing it in your private registry, so that anyone pulling it can verify that it was not changed and that it was created by a known source.
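The sign-then-verify principle can be sketched with the Python standard library. Note the assumption: production image signing normally relies on asymmetric keys and dedicated signing tooling, whereas this example uses an HMAC with a shared, hypothetical key only to show the idea.

```python
import hashlib
import hmac


def sign_image(image_bytes: bytes, signing_key: bytes) -> str:
    """Return an HMAC-SHA256 signature over the image content."""
    return hmac.new(signing_key, image_bytes, hashlib.sha256).hexdigest()


def verify_image(image_bytes: bytes, signing_key: bytes, signature: str) -> bool:
    """Check the signature using a constant-time comparison."""
    expected = sign_image(image_bytes, signing_key)
    return hmac.compare_digest(expected, signature)


key = b"demo-key-not-for-production"  # hypothetical; keep real keys in a secrets manager
image = b"golden-image-contents"      # stand-in for the real image bytes
signature = sign_image(image, key)
```

Verification fails if either the image content or the signing key differs from what was used at signing time.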

For further reading, see:

- Automate OS Image Build Pipelines with EC2 Image Builder

https://aws.amazon.com/blogs/aws/automate-os-image-build-pipelines-with-ec2-image-builder/

- Creating a container image for use on Amazon ECS

https://docs.aws.amazon.com/AmazonECS/latest/userguide/create-container-image.html

- Azure VM Image Builder overview

https://learn.microsoft.com/en-us/azure/virtual-machines/image-builder-overview

- Build and deploy container images in the cloud with Azure Container Registry Tasks

https://learn.microsoft.com/en-us/azure/container-registry/container-registry-tutorial-quick-task

- Create custom images

https://cloud.google.com/compute/docs/images/create-custom

- Building container images

https://cloud.google.com/build/docs/building/build-containers

Create deployment pipeline

Create a CI/CD pipeline to automate the following process:

- Check for new software/binaries/library versions against well-known and signed repositories

- Pull the latest image from your private image repository

- Update the image with the latest software and configuration changes in your image registry

- Run automated tests (unit tests, functional tests, acceptance tests, integration tests) to make sure the new build does not break the application

- Gradually deploy a new version of your VMs / containers and decommission old versions

For further reading, see:

- Create an image pipeline using the EC2 Image Builder console wizard

https://docs.aws.amazon.com/imagebuilder/latest/userguide/start-build-image-pipeline.html

- Create a container image pipeline using the EC2 Image Builder console wizard

https://docs.aws.amazon.com/imagebuilder/latest/userguide/start-build-container-pipeline.html

- Streamline your custom image-building process with the Azure VM Image Builder service

- Build a container image to deploy apps using Azure Pipelines

https://learn.microsoft.com/en-us/azure/devops/pipelines/ecosystems/containers/build-image

- Creating the secure image pipeline

https://cloud.google.com/software-supply-chain-security/docs/create-secure-image-pipeline

- Using the secure image pipeline

https://cloud.google.com/software-supply-chain-security/docs/use-image-pipeline

Continuous monitoring

Continuously monitor for compliance against your desired configuration settings, security best practices (such as CIS benchmark hardening settings), and well-known software vulnerabilities.

If any deviation from the above is detected, trigger an automated process that uses your previously created pipeline to replace the currently running images with the latest image version from your registry.
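A minimal sketch of this monitoring step is shown below: it flags running instances whose image is either outdated or on a list of known-vulnerable versions, so that the pipeline can replace them. The function name, instance IDs, and version strings are hypothetical.

```python
from typing import Dict, List


def find_noncompliant(running: Dict[str, str], approved_version: str,
                      vulnerable_versions: List[str]) -> List[str]:
    """Return instance IDs whose image is outdated or known to be vulnerable."""
    return sorted(
        instance_id
        for instance_id, version in running.items()
        if version != approved_version or version in vulnerable_versions
    )


# Mapping of instance ID to the image version it is running.
running = {"web-1": "1.4.0", "web-2": "1.5.0", "web-3": "1.3.2"}
to_replace = find_noncompliant(running, approved_version="1.5.0",
                               vulnerable_versions=["1.3.2"])
```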

For further reading, see:

- How to Set Up Continuous Golden AMI Vulnerability Assessments with Amazon Inspector

- Scanning Amazon ECR container images with Amazon Inspector

https://docs.aws.amazon.com/inspector/latest/user/enable-disable-scanning-ecr.html

- Manage virtual machine compliance

- Use Defender for Containers to scan your Azure Container Registry images for vulnerabilities

- Automatically scan container images for known vulnerabilities

https://cloud.google.com/kubernetes-engine/docs/how-to/security-posture-vulnerability-scanning

Summary

In this article, we have reviewed the concept of immutable infrastructure, its benefits, and the process for creating a secure, automated, and scalable solution for building immutable infrastructure in the cloud.

References

- The History of Pets vs Cattle and How to Use the Analogy Properly

https://cloudscaling.com/blog/cloud-computing/the-history-of-pets-vs-cattle/

- Deploy using immutable infrastructure

- Immutable infrastructure CI/CD using Jenkins and Terraform on Azure

- Automate your deployments

https://cloud.google.com/architecture/framework/operational-excellence/automate-your-deployments

Automation as key to cloud adoption success

After deploying several workloads in the public cloud, making mistakes, failing, fixing, and starting to use the cloud for production workloads, it is now time to think about the next step in cloud adoption.

To be able to fully embrace the benefits of the public cloud, the scale, the elasticity, and the short time it takes to deploy new resources, it is time to put automation in place.

Automation allows us to do the same tasks over and over again, deploying the same configuration to multiple environments (Dev, Test, Prod) and getting the same results, with no human errors (assuming you have tested your code…)

Automation can be achieved in various ways: using the CLI, using the cloud vendor’s SDK (in languages such as Python, Go, Java, and more), or using Infrastructure as Code (such as Terraform, AWS CloudFormation, Azure Resource Manager, and more).

In this article, we shall review some of the common alternatives for automation using code.

Why use code?

The clear benefit of using code for automation is the ability to have change management. Simply choose your favorite source control (such as GitHub, AWS CodeCommit, Azure Repos, and more) and upload your scripts. You then have the full version history of your code and know, at each stage, who made changes to it.

Another benefit of using code for automation is the fact that the Internet is full of samples you can find to automate (almost) anything in your cloud environment.

The downside of doing everything using code is the learning curve your organization’s IT or DevOps teams face when learning new languages, but once they pass this stage, you can have all the benefits of the scripting languages.

Automation – the AWS way

If AWS is your sole cloud provider, you should learn and start using the following built-in services or capabilities offered by AWS:

Infrastructure as Code

- AWS CloudFormation – The built-in IaC for deploying and managing AWS resources.

Reference: https://github.com/aws-cloudformation/aws-cloudformation-samples

- AWS Cloud Development Kit (AWS CDK) – Ability to define infrastructure in common programming languages such as Python, Java, .NET, and more, which is then synthesized into CloudFormation templates.

Reference: https://github.com/aws-samples/aws-cdk-examples

Policy as Code

- Service control policies (SCPs) – Managing permissions in AWS Organizations.

Reference: https://docs.aws.amazon.com/organizations/latest/userguide/orgs_manage_policies_scps_examples.html

CI/CD pipeline

- AWS CodePipeline – A fully managed continuous delivery service.

Reference: https://docs.aws.amazon.com/codepipeline/latest/userguide/tutorials.html

Containers and Kubernetes

- Amazon ECS – Container management service based on the AWS platform.

Reference: https://docs.aws.amazon.com/AmazonECS/latest/developerguide/example_task_definitions.html

- Amazon Elastic Kubernetes Service (EKS) – Managed Kubernetes service.

Reference: https://github.com/aws-quickstart/quickstart-amazon-eks

Automation – the Azure way

If Azure is your sole cloud provider, you should learn and start using the following built-in services or capabilities offered by Azure:

Infrastructure as Code

- Azure Resource Manager templates (ARM templates) – The built-in IaC for deploying and managing Azure resources.

Reference: https://github.com/Azure/azure-quickstart-templates

- Bicep – Declarative language for deploying Azure resources.

Reference: https://github.com/Azure/azure-docs-bicep-samples

Policy as Code

- Azure Policy – Enforce organizational standards across the Azure organization.

Reference: https://github.com/Azure/azure-policy

CI/CD pipeline

- Azure Pipelines – A fully managed continuous delivery service.

Reference: https://github.com/microsoft/azure-pipelines-yaml

Containers and Kubernetes

- Azure Container Instances – Container management service based on the Azure platform.

Reference: https://docs.microsoft.com/en-us/samples/browse/?products=azure&terms=container%2Binstance

- Azure Kubernetes Service (AKS) – Managed Kubernetes service.

Reference: https://github.com/Azure/AKS

Automation – the Google Cloud way

If GCP is your sole cloud provider, you should learn and start using the following built-in services or capabilities offered by GCP:

Infrastructure as Code

- Google Cloud Deployment Manager – The built-in IaC for deploying and managing GCP resources.

Reference: https://github.com/GoogleCloudPlatform/deploymentmanager-samples

Policy as Code

- Google Organization Policy Service – Programmatic control over the organization’s cloud resources.

CI/CD pipeline

- Google Cloud Build – A fully managed continuous delivery service.

Reference: https://github.com/GoogleCloudPlatform/cloud-build-samples

Containers and Kubernetes

- Google Kubernetes Engine (GKE) – Managed Kubernetes service.

Reference: https://github.com/GoogleCloudPlatform/kubernetes-engine-samples

Automation – the cloud agnostic way

If you plan for the future, plan for multi-cloud. Look for solutions that are capable of connecting to multiple cloud environments. This shortens the learning curve for your DevOps team, who would otherwise need to learn several scripting languages, and lets them deploy workloads on several cloud environments.

Infrastructure as Code

- HashiCorp Terraform – The most widely used IaC for deploying and managing resources both in the cloud and on-premises.

Reference: https://registry.terraform.io/browse/providers

Policy as Code

- HashiCorp Sentinel – Policy as code framework that complements Terraform code.

Reference: https://www.terraform.io/cloud-docs/sentinel/examples

CI/CD pipeline

- Jenkins – The most widely used open-source CI/CD tool.

Reference: https://www.jenkins.io/doc/pipeline/examples/

Containers and Kubernetes

- Docker – The most widely used container run-time for deploying applications.

Reference: https://github.com/dockersamples

- Kubernetes – The most widely used container orchestration open-source platform.

Reference: https://github.com/kubernetes/examples

Summary

In this post, I have reviewed the most common solutions that allow you to automate your workloads’ deployment, management, and maintenance using various scripting languages.

Some of the solutions are bound to a specific cloud provider, while others are considered cloud agnostic.

Use automation to fully embrace the power and benefits of the public cloud.

If you don’t have experience writing code, take the time to learn. The more you practice, the more experience you will gain.

As Werner Vogels, the Amazon CTO, always says – “Go Build”.

Why not just have DevOps without the Sec?

If you don’t include security testing, risk assessments, and compliance evaluations as part of the entire software delivery or release pipeline, you’re putting your organization at risk. It goes beyond a failed release or a delay in getting a feature out to the market: you’ll be introducing vulnerabilities into production, bypassing compliance, and failing audits. All of these instances of security negligence could have penalties and fines associated with them.

Security in DevOps is a part of the natural evolution of DevOps

DevOps is not a technology but a cultural shift that organizations need to make. If we break down the name, DevOps comes down to developers and IT operations. Looking back at its history, it all started with developers who, as in agile, broke code down into smaller components. Then they moved on to the next constraint: “How can we deploy faster?” So we got really good at infrastructure as code and at deploying not just to our private cloud but to our public clouds as well. The third constraint was testing. So we started to introduce automated testing into the release process, which evolved into continuous testing as we started shifting testing earlier in the release process.

Security is a constraint if you think otherwise

As I said, it is a natural evolution, and now we are facing the fourth constraint: security. However, this constraint does not sit well with the existing DevOps practices of continuous integration and delivery. To fix things, it might take renaming DevOps to DevSecOps. DevOps teams are fine with it because security has always been the last step in the release process, and security teams don’t really step in until the code is ready to move into production. In DevOps terms, a delay of months or even weeks in the release process is synonymous with blasphemy. Introducing security to DevOps is not as simple as introducing testing was. The notion that “you move security ‘left’ and things will go right” will not work outright. It requires a change in mindset at the organizational level to get security working with developers. It requires us to reevaluate and come up with better technology to be able to introduce security into our existing DevOps pipelines.

Security is boring but ‘right’

Security people have traditionally been tool operators. Some security people might do scripting, but there’s a wide disconnect from the software engineering group. Developers tend to criticize security people: for them, it is easier to break something than it is to build it. As companies gain velocity and everyone becomes a software company, they’re all building things faster, and security is last in the pipeline because it doesn’t inherently provide any business value other than risk reduction. Therefore, security was left behind not just because DevOps was moving delivery pipelines too fast for security to catch up, but also because security teams took DevOps as something of a trend in line with agile.

Eventually, it comes down to business value

Ultimately, security became a part of DevOps after organizations recognized that it was a legitimate movement that provides business value. We finally realized that conveying and communicating risk reduction must be done at every part of the pipeline, whatever your software development cycle looks like. From waterfall to continuous integration, whatever you have in place, security should be part of it. Whether it appears in the name or only in how we talk about it is, I think, more of a gimmick. Sliding security into DevOps is a start, but there’s a lot of cultural work behind that.

Modern DevSecOps schemes are about the right approach

The fundamentals of modern DevSecOps schemes rely on both processes and automation. By processes I mean that there’s a big gap today between the security teams and the development teams in a DevOps process. To be frank, developers don’t really like fixing security issues. Eventually, the security teams take on the issues and raise an alert, but when it comes to communicating these issues and remediating them, they need the cooperation of the development teams. When they have a DevSecOps team that promotes collaboration, they understand how developers think and work, and can automate the whole process. That’s the key to a successful relationship between the security teams and the development teams.

If you look at the number of people in those teams, you will find hundreds or thousands of developers, probably a dozen or so DevOps people, and a couple of security people. In a nutshell, there is no shortcut to introducing security into DevOps. You must automate and have the right tools in place to communicate and close the loop on resolving application security issues.

There are two approaches to introducing security into DevOps. One approach is to put developers into security teams, so that the security teams learn how developers work and how they want security issues communicated to them and resolved.

Another approach is to put security analysts into dev teams in order to help developers improve the way they think about security and the way they develop their applications. Regardless of the approach, the goal is to close the gaps in automation and communication by reducing friction between the dev and security teams.

DevSecOps is, after all, a mindset that closely follows your culture

The purpose and intent of the word “DevSecOps” is a sort of mindset in which an agile team is responsible for all aspects, from design and development to operations and security. This achieves speed and scale without sacrificing the safety of the code. Traditionally, a system is designed and implemented, and defects are identified by security staff just before release. With agile practices, it is important to inject security and operational details as early as possible in the development cycle.

“DevOps is the practice of operations and development engineers participating together in the entire service lifecycle, from design through the development process to production support.”

“DevOps is also characterized by operation staff using many of the same techniques as developers use for their system works.”

Integrate security aspects in a DevOps process

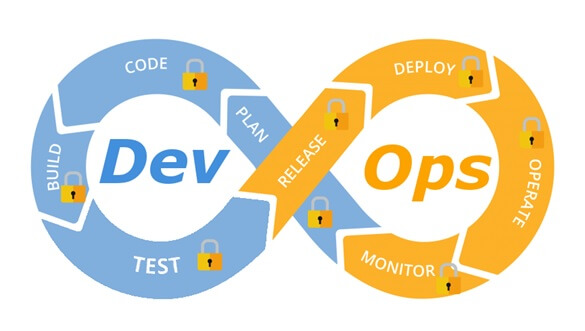

A diagram of a common DevOps lifecycle:

The DevOps world is meant to provide a complementary solution for both rapid development (such as Agile) and cloud environments, where IT personnel become an integral part of the development process. In the DevOps world, managing a large number of development environments manually is practically infeasible. Monitoring mixed environments becomes complex, and deploying a large number of different builds becomes extremely fast and sensitive to changes.

The idea behind any DevOps solution is to provide a solution for deploying an entire CI/CD process, which means supporting constant changes and immediate deployment of builds/versions.

For the security department, this kind of process looks at first like a nightmare – dozens of builds, partial tests, no human control over any change, etc.

For this reason, it is crucial for the security department to embrace the DevOps attitude, which means embedding security in every part of the development lifecycle, software deployment, or environment change.

It is important to understand that there are no fixed stages as we used to have in the waterfall development lifecycle, and most of the stages run in parallel. In the CI/CD world everything changes quickly and components can be part of different stages, so it is important to align the processes, methods, and tools across all development and DevOps teams.

In order to better understand how to embed security into the DevOps lifecycle, we need to review the different stages in the development lifecycle:

Planning phase

This stage in the development process is about gathering business requirements.

At this stage, it is important to embed the following aspects:

- Gather information security requirements (such as authentication, authorization, auditing, encryption, etc.)

- Conduct threat modeling in order to detect possible code weaknesses

- Training / awareness programs for developers and DevOps personnel about secure coding

Creation / Code writing phase

This stage in the development process is about the code writing itself.

At this stage, it is important to embed the following aspects:

- Connect the development environments (IDE) to static code analysis products

- Have the solution architecture reviewed by a security expert, or by a security champion acting on their behalf

- Review open source components embedded inside the code

Verification / Testing phase

This stage in the development process is about testing, conducted mostly by QA personnel.

At this stage, it is important to embed the following aspects:

- Run SAST (static application security testing) tools on the code itself (pre-compile stage)

- Run DAST (dynamic application security testing) tools against the running application (post-compile stage)

- Run IAST (interactive application security testing) tools against the application itself

- Run SCA (software composition analysis) tools in order to detect known vulnerabilities in open source components or 3rd party components

Software packaging and pre-production phase

This stage in the development process is about packaging the developed code before the deployment/distribution phase.

At this stage, it is important to embed the following aspects:

- Run IAST (interactive application security testing) tools against the application itself

- Run fuzzing tools in order to detect buffer overflow vulnerabilities – this can be done automatically as part of the build environment by embedding security tests for functional testing / negative testing

- Perform code signing to detect future changes (such as malware)
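The fuzzing step above can be sketched as a small loop that throws random inputs at a function and records unexpected failures. The assumptions here are that the target is the toy parser `parse_length_prefixed` (hypothetical) and that it is written in Python, where flaws surface as unexpected exceptions rather than memory corruption. Dedicated fuzzers are far more sophisticated than this.

```python
import random
from typing import Callable, List


def parse_length_prefixed(data: bytes) -> bytes:
    """Toy parser under test: the first byte is the payload length."""
    if not data:
        raise ValueError("empty input")
    length = data[0]
    if length > len(data) - 1:
        raise ValueError("declared length exceeds payload")
    return data[1:1 + length]


def fuzz(target: Callable[[bytes], object], iterations: int, seed: int = 0) -> List[bytes]:
    """Feed random inputs to target; collect inputs that raise anything but ValueError."""
    rng = random.Random(seed)  # seeded so that a failing run can be reproduced
    crashes: List[bytes] = []
    for _ in range(iterations):
        data = bytes(rng.randrange(256) for _ in range(rng.randrange(0, 16)))
        try:
            target(data)
        except ValueError:
            pass  # expected rejection of malformed input
        except Exception:
            crashes.append(data)  # unexpected failure worth investigating
    return crashes


crashes = fuzz(parse_length_prefixed, iterations=1000)
```

Running such a loop as a build step turns negative testing into an automated gate: a non-empty `crashes` list fails the build.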

Software packaging release phase

This stage is between the packaging and deployment stages.

At this stage, it is important to embed the following aspects:

- Compare code signature with the original signature from the software packaging stage

- Conduct integrity checks to the software package

- Deploy the software package to a development environment and conduct automated or stress tests

- Deploy the software package in a blue/green methodology for software quality and further security quality tests
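The integrity check in this phase can be sketched as comparing a digest recorded at packaging time with a digest computed at release time. The package bytes and function names below are hypothetical, and a plain digest only detects modification. Proving who built the package needs a real signature.

```python
import hashlib


def package_digest(package: bytes) -> str:
    """SHA-256 digest of the package content, as a hex string."""
    return hashlib.sha256(package).hexdigest()


def is_unmodified(package: bytes, recorded_digest: str) -> bool:
    """True when the package at release time matches the recorded digest."""
    return package_digest(package) == recorded_digest


packaged = b"app-1.0.0 contents"     # stand-in for the packaged artifact
recorded = package_digest(packaged)  # stored alongside the artifact at packaging time
```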

Software deployment phase

At this stage, the software package (such as mobile application code, a Docker container, etc.) moves to the deployment stage.

At this stage, it is important to embed the following aspects:

- Review permissions on destination folder (in case of code deployment for web servers)

- Review permissions for Docker registry

- Review permissions for further services in a cloud environment (such as storage, database, application, etc.) and fine-tune the service role for running the code

Configure / operate / Tune phase

At this stage, the software is in production and undergoes modifications (according to business requirements) and ongoing maintenance.

At this stage, it is important to embed the following aspects:

- Patch management processes or configuration management processes using tools such as Chef, Ansible, etc.

- Scanning process for detecting vulnerabilities using vulnerability assessment tools

- Deleting vulnerable environments and re-deploying them as up-to-date environments (if possible)
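
As a rough illustration of the scanning step, the sketch below compares installed component versions against a minimum patched version. Everything in it is an assumption made for the example: the package names and versions are hypothetical, versions are assumed to be plain dotted numbers, and a real vulnerability assessment tool draws on maintained advisory feeds rather than a hand-written table.

```python
def parse_version(version: str) -> tuple:
    """Turn '1.2.10' into (1, 2, 10) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def find_outdated(installed: dict, minimum_safe: dict) -> dict:
    """Return {name: (installed, required)} for packages below the safe floor."""
    outdated = {}
    for name, required in minimum_safe.items():
        current = installed.get(name)
        if current is None:
            continue  # component is not installed, nothing to patch
        if parse_version(current) < parse_version(required):
            outdated[name] = (current, required)
    return outdated
```

Environments that appear in the result are candidates for patching or, where possible, for deletion and re-deployment from an up-to-date image.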

On-going monitoring phase

At this stage, constant application monitoring is being conducted by the infrastructure or monitoring teams.

At this stage, it is important to embed the following aspects:

- Run RASP (Runtime Application Self-Protection) tools

- Implement defense at the application layer using WAF (Web application firewall) products

- Implement products for defending the application from Botnet attacks

- Implement products for defending the application from DoS / DDoS attacks

- Conduct penetration testing

- Implement a monitoring solution that uses automated rules, such as automatically reverting changes to sensitive settings (tools such as GuardRails)
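
The idea of an automated rule that reverts sensitive changes can be sketched as follows. This is not how any particular product works; the setting names are hypothetical, and the configuration is modeled as a plain dictionary purely for illustration.

```python
def detect_drift(baseline: dict, current: dict) -> dict:
    """Return {key: (expected, actual)} for settings that differ from baseline."""
    drift = {}
    for key, expected in baseline.items():
        actual = current.get(key)
        if actual != expected:
            drift[key] = (expected, actual)
    return drift


def remediate(baseline: dict, current: dict, protected_keys: set):
    """Revert only the protected settings; report which ones were reverted."""
    repaired = dict(current)  # work on a copy, leave the input untouched
    reverted = []
    for key in detect_drift(baseline, current):
        if key in protected_keys:
            repaired[key] = baseline[key]
            reverted.append(key)
    return repaired, sorted(reverted)
```

Limiting automatic reverts to an explicit set of protected settings keeps routine changes from being undone, while any remaining drift can still be raised as an alert.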

Security recommendations for developments based on CI/CD / DevOps process

- It is highly recommended to perform on-going training for the development and DevOps teams on security aspects and secure development

- It is highly recommended to nominate a security champion among the development and DevOps teams, so that threat modeling is conducted at early stages and security aspects are embedded as soon as possible in the development lifecycle

- Use automated tools for deploying environments in a simple and standard form. Tools such as Puppet require root privileges for the folders they have access to. In order to lower the risk, it is recommended to enable folder access auditing.

- Avoid storing passwords and access keys hard-coded inside scripts and code.

- It is highly recommended to store credentials (SSH keys, privileged credentials, API keys, etc.) in a vault (solutions such as HashiCorp Vault or CyberArk).

- It is highly recommended to limit privileged access based on role (role-based access control), following the principle of least privilege.

- It is recommended to perform network separation between production environment and Dev/Test environments.

- Restrict all developer teams’ access to production environments, and allow only DevOps team’s access to production environments.

- Enable auditing and access control for all development environments and identify anomalous access attempts (such as a developer's attempt to access a production environment)

- Make sure sensitive data (such as customer data, credentials, etc.) doesn’t pass in clear text in transit. In case there is a business requirement for passing sensitive data in transit, make sure the data is passed over encrypted protocols (such as SSH v2, TLS 1.2, etc.), while using strong cipher suites.

- It is recommended to follow OWASP organization recommendations (such as OWASP Top10, OWASP ASVS, etc.)

- When using containers, it is recommended to use well-known and signed repositories.

- When using containers, it is recommended not to blindly trust open source libraries inside the containers, and to scan them for vulnerable versions (including dependencies) during the build creation process.

- When using containers, it is recommended to perform hardening using guidelines such as CIS Docker Benchmark or CIS Kubernetes Benchmark.

- It is recommended to deploy automated tools for on-going tasks, starting from build deployments, code review for detecting vulnerabilities in the code and open source code, and patch management processes that will be embedded inside the development and build process.

- It is recommended to perform scanning to detect security weaknesses, using vulnerability management tools during the entire system lifetime.

- It is recommended to deploy configuration management tools in order to detect and automatically remediate deviations from the original configuration.
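
One of the recommendations above, avoiding hard-coded passwords and access keys, lends itself to an automated check during the build. The sketch below is a minimal example under stated assumptions: the three patterns are illustrative rather than exhaustive, the names are invented for the example, and a dedicated secret-scanning tool would cover far more cases with fewer false positives.

```python
import re

# Illustrative patterns only; real scanners ship much larger rule sets.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_password": re.compile(
        r"(?i)\b(password|passwd|secret|api_key)\b\s*[:=]\s*['\"][^'\"]+['\"]"
    ),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def scan_text(text: str) -> list:
    """Return (line_number, pattern_name) for every suspicious line."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```

A line that reads a credential from the environment or from a vault client does not match these patterns, so a non-empty result points at values that should be moved into the vault.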

This article was written by Eyal Estrin, cloud security architect, and Vitaly Unic, application security architect.