Archive for the ‘Cloud computing’ Category

Modern cloud virtualization

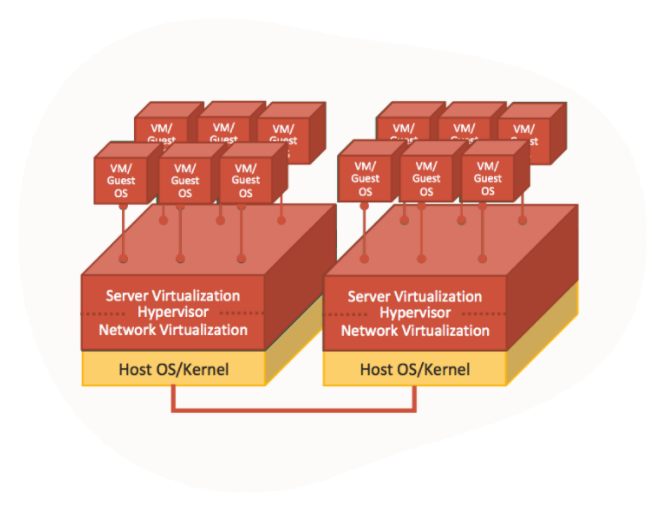

When we think about compute resources (AKA virtual machines) in the public cloud, most of us have the same picture in our head: an operating system running on top of a hypervisor, which in turn is deployed on physical hardware.

Most public cloud providers build their infrastructure based on the same architecture.

In this post we will review traditional virtualization, and then explain the benefits of modern cloud virtualization.

Introduction to hypervisors and virtualization technology

The idea behind virtualization is the ability to deploy multiple operating systems, on the same physical hardware, and still allow each operating system access to the CPU, memory, storage, and network resources.

To allow the virtual operating systems (AKA “Guest machines”) access to the physical resources, we use a component called a “hypervisor”.

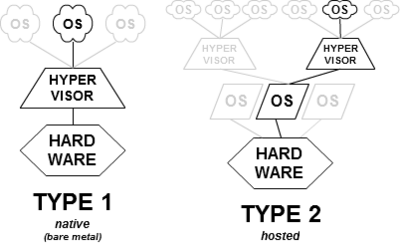

There are two types of hypervisors:

- Type 1 hypervisor – an operating system deployed directly on physical hardware (a “bare metal” machine) that gives guest machines access to the hardware resources.

- Type 2 hypervisor – software within an operating system (AKA “Host operating system”) deployed on physical hardware. The guest machines are installed above the host operating system. The host operating system hypervisor allows guest machines access to the underlying physical resources.

The main drawbacks of current hypervisors:

- There is no full isolation between multiple guest VMs deployed on the same hypervisor and the same host machine. All the network passes through the same physical NIC and same hypervisor network virtualization.

- The more layers we add (with either type 1 or type 2 hypervisors), the more overhead we place on the host operating system and host hypervisor. This means the guest VMs will not be able to take full advantage of the underlying hardware.

AWS Nitro System

In 2017 AWS introduced their latest generation of hypervisors.

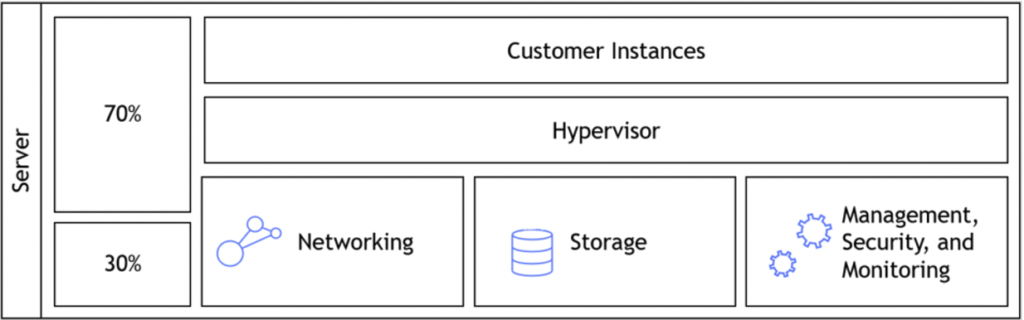

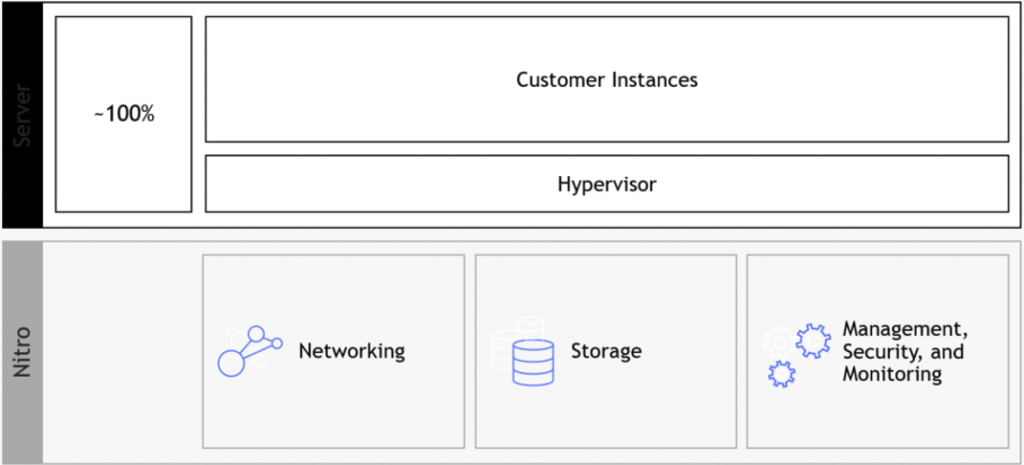

The Nitro architecture, underneath the EC2 instances, made a dramatic change to the way we use hypervisors by offloading virtualization functions (such as network, storage, security, etc.) to dedicated software and hardware chips. This allows the customer to get much better performance, with much better security and isolation of customers’ data.

Hypervisor prior to AWS Nitro:

Hypervisor based on AWS Nitro:

The Nitro architecture is based on Nitro cards:

- Nitro card for VPC – handles network connectivity to the customer’s VPC, and fast network connectivity using ENA (Elastic Network Adapter) controller

- Nitro card for EBS – allows access to the Elastic Block Store service

- Nitro card for instance storage – allows access to the local disk storage

- Nitro security chip – provides hardware-based root of trust

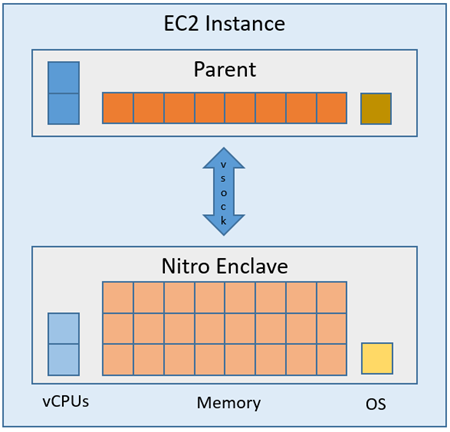

In 2020, AWS introduced AWS Nitro Enclaves that allow customers to create isolated environments to protect customers’ sensitive data and reduce the attack surface.

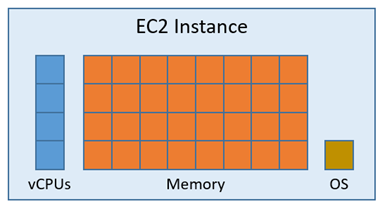

EC2 instance prior to AWS Nitro Enclaves:

EC2 instance with AWS Nitro Enclaves enabled:

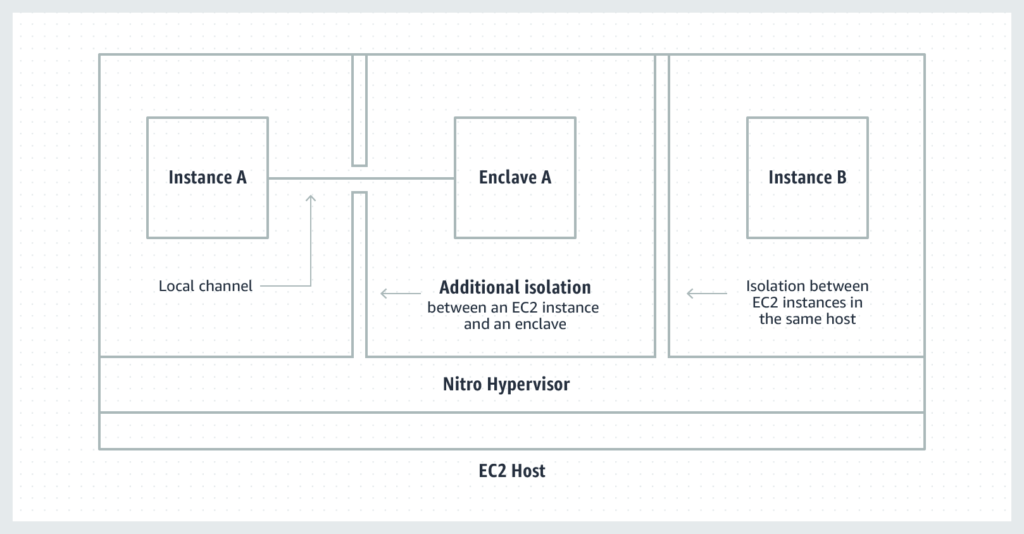

The diagram below shows two EC2 instances on the same EC2 host. One of the EC2 instances has Nitro Enclaves enabled:

Additional references:

- AWS Nitro System

https://aws.amazon.com/ec2/nitro/

- Reinventing virtualization with the AWS Nitro System

https://www.allthingsdistributed.com/2020/09/reinventing-virtualization-with-aws-nitro.html

- AWS Nitro – What Are AWS Nitro Instances, and Why Use Them?

https://www.metricly.com/aws-nitro/

- AWS Nitro Enclaves

https://aws.amazon.com/ec2/nitro/nitro-enclaves

- AWS Nitro Enclaves – Isolated EC2 Environments to Process Confidential Data

Oracle’s Generation 2 (GEN2) Cloud Infrastructure

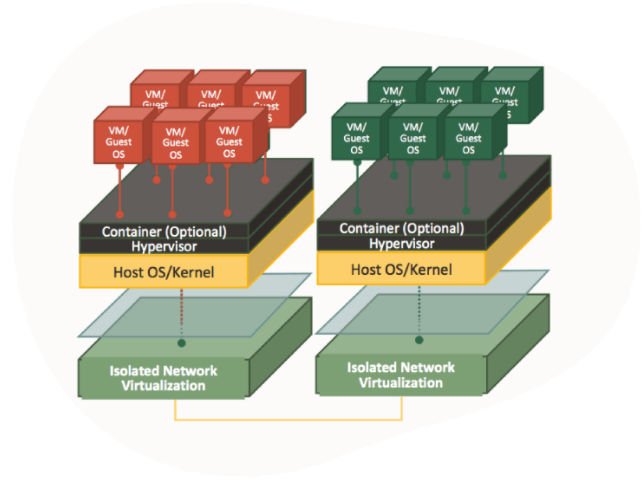

In 2018 Oracle introduced its second generation of cloud infrastructure.

Oracle’s Gen2 cloud offers isolated network virtualization, using custom-designed SmartNIC (a special software and hardware card) which offers customers the following advantages:

- Reduced attack surface

- Prevent lateral traversal between bare-metal, container or VM hosts

- Protection against Man-in-the-Middle attacks between hosts and guest VMs

- Protection against denial-of-service attacks against VM instances

First generation cloud hypervisors:

Oracle Second generation cloud hypervisor:

Oracle Cloud architecture differs from the rest of the public cloud providers in terms of CPU power.

In OCI, 1 OCPU (Oracle Compute Unit) = 1 physical core, while other cloud providers use Intel hyperthreading technology, which calculates 2 vCPU = 1 physical core.

As a result, customers get better performance from each OCPU they consume.
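The core-counting rule above can be sketched as simple arithmetic. This is a minimal illustration that assumes only the two ratios stated in the text (1 OCPU = 1 physical core, 2 vCPUs = 1 physical core); the function names are hypothetical and not part of any provider's SDK.

```python
# Ratios taken from the text above; everything else is illustrative.
VCPUS_PER_PHYSICAL_CORE = 2   # hyperthreaded vCPUs per physical core
OCPUS_PER_PHYSICAL_CORE = 1   # one OCPU maps to one physical core


def vcpus_to_physical_cores(vcpus: int) -> float:
    """Physical cores behind a given number of hyperthreaded vCPUs."""
    return vcpus / VCPUS_PER_PHYSICAL_CORE


def ocpus_to_equivalent_vcpus(ocpus: int) -> int:
    """vCPUs needed elsewhere to match the physical cores of `ocpus` OCPUs."""
    physical_cores = ocpus // OCPUS_PER_PHYSICAL_CORE
    return physical_cores * VCPUS_PER_PHYSICAL_CORE


print(vcpus_to_physical_cores(8))     # 8 vCPUs sit on 4 physical cores
print(ocpus_to_equivalent_vcpus(4))   # 4 OCPUs correspond to 8 vCPUs
```

When comparing instance sizes across providers, converting both sides to physical cores first avoids comparing a 4 OCPU shape with a 4 vCPU instance as if they were equal.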

Another characteristic that differentiates OCI architecture is no resource oversubscription, which means a customer will never share the same resource (CPU, memory, network) with another customer. This avoids a “noisy neighbor” scenario and allows the customer better and guaranteed performance.

Additional references:

- Oracle Cloud Infrastructure Security Architecture

https://www.oracle.com/a/ocom/docs/oracle-cloud-infrastructure-security-architecture.pdf

- Oracle Cloud Infrastructure — Isolated Network Virtualization

https://www.oracle.com/security/cloud-security/isolated-network-virtualization/

- What is a Gen 2 Cloud?

https://blogs.oracle.com/platformleader/what-is-a-gen-2-cloud

- Exploring Oracle’s Gen 2 Cloud Infrastructure Security Architectures: Isolated Network Virtualization

- Properly sizing workloads in the Oracle Government Cloud: Save costs and gain performance with OCPUs

The Future of Data Security Lies in the Cloud

We have recently read a lot of posts about the SolarWinds hack, a vulnerability in a popular monitoring software used by many organizations around the world.

This is a good example of a supply chain attack, which can happen to any organization.

We have seen similar scenarios over the past decade, from the Heartbleed bug, Meltdown and Spectre, Apache Struts, and more.

Organizations all around the world were affected by the SolarWinds hack, including the cybersecurity company FireEye, and Microsoft.

Events like these make organizations rethink their cybersecurity and data protection strategies and ask important questions.

Recent changes in the European data protection laws and regulations (such as Schrems II) are trying to limit data transfer between Europe and the US.

Should such security breaches occur? Absolutely not.

Should we live with the fact that such large organizations have been breached? Absolutely not!

Should organizations that have already invested a lot of resources in cloud migration move workloads back on premises? I don’t think so.

But no organization, not even major financial organizations like banks or insurance companies, or the largest multinational enterprises, has as much manpower, knowledge, and budget to invest in properly protecting its own data or its customers’ data as the hyperscale cloud providers do.

There are several reasons for this:

- Hyperscale cloud providers invest billions of dollars improving security controls, including dedicated and highly trained personnel.

- A breach of customers’ data held by a hyperscale cloud provider can drive that provider out of business, due to the loss of customers’ trust.

- Security is important to most organizations; however, it is not their main line of expertise.

Organizations need to focus on the core business that brings them value, like manufacturing, banking, healthcare, education, etc., and rethink how to obtain services that support their business goals, such as IT services, but do not add direct value.

Recommendations for managing security

Security Monitoring

Security best practices often state: “document everything”.

There are two downsides to this recommendation: One, storage capacity is limited and two, most organizations do not have enough trained manpower to review the logs and find the top incidents to handle.

Switching security monitoring to cloud-based managed systems, such as Azure Sentinel or Amazon GuardDuty, helps detect the important incidents and leaves the handling of huge log volumes to the service.
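To make the triage problem concrete, here is a minimal sketch of what "finding the top incidents" in a pile of logs means. It is not how Azure Sentinel or Amazon GuardDuty work internally; the `LogEvent` type, the 1 to 5 severity scale, and the sample events are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class LogEvent:
    source: str
    severity: int  # hypothetical scale: 1 (informational) to 5 (critical)
    message: str


def top_incidents(events, min_severity=4, limit=3):
    """Return the most frequent (source, message) pairs at or above min_severity."""
    counts = Counter(
        (e.source, e.message) for e in events if e.severity >= min_severity
    )
    return counts.most_common(limit)


# Hypothetical sample data.
events = [
    LogEvent("vpn", 4, "failed login"),
    LogEvent("web", 1, "page served"),
    LogEvent("vpn", 4, "failed login"),
    LogEvent("db", 5, "privilege escalation"),
    LogEvent("vpn", 4, "failed login"),
]

for (source, message), count in top_incidents(events):
    print(f"{source}: {message} x{count}")
```

Even this toy version shows why volume matters: the low-severity noise has to be stored and scanned before the handful of relevant events surfaces.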

Encryption

Another security best practice states: “encrypt everything”.

A few years ago, encryption was quite a challenge. Will the service/application support the encryption? Where do we store the encryption key? How do we manage key rotation?

In the past, only banks could afford HSM (Hardware Security Module) for storing encryption keys, due to the high cost.

Today, encryption is standard for most cloud services, backed by key management services such as AWS KMS, Azure Key Vault, Google Cloud KMS and Oracle Key Management.

Most cloud providers not only support encryption at rest, but also support customer-managed keys, which allow customers to generate their own encryption key for each service instead of using a key generated by the cloud provider.

Security Compliance

Most organizations struggle to handle security compliance over large environments on premise, not to mention large IaaS environments.

This issue can be solved by using managed compliance services such as AWS Security Hub, Azure Security Center, Google Security Command Center or Oracle Cloud Access Security Broker (CASB).

DDoS Protection

Any organization exposing services to the Internet (from a publicly facing website, through email or DNS services, to a VPN service) will eventually suffer from a volumetric denial-of-service attack.

Only large ISPs have enough bandwidth to handle such an attack before the border gateway (firewall, external router, etc.) crashes or stops handling incoming traffic.

The hyperscale cloud providers have infrastructure that can handle DDoS attacks against their customers, through services such as AWS Shield, Azure DDoS Protection, Google Cloud Armor or Oracle Layer 7 DDoS Mitigation.

Using SaaS Applications

In the past, organizations had to maintain their entire infrastructure, from messaging systems to CRM, ERP, etc.

They had to think about scale, resilience, security, and more.

Most breaches of cloud environments originate from misconfigurations at the customers’ side on IaaS / PaaS services.

Today, the preferred way is to consume managed services in SaaS form.

These are a few examples: Microsoft Office 365, Google Workspace (Formerly Google G Suite), Salesforce Sales Cloud, Oracle ERP Cloud, SAP HANA, etc.

Limit the Blast Radius

To limit the “blast radius” where an outage or security breach on one service affects other services, we need to re-architect infrastructure.

Switching from applications deployed inside virtual servers to modern development approaches, such as microservices based on containers, or building new applications based on serverless (or function as a service), will help organizations limit the attack surface and possible future breaches.

Examples of these services: Amazon ECS, Amazon EKS, Azure Kubernetes Service, Google Kubernetes Engine, Google Anthos, Oracle Container Engine for Kubernetes, AWS Lambda, Azure Functions, Google Cloud Functions, Google Cloud Run, Oracle Cloud Functions, etc.

Summary

The bottom line: organizations can improve their security posture by using the public cloud to better protect their data, use the expertise of cloud providers, and invest their time in their core business to maximize value.

Security breaches are inevitable. Shifting to cloud services does not shift an organization’s responsibility to secure its data. It simply helps the organization do it better.

Cloud Shell alternatives

What is cloud shell and what is it used for?

Cloud Shell is a browser-based shell, for running Linux commands, scripts, and command line tools, within a cloud environment, without having to install any tools on the local desktop. It contains ephemeral storage for saving configuration and installing software required for performing tasks. But we need to remember that the storage has a capacity limitation and eventually will be erased after a certain amount of idle time.

Cloud Shell Alternatives

| | AWS CloudShell | Azure Cloud Shell | Google Cloud Shell | Oracle Cloud Shell |
| --- | --- | --- | --- | --- |
| Operating system | Amazon Linux 2 | Ubuntu 16.04 LTS | Debian-based Linux | Oracle Linux |
| Shell interface | Bash, Z shell | Bash | Bash | Bash |
| Scripting interface | PowerShell | PowerShell | – | – |
| CLI tools installed | AWS CLI, Amazon ECS CLI, AWS SAM CLI | Azure CLI, Azure Functions CLI, Service Fabric CLI, Batch Shipyard | Google App Engine SDK, Google Cloud SDK | OCI CLI |
| Persistent storage for home directory | 1 GB | 5 GB | 5 GB | 5 GB |
| Idle session timeout | 20-30 minutes | 20 minutes | 20 minutes | 20 minutes |
| Maximum data retention | 120 days | – | 120 days | 60 days |
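The comparison above can also be captured as data and filtered by requirement. The sketch below copies a few values from the table; the dictionary layout and the `shells_matching` helper are hypothetical, and the values reflect the table as written rather than current provider limits.

```python
# Values copied from the comparison table above (they may be out of date).
CLOUD_SHELLS = {
    "AWS CloudShell": {
        "os": "Amazon Linux 2", "shells": ["Bash", "Z shell"],
        "powershell": True, "home_gb": 1,
    },
    "Azure Cloud Shell": {
        "os": "Ubuntu 16.04 LTS", "shells": ["Bash"],
        "powershell": True, "home_gb": 5,
    },
    "Google Cloud Shell": {
        "os": "Debian-based Linux", "shells": ["Bash"],
        "powershell": False, "home_gb": 5,
    },
    "Oracle Cloud Shell": {
        "os": "Oracle Linux", "shells": ["Bash"],
        "powershell": False, "home_gb": 5,
    },
}


def shells_matching(min_home_gb=0, need_powershell=False):
    """Names of cloud shells meeting the storage and PowerShell requirements."""
    return [
        name
        for name, spec in CLOUD_SHELLS.items()
        if spec["home_gb"] >= min_home_gb
        and (spec["powershell"] or not need_powershell)
    ]


print(shells_matching(min_home_gb=5))
print(shells_matching(min_home_gb=5, need_powershell=True))
```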

Additional references

- AWS CloudShell

https://aws.amazon.com/cloudshell/features/

- Limits and restrictions for AWS CloudShell

https://docs.aws.amazon.com/cloudshell/latest/userguide/limits.html

- Azure Cloud Shell

https://docs.microsoft.com/en-us/azure/cloud-shell/features

- Troubleshooting & Limitations of Azure Cloud Shell

https://docs.microsoft.com/en-us/azure/cloud-shell/troubleshooting

- Google Cloud Shell

https://cloud.google.com/shell/docs

- Limitations and restrictions of Google Cloud Shell

https://cloud.google.com/shell/docs/limitations

- Oracle Cloud Infrastructure (OCI) Cloud Shell

https://docs.oracle.com/en-us/iaas/Content/API/Concepts/cloudshellintro.htm

- OCI Cloud Shell Limitations

https://docs.oracle.com/en-us/iaas/Content/API/Concepts/cloudshellintro.htm#Cloud_Shell_Limitations

Importance of cloud strategy

Why do organizations need a cloud strategy and what are the benefits?

In this post, we will review some of the reasons for defining and committing an organizational cloud strategy to print, what topics should be included in such a document and how a cloud strategy enables organizations to manage risks involved in achieving secure and smart cloud usage to promote business goals.

Terminology

A cloud strategy document should include a clear definition of what is considered a cloud service, based on the NIST definition:

- On demand self-service – A consumer can unilaterally provision computing capabilities, such as server time and network storage, as needed automatically without requiring human interaction with each service provider

- Broad network access – Capabilities are available over the network and accessed through standard mechanisms that promote use by heterogeneous thin or thick client platforms (e.g., mobile phones, tablets, laptops, and workstations)

- Resource pooling – The provider’s computing resources are pooled to serve multiple consumers using a multi-tenant model, with different physical and virtual resources dynamically assigned and reassigned according to consumer demand. There is a sense of location independence in that the customer generally has no control or knowledge over the exact location of the provided resources but may be able to specify location at a higher level of abstraction (e.g., country, state, or datacenter). Examples of resources include storage, processing, memory, and network bandwidth

- Rapid elasticity – Capabilities can be elastically provisioned and released, in some cases automatically, to scale rapidly outward and inward commensurate with demand. To the consumer, the capabilities available for provisioning often appear to be unlimited and can be appropriated in any quantity at any time

- Measured service – Cloud systems automatically control and optimize resource use by leveraging a metering capability at some level of abstraction appropriate to the type of service (e.g., storage, processing, bandwidth, and active user accounts). Resource usage can be monitored, controlled, and reported, providing transparency for both the provider and consumer of the utilized service

The cloud strategy document should include a clear definition of what is not considered a cloud service – such as hosting services provided by hardware vendors (hosting service / hosting facility, Virtual Private Servers / VPS, etc.)

Business Requirements

The purpose of a cloud strategy document is to guide the organization in the various stages of using or migrating to cloud services, while balancing the benefits for the organization and conducting proper risk management at the same time.

Lack of a cloud strategy will result in various departments in the organization consuming cloud services for various reasons, such as increased productivity, but without an official policy on how to properly adopt the cloud services. New IT departments could be created (AKA “Shadow IT”), without any budget control, while increasing information security risks due to lack of guidance.

A cloud strategy document should include the following:

- The benefits for the organization as a result of using cloud services

- Definitions of which services will remain on premise and which services can be consumed as cloud services

- Approval process for consuming cloud services

- Risks resulting from using unapproved cloud services

- Required controls to minimize the risks of using cloud services (information security and privacy, cost management, resource availability, etc.)

- Current state (in terms of cloud usage)

- Desired state (where the organization is heading in the next couple of years in terms of cloud usage)

- Exit strategy

Benefits for the organization

A cloud strategy document should include possible benefits from using cloud services, such as:

- Cost savings

  - Switching to flexible payment – the customer pays for what they consume (on demand)

- Information security

  - Moving to cloud services shifts the burden of physical security to the cloud provider

  - Using cloud services allows better protection against denial-of-service attacks

  - Using cloud services allows access to managed security services (such as security monitoring, breach detection, anomaly and user behavior detection, etc.) available as part of the leading cloud providers’ portfolios

- Business continuity and disaster recovery

  - Cloud infrastructure services (IaaS) are a good alternative for deploying a DR site

- Infrastructure flexibility

  - Using cloud services allows scaling the number of resources (from Web servers to database clusters) out and in according to application load
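The scale-out / scale-in benefit listed last can be illustrated with a small proportional-scaling calculation. This is a sketch under stated assumptions, not any provider's autoscaling algorithm: the function name, the load metric (average utilization in percent), and the default instance limits are all hypothetical.

```python
import math


def desired_instance_count(current_instances, current_load_pct, target_load_pct,
                           min_instances=1, max_instances=10):
    """Scale the instance count proportionally so load moves toward the target.

    Example: 4 instances at 90% average load with a 60% target need
    ceil(4 * 90 / 60) = 6 instances.
    """
    if current_instances < 1 or target_load_pct <= 0:
        raise ValueError("need at least one instance and a positive target")
    raw = math.ceil(current_instances * current_load_pct / target_load_pct)
    # Clamp to the configured limits so a spike cannot scale without bound.
    return max(min_instances, min(max_instances, raw))


print(desired_instance_count(4, 90, 60))   # scale out
print(desired_instance_count(4, 30, 60))   # scale in
```

The upper limit doubles as a cost control: it caps how far a load spike (or an attack) can drive spending.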

Approval process for consuming cloud services

To formalize the use of cloud services for all departments of the organization, the cloud strategy document should define the approval process for using cloud services (according to the organization’s size and maturity level) and the roles that take part in it:

- CIO / CTO / IT Manager

- Legal counsel / DPO / Chief risk officer

- Purchase department / Finance

Risk Management

A cloud strategy document should include a mapping of risks in using cloud services, such as:

- Lack of budget control

  - The ability of each department to use credit card details to open an account in the public cloud and begin consuming services without budget control from the finance department

- Regulation and privacy aspects

  - Using cloud services for storing personal information (PII) without control by a DPO (or someone in charge of data protection aspects in the organization). This exposes the organization to both breach attempts and violation of privacy laws and regulations

- Information security aspects

  - Using cloud services accessible by Internet visitors exposes the organization to data breach, data corruption, deletion, service downtime, reputation damage, etc.

- Lack of knowledge

  - Use of cloud services requires proper training of IT, development, support, and information security teams on the proper usage of cloud services

Controls for minimizing the risks of cloud service usage

The best solution for minimizing the risks to the organization is to create a dedicated team (CCOE – Cloud Center of Excellence) with representatives of the following departments:

- Infrastructure

- Information security

- Legal

- Development

- Technical support

- Purchase department / FinOps

Current state

The cloud strategy document should map the following current state in terms of cloud service usage:

- Which SaaS applications are currently being consumed by the organization and for what purposes?

- Which IaaS / PaaS services are currently being consumed? (Dev / Test environments, etc.)

Desired state

The cloud strategy document should define where the organization is going in the next 2-5 years in terms of cloud service usage.

The document should answer these pivotal questions:

- Does the organization wish to continue to manage and maintain infrastructure on its own or migrate to managed services in the cloud?

- Should the organization deploy private cloud?

- Should the organization migrate all applications and infrastructure to the public cloud or perhaps a combination of on premise and public cloud (Hybrid cloud)?

And lastly, the strategy document should define KPIs for successful deployment of cloud services.

Exit strategy

A section should be included that addresses vendor lock-in risks and how to act if the organization chooses to migrate a system from the public cloud back on premise, or even migrate data between different public cloud providers for reasons such as cost, support, technological advantage, regulation, etc.

It is important to take extra care with the following topics when negotiating the contractual agreement with a public cloud provider:

- Is there a penalty if the organization decides to end the contract early?

- What is the process of exporting data from a SaaS application back to on premise (or between public cloud providers)?

- What is the public cloud provider’s commitment for data deletion at the end of the contractual agreement?

- How long is the cloud provider going to store organizational (and customer) data (including backup and logs) after the end of the contractual agreement?

Confidential Computing and the Public Cloud

What exactly is “confidential computing” and what are the reasons and benefits for using it in the public cloud environment?

Introduction to data encryption

To protect data stored in the cloud, we usually use one of the following methods:

· Encryption in transit – Data transferred over the public Internet can be encrypted using the TLS protocol. This prevents unwanted parties from eavesdropping on or tampering with the conversation.

· Encryption at rest – Data stored at rest, such as databases, object storage, etc., can be encrypted using symmetric encryption, which means using the same encryption key to encrypt and decrypt the data. This commonly uses the AES-256 algorithm.

When we wish to access encrypted data, we need to decrypt the data in the computer’s memory to access, read and update the data.

This is where confidential computing comes in: it tries to close the gap between data at rest and data in transit by protecting data while it is in use.
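The gap described above can be seen in a few lines of code. The sketch below is a toy symmetric cipher built only from the Python standard library (a SHAKE-256 keystream XORed with the data); it is a stand-in for AES-256, not AES itself, has no integrity protection, and must not be used to protect real data. It only illustrates that the same key encrypts and decrypts, and that the plaintext has to exist in memory to be used.

```python
import hashlib
import secrets

NONCE_LEN = 16


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Toy keystream: SHAKE-256 over key + nonce. Illustrative only, NOT AES.
    return hashlib.shake_256(key + nonce).digest(length)


def encrypt(key: bytes, plaintext: bytes) -> bytes:
    nonce = secrets.token_bytes(NONCE_LEN)
    stream = _keystream(key, nonce, len(plaintext))
    return nonce + bytes(p ^ s for p, s in zip(plaintext, stream))


def decrypt(key: bytes, blob: bytes) -> bytes:
    nonce, ciphertext = blob[:NONCE_LEN], blob[NONCE_LEN:]
    stream = _keystream(key, nonce, len(ciphertext))
    return bytes(c ^ s for c, s in zip(ciphertext, stream))


key = secrets.token_bytes(32)               # the same key is used in both directions
stored = encrypt(key, b"customer record")   # what sits on disk ("at rest")
in_memory = decrypt(key, stored)            # plaintext now lives in ordinary RAM
print(in_memory)
```

The last step is the exposure that a trusted execution environment is meant to address: once decrypted, the data is readable by anything with access to that memory.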

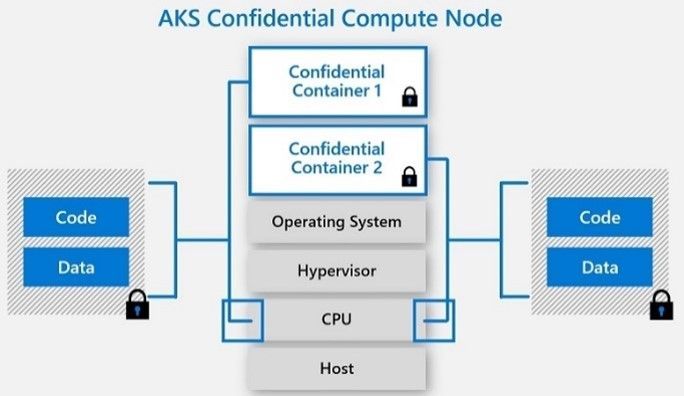

Confidential Computing uses hardware to isolate data. Data is encrypted in use by running it in a trusted execution environment (TEE).

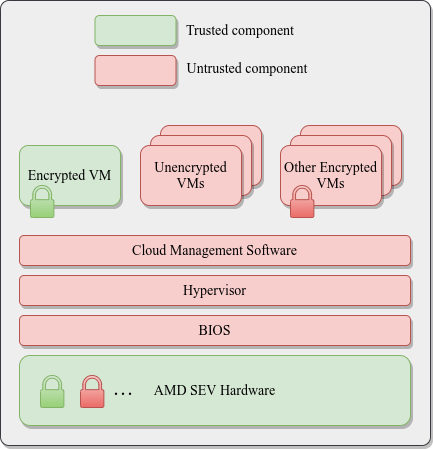

As of November 2020, confidential computing is supported by Intel Software Guard Extensions (SGX) and AMD Secure Encrypted Virtualization (SEV), based on AMD EPYC processors.

Comparison of the available options

| | Intel SGX | Intel SGX2 | AMD SEV 1 | AMD SEV 2 |
| --- | --- | --- | --- | --- |
| Purpose | Microservices and small workloads | Machine Learning and AI | Cloud and IaaS workloads (above the hypervisor), suitable for legacy applications or large workloads | Cloud and IaaS workloads (above the hypervisor), suitable for legacy applications or large workloads |
| Cloud VM support (November 2020) | – | | | |
| Cloud containers support (November 2020) | – | – | | |
| Operating system supported | Windows, Linux | Linux | Linux | Linux |
| Memory limitation | Up to 128 MB | Up to 1 TB | Up to available RAM | Up to available RAM |
| Software changes | Require software rewrite | Require software rewrite | Not required | – |
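The memory and software-change rows of the comparison lend themselves to a simple selection rule. The sketch below encodes only those two rows as they appear in the table; the `TeeOption` type and `candidate_tees` helper are hypothetical, and the "–" entry for AMD SEV 2 is treated as unknown rather than guessed.

```python
from dataclasses import dataclass
from typing import List, Optional

MB_PER_TB = 1024 * 1024


@dataclass(frozen=True)
class TeeOption:
    name: str
    max_memory_mb: Optional[int]       # None means "up to available RAM"
    requires_rewrite: Optional[bool]   # None means the table gives no value


# Values taken from the comparison table above.
TEE_OPTIONS = [
    TeeOption("Intel SGX", 128, True),
    TeeOption("Intel SGX2", MB_PER_TB, True),
    TeeOption("AMD SEV 1", None, False),
    TeeOption("AMD SEV 2", None, None),
]


def candidate_tees(memory_mb: int, can_rewrite: bool, host_ram_mb: int) -> List[str]:
    """Options whose memory limit fits the workload and whose rewrite need is acceptable."""
    names = []
    for opt in TEE_OPTIONS:
        limit = host_ram_mb if opt.max_memory_mb is None else opt.max_memory_mb
        if memory_mb > limit:
            continue
        # Conservative choice: an unknown rewrite requirement counts as "may need one".
        if opt.requires_rewrite is not False and not can_rewrite:
            continue
        names.append(opt.name)
    return names


print(candidate_tees(4096, can_rewrite=False, host_ram_mb=262144))
```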

Reference Architecture

AMD SEV Architecture:

Azure Kubernetes Service (AKS) Confidential Computing:

References

· Confidential Computing: Hardware-Based Trusted Execution for Applications and Data

· Google Cloud Confidential VMs vs Azure Confidential Computing

https://msandbu.org/google-cloud-confidential-vms-vs-azure-confidential-computing/

· A Comparison Study of Intel SGX and AMD Memory Encryption Technology

https://caslab.csl.yale.edu/workshops/hasp2018/HASP18_a9-mofrad_slides.pdf

· SGX-hardware list

https://github.com/ayeks/SGX-hardware

· Performance Analysis of Scientific Computing Workloads on Trusted Execution Environments

https://arxiv.org/pdf/2010.13216.pdf

· Helping Secure the Cloud with AMD EPYC Secure Encrypted Virtualization

https://developer.amd.com/wp-content/resources/HelpingSecuretheCloudwithAMDEPYCSEV.pdf

· Azure confidential computing

https://azure.microsoft.com/en-us/solutions/confidential-compute/

· Azure and Intel commit to delivering next generation confidential computing

· DCsv2-series VM now generally available from Azure confidential computing

· Confidential computing nodes on Azure Kubernetes Service (public preview)

https://docs.microsoft.com/en-us/azure/confidential-computing/confidential-nodes-aks-overview

· Expanding Google Cloud’s Confidential Computing portfolio

· A deeper dive into Confidential GKE Nodes — now available in preview

https://cloud.google.com/blog/products/identity-security/confidential-gke-nodes-now-available

· Using HashiCorp Vault with Google Confidential Computing

https://www.hashicorp.com/blog/using-hashicorp-vault-with-google-confidential-computing

· Confidential Computing is cool!

https://medium.com/google-cloud/confidential-computing-is-cool-1d715cf47683

· Data-in-use protection on IBM Cloud using Intel SGX

https://www.ibm.com/cloud/blog/data-use-protection-ibm-cloud-using-intel-sgx

· Why IBM believes Confidential Computing is the future of cloud security

· Alibaba Cloud Released Industry’s First Trusted and Virtualized Instance with Support for SGX 2.0 and TPM

Tips for Selecting a Public Cloud Provider

When an organization needs to select a public cloud service provider, there are several factors to take into consideration in order to choose the provider best suited to the organization’s needs.

In this post, we will review various considerations that will help organizations in the decision-making process.

Business goals

Before deciding to use a public cloud solution, or migrating existing environments to the cloud, it is important that organizations review their business goals. Explore what value the organization gets from maintaining existing systems on premise and what value migration to the cloud promises. In accordance with what you discover, decide which systems will be deployed in the cloud first, or which systems your organization will choose to use as managed services.

Review the lists of services offered in the cloud

Public cloud providers publish a list of services in various areas.

Review the list of current services and see how they stand up to your organization’s needs. This will help you narrow down the most suitable options.

Here are some examples of public cloud service catalogs:

· AWS — https://aws.amazon.com/products/

· Azure — https://azure.microsoft.com/en-us/services/

· GCP — https://cloud.google.com/products

· Oracle Cloud — https://www.oracle.com/cloud/products.html

· IBM — https://www.ibm.com/cloud/products

· Salesforce — https://www.salesforce.com/eu/products/

· SAP — https://www.sap.com/products.html

Centrally authenticating users against Active Directory in IaaS / PaaS environments

Many organizations manage access rights to various systems based on an organizational Active Directory.

Although it is possible to deploy Domain Controllers on virtual servers in an IaaS environment, or to create a federation between the on-premise and cloud environments, some cloud providers offer a managed Active Directory service based on the Kerberos protocol (the most common authentication protocol in on-premise environments), which might ease the migration to the public cloud.

Examples of managed Active Directory services:

· Azure Active Directory Domain Services

· Google Managed Service for Microsoft Active Directory

Understanding IaaS / PaaS pricing models

Public cloud providers publish pricing calculators and documentation on their service pricing models.

Understanding pricing models might be complex for some services. For this reason, it is highly recommended to contact an account manager, a partner, or a reseller for assistance.

Comparing similar services among different cloud providers will enable an organization to identify and choose the most suitable cloud provider based on the organization’s needs and budget.
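A like-for-like comparison can start with a small estimate before moving to the providers' own calculators. In the sketch below the provider names and every price are made up for illustration; only the structure (compute hours plus storage) reflects a common pricing shape, and 730 is the usual hours-per-month approximation (8,760 hours per year divided by 12).

```python
HOURS_PER_MONTH = 730  # 8,760 hours per year / 12 months


def monthly_cost(hourly_rate, instances, storage_gb, storage_rate_per_gb):
    """Rough monthly estimate: always-on compute plus provisioned storage."""
    compute = hourly_rate * HOURS_PER_MONTH * instances
    storage = storage_gb * storage_rate_per_gb
    return round(compute + storage, 2)


# Hypothetical prices, NOT real list prices of any provider.
offers = {
    "Provider A": {"hourly_rate": 0.10, "storage_rate_per_gb": 0.02},
    "Provider B": {"hourly_rate": 0.09, "storage_rate_per_gb": 0.05},
}

estimates = {
    name: monthly_cost(o["hourly_rate"], instances=2, storage_gb=500,
                       storage_rate_per_gb=o["storage_rate_per_gb"])
    for name, o in offers.items()
}
cheapest = min(estimates, key=estimates.get)
print(estimates, cheapest)
```

Note how the provider with the lower hourly rate ends up more expensive once storage is included; comparing a single line item is rarely enough.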

Examples of pricing calculators:

· AWS Simple Monthly Calculator

· Google Cloud Platform Pricing Calculator

Check if your country has a local region of one of the public cloud providers

Selecting one provider over a competitor may be easier if the provider has a local region in your country. This helps, for example, where there are limitations on transferring data outside a specific country’s borders (or between continents), or where network latency becomes an issue when transferring large data sets between local data centers and cloud environments.

This is relevant for all cloud service models (IaaS / PaaS / SaaS).

Examples of regional mapping:

· AWS:

AWS Regions and Availability Zones

· Azure and Office 365:

Where your Microsoft 365 customer data is stored

· Google Cloud Platform:

· Oracle Cloud:

Oracle Data Regions for Platform and Infrastructure Services

· Salesforce:

Where is my Salesforce instance located?

· SAP:

SAP Cloud Platform Regions and Service Portfolio

Service status reporting and outage history

Mature cloud providers transparently publish their service availability status in various regions around the world, including the outage history of their services.

These providers also know how to build stable and available infrastructure over the long term and across multiple geographic locations, as well as how to minimize the “blast radius” of an outage, which might otherwise affect many customers.

A thorough review of an outage history report gives organizations a good picture over an extended period and helps in the decision-making process.
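One way to turn an outage history into a comparable number is to convert the recorded downtime into an availability percentage. The sketch below assumes outage durations have been collected by hand from a provider's status history; the function name and the sample durations are hypothetical.

```python
def availability_pct(outage_minutes, period_days=365):
    """Availability over the period, given a list of outage durations in minutes."""
    total_minutes = period_days * 24 * 60
    downtime = sum(outage_minutes)
    if downtime > total_minutes:
        raise ValueError("downtime exceeds the length of the period")
    return 100.0 * (total_minutes - downtime) / total_minutes


# Hypothetical outages taken from a status page: 30, 45 and 12 minutes in one year.
print(round(availability_pct([30, 45, 12]), 3))
```

The result is only as good as the input: outages limited to one region or one service should be counted only if the organization actually depends on that region or service.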

Examples of cloud providers’ service status and outage history documentation:

· AWS:

· Azure:

· Google Cloud Platform:

Google Cloud Status Dashboard — Incidents Summary

· Oracle Cloud:

Oracle Cloud Infrastructure — Current Status

Oracle Cloud Infrastructure — Incident History

· Salesforce:

· SAP:

SAP Cloud Platform Status Page

Summary

As you can see, there are several important factors to take into consideration when selecting a specific cloud provider. We have covered some of the more common ones in this post.

For an organization to make an educated decision, it is recommended to check what brings value for the organization, in both the short and long-term. It is important to review cloud providers’ service catalogs, alongside a thorough review of global service availability, transparency, understanding pricing models and hybrid architecture that connects local data centers to the cloud.

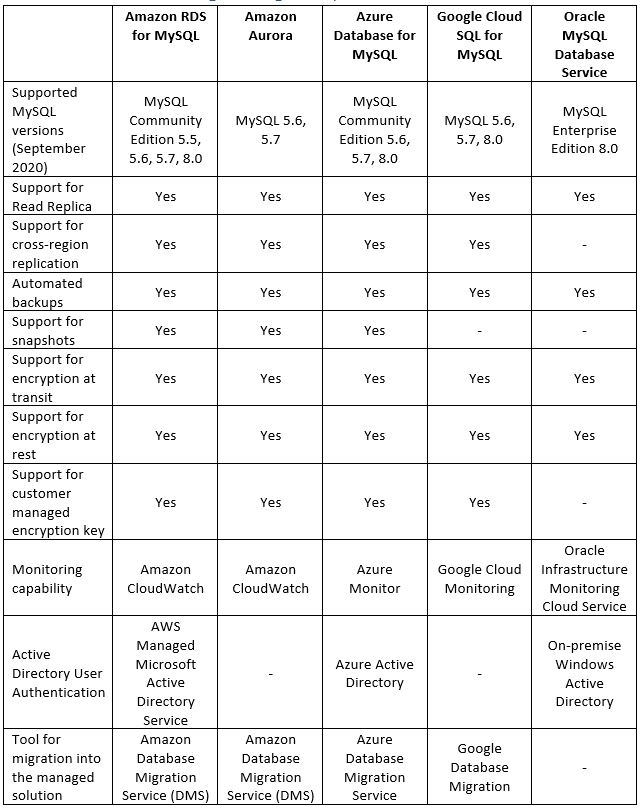

Running MySQL Managed Database in the Cloud

Today, more and more organizations are moving to the public cloud and choosing open source databases. They are choosing this for a variety of reasons, but license cost is one of the main ones.

In this post, we will review some of the common alternatives for running MySQL database inside a managed environment.

Legacy applications may be a reason for manually deploying and managing MySQL database.

Although it is possible to deploy a virtual machine and manually install a MySQL database (or even a MySQL cluster) on it, unless your organization has a dedicated and capable DBA, I recommend looking at what brings value to your organization. Unless databases directly influence your organization’s revenue, I recommend paying the extra money and choosing a managed solution based on a Platform as a Service model.

It is important to note that several cloud providers offer data migration services to assist in migrating existing MySQL (or even MS-SQL and Oracle) databases from on-premise to a managed service in the cloud.

Benefits of using managed database solutions

- Easy deployment – With a few clicks from within the web console, or using CLI tools, you can deploy fully managed MySQL databases (or a MySQL cluster)

- High availability and Read replica – Configurable during the deployment phase and after the product has already been deployed, according to customer requirements

- Maintenance – The entire service maintenance (including database fine-tuning, operating system, and security patches, etc.) is done by the cloud provider

- Backup and recovery – Embedded inside the managed solution and as part of the pricing model

- Encryption in transit and at rest – Embedded inside the managed solution

- Monitoring – As with any managed solution, cloud providers monitor service stability and allow customers access to metrics for further investigation (if needed)

Alternatives for running managed MySQL database in the cloud

Summary

As you can read in this article, running a MySQL database in a managed environment in the cloud is a viable option, and there are various reasons for taking this step (license cost, less manpower spent maintaining the database and operating system, backups, security, availability, etc.).

References

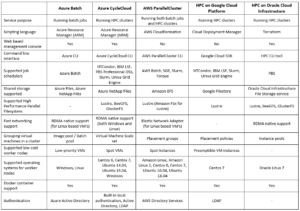

How to run HPC in the cloud?

Is it feasible to run HPC in the cloud? How different is it from running a local HPC cluster? What are some of the common alternatives for running HPC in the cloud?

Introduction

Before beginning our discussion about HPC (High Performance Computing) in the cloud, let us talk about what HPC really means.

“High Performance Computing most generally refers to the practice of aggregating computing power in a way that delivers much higher performance than one could get out of a typical desktop computer or workstation in order to solve large problems in science, engineering, or business.” (https://www.usgs.gov/core-science-systems/sas/arc/about/what-high-performance-computing)

In more technical terms – it refers to a cluster of machines composed of multiple cores (either physical or virtual cores), a lot of memory, fast parallel storage (for read/write) and fast network connectivity between cluster nodes.

HPC is useful when you need a lot of compute resources, from image or video rendering (in batch mode) to weather forecasting (which requires fast connectivity between the cluster nodes).

The world of HPC is divided into two categories:

- Loosely coupled – In this scenario you might need a lot of compute resources, however, each task can run in parallel and is not dependent on other tasks being completed.

Common examples of loosely coupled scenarios: Image processing, genomic analysis, etc.

- Tightly coupled – In this scenario you need fast connectivity between cluster resources (such as memory and CPU), and each cluster node depends on other nodes for the completion of the task.

Common examples of tightly coupled scenarios: Computational fluid dynamics, weather prediction, etc.
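To make the loosely coupled case more concrete, here is a minimal sketch in Python. The function `process_item` is a hypothetical stand-in for one independent task (such as processing a single image), and a local thread pool stands in for the cluster nodes. In a real HPC environment a scheduler would distribute the tasks across machines.

```python
from concurrent.futures import ThreadPoolExecutor
import hashlib


def process_item(item_id: int) -> str:
    """Hypothetical stand-in for one independent task (e.g. processing one image)."""
    return hashlib.sha256(str(item_id).encode()).hexdigest()[:8]


def run_loosely_coupled(item_ids, workers=4):
    # Each task depends only on its own input, so tasks can run in any order
    # and on any worker. map() still returns the results in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(process_item, item_ids))


if __name__ == "__main__":
    print(run_loosely_coupled(range(8)))
```

A tightly coupled job cannot be split this way, because each step on one node needs intermediate results from other nodes. That is why it depends on fast connectivity between the nodes.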

Pricing considerations

Deploying an HPC cluster on premise requires significant resources. This includes a large investment in hardware (multiple machines connected in the cluster, with many CPUs or GPUs, with parallel storage and sometimes even RDMA connectivity between the cluster nodes), manpower with the knowledge to support the platform, a lot of electric power, and more.

Deploying an HPC cluster in the cloud is also costly. The price of a virtual machine with multiple CPUs, GPUs or a large amount of RAM can be very high compared to purchasing the same hardware on premise and using it 24×7 for 3-5 years.

The cost of parallel storage, as compared to other types of storage, is another consideration.

The magic formula for running HPC clusters in the cloud, while still enjoying the benefits of (virtually) unlimited compute/memory/storage resources, is to build dynamic clusters.

We do this by building the cluster for a specific job, according to the customer’s requirements (in terms of number of CPUs, amount of RAM, storage capacity, network connectivity between the cluster nodes, required software, etc.). Once the job is completed, we copy the job output data and take down the entire HPC cluster in order to avoid unnecessary hardware cost.
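The pricing argument can be sketched as simple arithmetic. All the figures below (hardware price, yearly operations cost, hourly rate, job sizes) are invented for illustration only and do not reflect any provider’s actual prices.

```python
def on_premise_cost(hardware_price, yearly_operations, years):
    """Total cost of owning a cluster: purchase plus power, staff, etc."""
    return hardware_price + yearly_operations * years


def always_on_cloud_cost(hourly_rate_per_node, nodes, years):
    """Total cost of keeping the same cluster running 24x7 in the cloud."""
    return hourly_rate_per_node * nodes * 24 * 365 * years


def dynamic_cloud_cost(hourly_rate_per_node, nodes, hours_per_job, jobs_per_year, years):
    """Total cost when the cluster exists only for the duration of each job."""
    return hourly_rate_per_node * nodes * hours_per_job * jobs_per_year * years


# Hypothetical numbers, for illustration only.
years = 3
print("on premise:     ", on_premise_cost(100_000, 20_000, years))
print("cloud, 24x7:    ", always_on_cloud_cost(2.0, 10, years))
print("cloud, dynamic: ", dynamic_cloud_cost(2.0, 10, 5, 100, years))
```

The point of the comparison is that the dynamic option is billed only for the hours in which jobs actually run, so its cost scales with the workload rather than with the calendar.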

Alternatives for running HPC in the cloud

Summary

As you can see, running HPC in the public cloud is a viable option. But you need to carefully plan the specific solution, after gathering the customer’s exact requirements in terms of required compute resources, required software and of course budget estimation.

Product documentation

- Azure Batch

https://azure.microsoft.com/en-us/services/batch/

- Azure CycleCloud

https://azure.microsoft.com/en-us/features/azure-cyclecloud/

- AWS ParallelCluster

https://aws.amazon.com/hpc/parallelcluster/

- Slurm on Google Cloud Platform

https://github.com/SchedMD/slurm-gcp

- HPC on Oracle Cloud Infrastructure

What makes a good cloud architect?

Virtually any organization active in the public cloud needs at least one cloud architect to be able to see the big picture and to assist in designing solutions.

So, what makes a cloud architect a good cloud architect?

In a word – be multidisciplinary.

Customer-Oriented

While the position requires good technical skills, a good cloud architect must also have good customer-facing skills. A cloud architect needs to understand the business needs, from the end-users (usually connecting from the Internet) to the technological teams. That means being able to speak many “languages,” and translate from one to another while navigating the delicate nuances of each. All in the same conversation.

At the end of the day, the technology is just a means to serve your customers.

Sometimes a customer may ask for something that is not technical at all (“Draw me a sheep…”) and sometimes it could be very technical (“I want to expose an API to allow read and update of a backend database”).

A good cloud architect knows how to turn a drawing of a sheep into a full-blown architecture diagram, complete with components, protocols, and more. In other words, translating a business or customer requirement into a technical requirement.

Technical Skills

Here are a few of the technical skills good cloud architects should have under their belts.

- Operating systems – Know how to deploy and troubleshoot problems related to virtual machines, based on both Windows and Linux.

- Cloud services – Be familiar with at least one public cloud provider’s services (such as AWS, Azure, GCP, Oracle Cloud, etc.). It is even better to be familiar with at least two public cloud vendors, since the world is heading toward multi-cloud environments.

- Networking – Be familiar with network-related concepts such as OSI model, TCP/IP, IP and subnetting, ACLs, HTTP, routing, DNS, etc.

- Storage – Be familiar with storage-related concepts such as object storage, block storage, file storage, snapshots, SMB, NFS, etc.

- Database – Be familiar with database-related concepts such as relational database, NoSQL database, etc.

- Architecture – Be familiar with concepts such as three-tier architecture, micro-services, serverless, twelve-factor app, API, etc.
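As a small taste of the networking skills listed above, here is a sketch of subnet planning using Python’s standard `ipaddress` module. The address range and the helper names `plan_subnets` and `find_subnet` are assumptions chosen for the example, and the sketch ignores provider-specific rules such as reserved addresses in each subnet.

```python
import ipaddress


def plan_subnets(cidr: str, new_prefix: int):
    """Split a network (e.g. a virtual network's address space) into equal subnets."""
    network = ipaddress.ip_network(cidr)
    return list(network.subnets(new_prefix=new_prefix))


def find_subnet(subnets, address: str):
    """Return the subnet that contains `address`, or None if there is none."""
    ip = ipaddress.ip_address(address)
    return next((s for s in subnets if ip in s), None)


# Hypothetical address space: one /16 split into /24 subnets.
subnets = plan_subnets("10.0.0.0/16", 24)
print(len(subnets), subnets[0], subnets[0].num_addresses)
print(find_subnet(subnets, "10.0.1.25"))
```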

Information Security

A good cloud architect can read an architecture diagram and knows which questions to ask and which security controls to embed inside a given solution.

- Identity management – Be familiar with concepts such as directory services, Identity and access management (IAM), Active Directory, Kerberos, SAML, OAuth, federation, authentication, authorization, etc.

- Auditing – Be familiar with concepts such as audit trail, access logs, configuration changes, etc.

- Cryptography – Be familiar with concepts such as TLS, public key authentication, encryption in transit and at rest, tokenization, hashing algorithms, etc.

- Application Security – Be familiar with concepts such as input validation, OWASP Top 10, SDLC, SQL Injection, etc.
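To illustrate one of the application security concepts above, the sketch below shows a parameterized query, a common defense against SQL injection. It uses Python’s built-in `sqlite3` module with an in-memory database. The `users` table and the `find_user` helper are made up for the example.

```python
import sqlite3


def find_user(conn, username):
    # The ? placeholder makes the driver treat `username` strictly as data,
    # so input such as "' OR '1'='1" cannot change the structure of the query.
    cur = conn.execute("SELECT id, name FROM users WHERE name = ?", (username,))
    return cur.fetchall()


# Hypothetical schema and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

print(find_user(conn, "alice"))
print(find_user(conn, "' OR '1'='1"))
```

Building the same query with string concatenation would let the second input match every row, which is exactly the kind of question a cloud architect should raise when reviewing a design.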

Laws, Regulation and Standards

In our dynamic world a good cloud architect needs to have at least a basic understanding of the following topics:

- Laws and Regulation – Be familiar with privacy regulations such as GDPR, CCPA, etc., and how they affect your organization’s cloud environments and products

- Standards – Be familiar with standards such as ISO 27001 (Information Security Management), ISO 27017 (Cloud Security), ISO 27018 (Protection of PII in public clouds), ISO 27701 (Privacy), SOC 2, CSA Security Trust Assurance and Risk (STAR), etc.

- Contractual agreements – Be able to read contracts between customers and public cloud providers, and know which topics need to appear in a typical contract (SLA, business continuity, etc.)

Code

Good cloud architects, like good DevOps guys or gals, are not afraid to get their hands dirty and are able to read and write code, mostly for automation purposes.

The required skills vary from scenario to scenario, but in most cases include:

- CLI – Be able to run command line tools in order to do anything from querying existing environment settings to updating or deploying new components.

- Scripting – Be familiar with at least one scripting language, such as PowerShell, Bash scripts, Python, JavaScript, etc.

- Infrastructure as Code – Be familiar with at least one declarative language or tool, such as HashiCorp Terraform, AWS CloudFormation, Azure Resource Manager, Google Cloud Deployment Manager, Red Hat Ansible, etc.

- Programming languages – Be familiar with at least one programming language, such as Java, Microsoft .NET, Ruby, etc.

Sales

A good cloud architect needs to be able to “sell” a solution to various audiences. Again, the required skills vary from scenario to scenario, but in most cases include:

- Pricing calculators – Be familiar with various cloud service pricing models and be able to estimate cloud service costs using tools such as AWS Simple Monthly Calculator, Azure Pricing Calculator, Google Cloud Platform Pricing Calculator, Oracle Cloud Cost Estimator, etc.

- Cloud vs. On-Premise – Be able to weigh in on the pros and cons of cloud vs. on premise, with different audiences.

- Architecture alternatives – Be able to present different architecture alternatives (from VM to micro-services up to Serverless) for each scenario. It is always a good idea to have a backup plan.

Summary

Recruiting a good cloud architect is indeed challenging. The role requires multidisciplinary skills – from soft skills (being customer-oriented and a salesperson) to deep technical skills (technology, cloud services, information security, etc.).

There is no alternative to years of hands-on experience. The more areas of experience cloud architects have, the better they will succeed at the job.

References

- What is a cloud architect? A vital role for success in the cloud.

- Want to Become a Cloud Architect? Here’s How

https://www.businessnewsdaily.com/10767-how-to-become-a-cloud-architect.html

The Public Cloud is Coming to Your Local Data Center

For a long time, public cloud providers have given users (almost) unlimited access to compute resources (virtual servers, storage, database, etc.) inside their end-to-end managed data centers. Recently, however, the need for local on-premise solutions has started to be felt.

In scenarios where network latency is a concern, or where there is a need to store sensitive or critical data inside a local data center, public cloud providers have built server racks meant for deploying the familiar cloud infrastructure (virtual servers, storage and network equipment) locally, while using the same user interface and the same APIs for controlling components using CLI or SDK.

Managing the lower infrastructure layers (monitoring of hardware/software/licenses and infrastructure updates) is done remotely by the public cloud providers, which, in some cases, requires constant inbound Internet connectivity.

This solution allows customers to enjoy all the benefits of the public cloud (minus the scale), transparently expand on-premise environments to the public cloud, continue storing and processing data inside local data centers as much as required, and, in cases where there is demand for large compute power, migrate environments (or deploy new environments) to the public cloud.

The solution is suitable for military and defense users, or organizations with large data sets which cannot be moved to the public cloud in a reasonable amount of time.

Below is a comparison of three solutions currently available:

| | Azure Stack Hub | AWS Outposts | Oracle Private Cloud at Customer |
|---|---|---|---|
| Ability to work in disconnect mode from the public cloud / Internet | Fully supported / Partially supported | The solution requires constant connectivity to a region in the cloud | The solution requires remote connectivity of Oracle support for infrastructure monitoring and software updates |
| VM deployment support | Fully supported | Fully supported | Fully supported |
| Containers or Kubernetes deployment support | Fully supported | Fully supported | Fully supported |
| Support Object Storage locally | Fully supported | Will be supported in 2020 | Fully supported |
| Support Block Storage locally | Fully supported | Fully supported | Fully supported |
| Support managed database deployment locally | – | Fully supported (MySQL, PostgreSQL) | Fully supported (Oracle Database) |
| Support data analytics deployment locally | – | Fully supported (Amazon EMR) | – |
| Support load balancing services locally | Fully supported | Fully supported | Fully supported |
| Built in support for VPN connectivity to the solution | Fully supported | – | – |
| Support connectivity between the solution and resources from on premise site | – | Fully supported | – |
| Built in support for encryption services (data at rest) | Fully supported (Key Vault) | Fully supported (AWS KMS) | – |
| Maximum number of physical cores (per rack) | 100 physical cores | – | 96 physical cores |
| Maximum storage capacity (per rack) | 5TB | 55TB | 200TB |

Summary

The private cloud solutions noted here are not identical in terms of their capabilities. At least for the initial installation and support, a partner who specializes in this field is a must.

Support for the well-known services from public cloud environments (virtual servers, storage, database, etc.) will expand over time, as these solutions become more commonly used by organizations or hosting providers.

These solutions are not meant for every customer. However, they provide a suitable solution in scenarios where it is not possible to use the public cloud, for regulatory or military/defense reasons for example, or when organizations are planning a long-term migration to the public cloud a few years in advance. These plans can be due to legacy applications not built for the cloud, network latency issues or large data sets that need to be copied to the cloud.