Archive for the ‘AWS’ Category

Automation as key to cloud adoption success

After deploying several workloads in the public cloud, making mistakes, failing, fixing, and starting to use the cloud for production workloads, it is time to think about the next step in cloud adoption.

To be able to fully embrace the benefits of the public cloud, the scale, the elasticity, and the short time it takes to deploy new resources, it is time to put automation in place.

Automation allows us to repeat the same tasks over and over again, deploying the same configuration to multiple environments (Dev, Test, Prod) and getting the same results – with no human errors (assuming you have tested your code…)
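
As a minimal sketch of that idea (the setting names and values below are hypothetical and not tied to any cloud provider), one shared base configuration can be rendered for each environment, so that only the deliberate per-environment overrides differ:

```python
from copy import deepcopy

# Hypothetical baseline shared by every environment.
BASE_CONFIG = {
    "instance_type": "small",
    "instance_count": 1,
    "encryption_at_rest": True,
    "tags": {"managed_by": "automation"},
}

# Hypothetical per-environment overrides; everything else stays identical.
OVERRIDES = {
    "dev": {},
    "test": {"instance_count": 2},
    "prod": {"instance_type": "large", "instance_count": 4},
}


def render_config(environment: str) -> dict:
    """Return the base configuration with the environment's overrides applied."""
    if environment not in OVERRIDES:
        raise ValueError(f"unknown environment: {environment}")
    config = deepcopy(BASE_CONFIG)  # never mutate the shared baseline
    config.update(OVERRIDES[environment])
    config["tags"] = {**config["tags"], "environment": environment}
    return config


if __name__ == "__main__":
    for env in ("dev", "test", "prod"):
        print(env, render_config(env))
```

Because every environment is produced by the same function, a setting such as encryption at rest cannot be silently forgotten in one of them.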

Automation can be achieved in various ways – from using the CLI, using the cloud vendor’s SDK (languages such as Python, Go, Java, and more), or using Infrastructure as Code (such as Terraform, AWS CloudFormation, Azure Resource Manager, and more).

In this article, we shall review some of the common alternatives for automating with code.

Why use code?

The clear benefit of using code for automation is change management. Simply choose your favorite source control (such as GitHub, AWS CodeCommit, Azure Repos, and more) and upload your scripts: you get the version history of your code and can tell at each stage who changed it.

Another benefit of using code for automation is the fact that the Internet is full of samples you can find to automate (almost) anything in your cloud environment.

The downside of doing everything using code is the learning curve: your organization’s IT or DevOps teams need to learn new languages. Once they pass this stage, you get all the benefits of scripting languages.

Automation – the AWS way

If AWS is your sole cloud provider, you should learn and start using the following built-in services or capabilities offered by AWS:

Infrastructure as Code

- AWS CloudFormation – The built-in IaC for deploying and managing AWS resources.

Reference: https://github.com/aws-cloudformation/aws-cloudformation-samples

- AWS Cloud Development Kit (AWS CDK) – Define infrastructure in common programming languages such as Python, Java, .NET, and more; the code is synthesized into CloudFormation templates.

Reference: https://github.com/aws-samples/aws-cdk-examples

Policy as Code

- Service control policies (SCPs) – Managing permissions in AWS Organizations.

Reference: https://docs.aws.amazon.com/organizations/latest/userguide/orgs_manage_policies_scps_examples.html

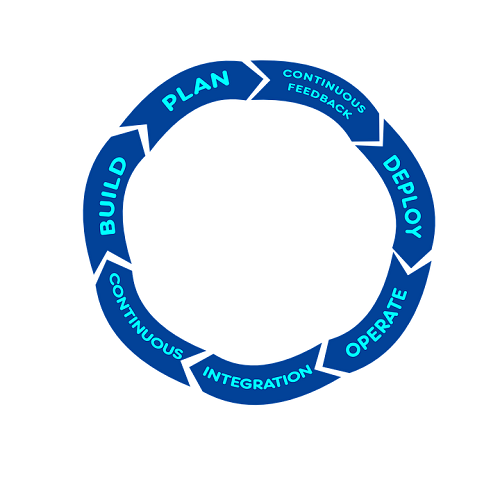

CI/CD pipeline

- AWS CodePipeline – A fully managed continuous delivery service.

Reference: https://docs.aws.amazon.com/codepipeline/latest/userguide/tutorials.html

Containers and Kubernetes

- Amazon ECS – Container management service based on the AWS platform.

Reference: https://docs.aws.amazon.com/AmazonECS/latest/developerguide/example_task_definitions.html

- Amazon Elastic Kubernetes Service (EKS) – Managed Kubernetes service.

Reference: https://github.com/aws-quickstart/quickstart-amazon-eks

Automation – the Azure way

If Azure is your sole cloud provider, you should learn and start using the following built-in services or capabilities offered by Azure:

Infrastructure as Code

- Azure Resource Manager templates (ARM templates) – The built-in IaC for deploying and managing Azure resources.

Reference: https://github.com/Azure/azure-quickstart-templates

- Bicep – Declarative language for deploying Azure resources.

Reference: https://github.com/Azure/azure-docs-bicep-samples

Policy as Code

- Azure Policy – Enforce organizational standards across the Azure organization.

Reference: https://github.com/Azure/azure-policy

CI/CD pipeline

- Azure Pipelines – A fully managed continuous delivery service.

Reference: https://github.com/microsoft/azure-pipelines-yaml

Containers and Kubernetes

- Azure Container Instances – Container management service based on the Azure platform.

Reference: https://docs.microsoft.com/en-us/samples/browse/?products=azure&terms=container%2Binstance

- Azure Kubernetes Service (AKS) – Managed Kubernetes service.

Reference: https://github.com/Azure/AKS

Automation – the Google Cloud way

If GCP is your sole cloud provider, you should learn and start using the following built-in services or capabilities offered by GCP:

Infrastructure as Code

- Google Cloud Deployment Manager – The built-in IaC for deploying and managing GCP resources.

Reference: https://github.com/GoogleCloudPlatform/deploymentmanager-samples

Policy as Code

- Google Organization Policy Service – Programmatic control over the organization’s cloud resources.

CI/CD pipeline

- Google Cloud Build – A fully managed continuous delivery service.

Reference: https://github.com/GoogleCloudPlatform/cloud-build-samples

Containers and Kubernetes

- Google Kubernetes Engine (GKE) – Managed Kubernetes service.

Reference: https://github.com/GoogleCloudPlatform/kubernetes-engine-samples

Automation – the cloud agnostic way

If you plan for the future, plan for multi-cloud. Look for solutions that can connect to multiple cloud environments, so your DevOps team does not have to learn a different tool and language for each provider and can deploy workloads on several cloud environments.

Infrastructure as Code

- HashiCorp Terraform – The most widely used IaC for deploying and managing resources both in the cloud and on-premises.

Reference: https://registry.terraform.io/browse/providers

Policy as Code

- HashiCorp Sentinel – Policy as code framework that complements Terraform code.

Reference: https://www.terraform.io/cloud-docs/sentinel/examples

CI/CD pipeline

- Jenkins – The most widely used open-source CI/CD tool.

Reference: https://www.jenkins.io/doc/pipeline/examples/

Containers and Kubernetes

- Docker – The most widely used container run-time for deploying applications.

Reference: https://github.com/dockersamples

- Kubernetes – The most widely used container orchestration open-source platform.

Reference: https://github.com/kubernetes/examples

Summary

In this post, I have reviewed the most common solutions that allow you to automate your workloads’ deployment, management, and maintenance using various scripting languages.

Some of the solutions are bound to a specific cloud provider, while others are considered cloud agnostic.

Use automation to fully embrace the power and benefits of the public cloud.

If you don’t have experience writing code, take the time to learn. The more you practice, the more experience you will gain.

As Werner Vogels, the Amazon CTO, always says – “Go Build”.

Cloud and the shared responsibility model misconceptions

One of the most common concepts when working with cloud services is the “Shared responsibility model”.

The model aims to set the responsibility boundaries between the cloud service provider (CSP) and the cloud service consumer, depending on the cloud service model (IaaS, PaaS, SaaS).

In this post, I will review common misconceptions regarding the shared responsibility model.

Misconception #1 — My cloud provider’s certifications allow me to comply with regulations

This is a common misconception for companies (and new SaaS providers) who fail to understand the shared responsibility model while deploying their first workload.

Reviewing cloud providers’ compliance pages, we can see that the providers have already certified themselves for most regulations and local laws, and in some cases even offer customers special environments that are already in compliance with regulations such as PCI-DSS or HIPAA.

If you are planning to store sensitive customers’ data (from PII, healthcare, financial, or any other types of sensitive data) in a public cloud, keep in mind that according to the shared responsibility model, the cloud provider is responsible only for the lower layers of the architecture:

· IaaS — the CSP is responsible for all layers, from the physical layer to the virtualization layer

· PaaS — the CSP is responsible for all layers, from the physical layer to the guest operating system, middleware, and even runtime

· SaaS — the CSP is responsible for all layers, from the physical layer to the application layer
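
The boundaries listed above can be sketched as a small lookup. This is a simplified illustration only: the layer names (in particular "network" and "data") and their ordering are assumptions, and real providers draw the line differently per service.

```python
# Simplified stack, ordered from the bottom (physical) to the top (data).
# The exact layer names are an assumption made for this illustration.
LAYERS = [
    "physical",
    "network",
    "virtualization",
    "guest_os",
    "middleware",
    "runtime",
    "application",
    "data",
]

# Highest layer the CSP is responsible for in each service model,
# following the list above.
CSP_TOP_LAYER = {
    "IaaS": "virtualization",
    "PaaS": "runtime",
    "SaaS": "application",
}


def customer_layers(service_model: str) -> list:
    """Return the layers that remain the customer's responsibility."""
    top = LAYERS.index(CSP_TOP_LAYER[service_model])
    return LAYERS[top + 1:]


if __name__ == "__main__":
    for model in ("IaaS", "PaaS", "SaaS"):
        print(model, "-> customer is responsible for:", customer_layers(model))
```

Whatever the service model, the data layer always stays on the customer's side of the boundary.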

Bottom line: the fact that a CSP has all the relevant certifications means almost nothing when it comes to your own compliance with regulations or protecting your customers’ data.

Each organization storing sensitive data in the cloud must conduct a risk assessment, review which data is stored in the cloud (before storing data in the cloud), and set the proper controls to protect customers’ data.

Misconception #2 — Who is responsible for protecting my data?

When customers (either organizations or personal customers) store their data in public cloud services, they sometimes mistakenly think that if they store their data in one of the major CSPs, their data is protected.

This is a misconception.

All major CSPs offer their customers a large variety of services and tools to protect their data (network access control lists, encryption in transit and at rest, authentication, authorization, auditing, and more). However, according to the shared responsibility model, it is up to the customer (mostly organizations storing their data in the cloud) to decide which security controls to implement.

In most cases, the CSPs don’t have access to customers’ data stored in the cloud, whether organizations decide to use managed storage services (from object storage to managed CIFS/NFS services), managed database services (from relational databases to NoSQL databases) and more.

The most obvious exception is SaaS services, where we allow CSP service accounts to access our data so that we can run queries, get insights about our data, or even perform regular backups. This access is mostly restricted to specific actions and to a specific role or service account, and usually shouldn’t be used by the CSP employees.

At the end of the day, the customer is always the data owner, and as a data owner, the customer must decide whether or not to store sensitive data in the cloud, who should have access to it, which access rights (read, update, delete) each person gets, and more.

Misconception #3 — Availability is not my concern since the cloud is highly available by design

The above headline is true, mainly for major SaaS services.

When looking at availability and building highly available architectures, specifically in IaaS and PaaS, it is up to us, as organizations, to use the services and the service capabilities that CSPs offer us, to build highly available solutions.

Deploying our application on a VM or storing our data in a managed database service, without placing everything behind a load-balancer or in a cluster, will not guarantee the availability that our customers expect.
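
To see why redundancy matters, here is a rough back-of-the-envelope sketch. It assumes that replicas fail independently, that one healthy replica is enough to serve traffic, and that the load-balancer itself never fails. The 99.5% figure is a hypothetical per-instance availability, not a number from any provider's SLA.

```python
HOURS_PER_YEAR = 365 * 24


def combined_availability(single: float, replicas: int) -> float:
    """Availability of `replicas` independent copies when one healthy copy suffices."""
    if not 0.0 <= single <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if replicas < 1:
        raise ValueError("at least one replica is required")
    # The service is down only when every replica is down at the same time.
    return 1.0 - (1.0 - single) ** replicas


def downtime_hours_per_year(availability: float) -> float:
    """Expected yearly downtime, in hours, for a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    single_vm = 0.995  # hypothetical availability of one VM
    for replicas in (1, 2, 3):
        total = combined_availability(single_vm, replicas)
        print(replicas, f"{total:.6f}", f"{downtime_hours_per_year(total):.3f} h/year")
```

In practice failures are rarely fully independent (a zone outage takes down every replica in that zone), which is why spreading replicas across availability zones matters as much as the replica count.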

Even if we are using managed object storage services and we choose a low redundancy tier, using a single availability zone, the CSP does not guarantee high availability.

To achieve high availability for our workloads, we need to review cloud providers’ documentation, such as the “Well-Architected Framework”, and design our workloads to fit business needs.

Misconception #4 — Incident response in the cloud is an impossible mission

This part is a little bit tricky.

As AWS always mentions, the provider is responsible for the security of the cloud, and therefore for the incident response process of the cloud infrastructure: the physical data center, the host OS, the network equipment, the virtualization, and all the managed services.

We, as customers of cloud services, are responsible for security within our cloud environments.

In IaaS, everything within the guest OS is our responsibility as customers of the cloud.

It is our responsibility to enable auditing as much as possible, and send all logs to a central log repository and from there to our SIEM system (whether it is located on-premises or in a managed cloud service).

There are also documented procedures for building a forensics environment, made out of snapshots of our VMs or databases, for further analysis.

It is not perfect; we still don’t control the entire flow of the packet from the lower network layers to the application layer, and on managed PaaS services we only have audit logs and we can’t perform memory analysis of managed services (such as databases).

In SaaS services, it gets even worse: in the best case, the SaaS provider is mature enough to allow us to pull audit logs using an API and send them to our SIEM system for further analysis. Unfortunately, not all SaaS providers are mature enough to give us access to the audit logs.

Bottom line: challenging, but not completely impossible, depending on the cloud service model and the maturity of the cloud provider.

Summary

It is important to understand the shared responsibility model, but what is more important is to understand the cloud service model and the services or tools available to us for building secure and highly available cloud environments.

References

· AWS Compliance Programs

https://aws.amazon.com/compliance/programs

· Azure compliance documentation

https://docs.microsoft.com/en-us/azure/compliance

· GCP Compliance offerings

https://cloud.google.com/security/compliance/offerings

· AWS Well-Architected Framework

https://docs.aws.amazon.com/wellarchitected/latest/framework/welcome.html

· Forensic investigation environment strategies in the AWS Cloud

https://aws.amazon.com/blogs/security/forensic-investigation-environment-strategies-in-the-aws-cloud

· Computer forensics chain of custody in Azure

https://docs.microsoft.com/en-us/azure/architecture/example-scenario/forensics

Introduction to Policy as Code

Building our first environment in the cloud, or perhaps migrating our first couple of workloads to the cloud is fairly easy until we begin the ongoing maintenance of the environment.

Pretty soon we start to realize we are losing control over our environment – from configuration changes, forgetting to implement security best practices, and more.

At this stage, we wish we could go back, rebuild everything from scratch, and set much stricter rules for creating new resources and their configuration.

Manual configuration simply doesn’t scale.

Developers would like to focus on what they do best – developing new products or features, while security teams would like to enforce guard rails, allowing developers to do their work, while still enforcing security best practices.

In the past couple of years, one of the hottest topics has been Infrastructure as Code, a declarative way to deploy new environments using code (mostly in JSON or YAML format).

Infrastructure as Code is a good solution for deploying a new environment, or even for reusing some of the code to deploy several environments. However, it is meant for a specific task.

What happens when we would like to set guard rails on an entire cloud account or even on our entire cloud organization environment, containing multiple accounts, which may expand or change daily?

This is where Policy as Code comes into the picture.

Policy as Code allows you to write high-level rules and assign them to an entire cloud environment, to be effective on any existing or new product or service we deploy or consume.

Policy as Code allows security teams to define security, governance, and compliance policies according to business needs and assign them at the organizational level.

The easiest way to explain it is – can user X perform action Y on resource Z?
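
That question can be sketched as a tiny rule evaluator. This is an illustration of the concept only, not the evaluation logic of any specific cloud provider: the `Rule` format, the action names, and the "explicit deny wins, otherwise default deny" behavior are assumptions chosen for the example.

```python
from fnmatch import fnmatchcase
from typing import NamedTuple


class Rule(NamedTuple):
    effect: str     # "allow" or "deny"
    principal: str  # glob pattern, e.g. "*" or "team-a-*"
    action: str     # glob pattern, e.g. "storage:*"
    resource: str   # glob pattern, e.g. "bucket-*"


def is_allowed(rules, principal: str, action: str, resource: str) -> bool:
    """Can `principal` perform `action` on `resource`?"""
    allowed = False
    for rule in rules:
        matches = (
            fnmatchcase(principal, rule.principal)
            and fnmatchcase(action, rule.action)
            and fnmatchcase(resource, rule.resource)
        )
        if not matches:
            continue
        if rule.effect == "deny":
            return False  # an explicit deny always wins
        if rule.effect == "allow":
            allowed = True
    return allowed  # nothing matched: denied by default


# Hypothetical organization-wide rules.
RULES = [
    Rule("allow", "*", "storage:*", "*"),
    Rule("deny", "*", "storage:MakePublic", "*"),
]

if __name__ == "__main__":
    print(is_allowed(RULES, "alice", "storage:Read", "bucket-a"))
    print(is_allowed(RULES, "alice", "storage:MakePublic", "bucket-a"))
```

Because the rules are plain data, they can be stored in source control, reviewed, and applied to every account in the same way.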

A more practical example from the AWS realm – block the ability to create a public S3 bucket. Once the policy is set and assigned, security teams won’t need to worry about whether someone made a mistake and left a publicly accessible S3 bucket – the policy will simply block this action.

Looking for a code example to achieve the above goal? See:

https://aws-samples.github.io/aws-iam-permissions-guardrails/guardrails/scp-guardrails.html#scp-s3-1
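
For orientation, here is a minimal sketch of what such a guardrail can look like when generated from Python. It is not copied from the linked sample. It assumes S3 Block Public Access is already enabled, and simply denies anyone in the affected accounts the ability to change those settings; the `Sid` value and function name are made up for the example.

```python
import json


def build_block_public_access_scp() -> dict:
    """Service control policy that denies changes to S3 Block Public Access settings."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyChangingS3BlockPublicAccess",
                "Effect": "Deny",
                "Action": [
                    "s3:PutAccountPublicAccessBlock",
                    "s3:PutBucketPublicAccessBlock",
                ],
                "Resource": "*",
            }
        ],
    }


if __name__ == "__main__":
    # The JSON output is what would be attached as an SCP in AWS Organizations.
    print(json.dumps(build_block_public_access_scp(), indent=2))
```

In a real organization you would probably exempt a dedicated administration role, so that the settings can still be managed deliberately.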

Policy as Code on AWS

When designing a multi-account environment based on the AWS platform, you should use AWS Control Tower.

AWS Control Tower aims to assist organizations deploying multiple AWS accounts under the same AWS organization, with the ability to deploy policies (or Service Control Policies) from a central location, so that every newly created AWS account gets the same policies.

Examples of governance policies:

- Allowing resource creation only in specific regions – this capability lets European customers block resource creation in regions outside Europe, to help comply with the GDPR.

- Allowing only specific EC2 instance types (to control cost).
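
The two governance examples above could be expressed roughly as follows. This is a sketch under assumptions: the region and instance-type lists are placeholders, the list of exempted global services is deliberately short and incomplete, and the `Sid` values are invented for the example.

```python
import json


def deny_outside_regions(allowed_regions: list) -> dict:
    """Statement denying requests sent to any region outside the allow-list."""
    return {
        "Sid": "DenyOutsideAllowedRegions",
        "Effect": "Deny",
        # Global services are exempted; this short list is an assumption and
        # would need to be extended for a real environment.
        "NotAction": ["iam:*", "organizations:*", "sts:*", "support:*"],
        "Resource": "*",
        "Condition": {"StringNotEquals": {"aws:RequestedRegion": allowed_regions}},
    }


def deny_other_instance_types(allowed_types: list) -> dict:
    """Statement denying the launch of EC2 instance types outside the allow-list."""
    return {
        "Sid": "DenyUnapprovedInstanceTypes",
        "Effect": "Deny",
        "Action": "ec2:RunInstances",
        "Resource": "arn:aws:ec2:*:*:instance/*",
        "Condition": {"StringNotEquals": {"ec2:InstanceType": allowed_types}},
    }


def build_governance_scp(allowed_regions: list, allowed_types: list) -> dict:
    """Combine both statements into one service control policy document."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            deny_outside_regions(allowed_regions),
            deny_other_instance_types(allowed_types),
        ],
    }


if __name__ == "__main__":
    policy = build_governance_scp(["eu-west-1", "eu-central-1"], ["t3.micro", "t3.small"])
    print(json.dumps(policy, indent=2))
```

Generating the policy from parameters keeps the allow-lists in one reviewed place instead of scattered across hand-edited JSON files.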

Examples of security policies:

- Prevent upload of unencrypted objects to an S3 bucket, to protect sensitive objects.

https://aws-samples.github.io/aws-iam-permissions-guardrails/guardrails/scp-guardrails.html#scp-s3-2

- Deny the use of the Root user account (least privilege best practice).
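
Both security examples can be sketched in the same way. This is an illustrative policy rather than the content of the linked guardrail: the first statement rejects uploads that carry no server-side encryption header, and the second denies every action performed by the root user of a member account. The `Sid` values are assumptions.

```python
import json


def build_security_scp() -> dict:
    """Service control policy with two illustrative security guardrails."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnencryptedObjectUploads",
                "Effect": "Deny",
                "Action": "s3:PutObject",
                "Resource": "*",
                # Matches when the request has no server-side encryption header.
                "Condition": {"Null": {"s3:x-amz-server-side-encryption": "true"}},
            },
            {
                "Sid": "DenyRootUser",
                "Effect": "Deny",
                "Action": "*",
                "Resource": "*",
                # Matches the root user of any account the policy is attached to.
                "Condition": {"StringLike": {"aws:PrincipalArn": "arn:aws:iam::*:root"}},
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_security_scp(), indent=2))
```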

AWS Control Tower allows you to configure baseline policies using CloudFormation templates, over an entire AWS organization, or on a specific AWS account.

To further assist in writing CloudFormation templates and service control policies at large scale, AWS offers some additional tools:

- Customizations for AWS Control Tower (CfCT) – customize AWS accounts and OUs, and make sure governance and security policies remain in sync with security best practices.

- AWS CloudFormation Guard – check CloudFormation templates for compliance against pre-defined policies.

Summary

Policy as Code allows an organization to automate the deployment of governance and security policies at large scale, keeping AWS organizations and accounts secure, while allowing developers to invest their time in developing new products, with minimal changes to their code required to comply with organizational policies.

References

- Best Practices for AWS Organizations Service Control Policies in a Multi-Account Environment

- AWS IAM Permissions Guardrails

https://aws-samples.github.io/aws-iam-permissions-guardrails/guardrails/scp-guardrails.html

- AWS Organizations – general examples

- Customizations for AWS Control Tower (CfCT) overview

https://docs.aws.amazon.com/controltower/latest/userguide/cfct-overview.html

- Policy-as-Code for Securing AWS and Third-Party Resource Types

https://aws.amazon.com/blogs/mt/policy-as-code-for-securing-aws-and-third-party-resource-types/

Journey for writing my first book about cloud security

My name is Eyal, and I am a cloud architect.

I have been in the IT industry since 1998 and began working with public clouds in 2015.

Over the years I have gained hands-on experience working on the infrastructure side of AWS, Azure, and GCP.

The more I worked with the various services from the three major cloud providers, the more I had the urge to compare the cloud providers’ capabilities, and I have shared several blog posts comparing the services.

In 2021 I was approached by PACKT publishing after they came across one of my blog posts on social media, and they offered me the opportunity to write a book about cloud security, comparing AWS, Azure, and GCP services and capabilities.

Over the years I have published many blog posts through social media and public websites, but this was my first experience writing an entire book with the support and assistance of a well-known publisher.

As with any previous article, I began by writing down each chapter title and main headlines for each chapter.

Once the chapters were approved, I moved on to write the actual chapters.

For each chapter, I first wrote down the headlines and then began filling them with content.

Before writing each chapter, I researched the subject, collected references from the vendors’ documentation, and looked for security best practices.

Once I completed a chapter, I submitted it for review by the PACKT team.

The PACKT team, together with external reviewers, sent me their input: things to change, additional material to add, requests for relevant diagrams, and more.

Since copyright and plagiarism are important topics to take care of while writing a book, I prepared my own diagrams and submitted them to PACKT.

Finally, after a lot of review and corrections, which took almost a year, the book draft was submitted to another external reviewer and once comments were fixed, the work on the book (at least from my side as an author) was completed.

From my perspective, the book is unique in that it does not focus on a single public cloud provider, but constantly compares the three major cloud providers.

For readers who only work with a single cloud provider, I recommend focusing on the topics relevant to that provider.

For each topic, I made a list of best practices, which can also be used as a checklist for securing the cloud providers’ environment, and for each recommendation I have added a reference for further reading from the vendors’ documentation.

If you are interested in learning how to secure cloud environments based on AWS, Azure, or GCP, my book is available for purchase from any of the following bookstores:

- Amazon:

https://www.amazon.com/Cloud-Security-Handbook-effectively-environments/dp/180056919X

- Barnes & Noble:

https://www.barnesandnoble.com/w/cloud-security-handbook-eyal-estrin/1141215482?ean=9781800569195

- PACKT

https://www.packtpub.com/product/cloud-security-handbook/9781800569195

Not all cloud providers are built the same

When organizations debate workload migration to the cloud, they begin to realize the number of public cloud alternatives that exist, both U.S. hyperscale cloud providers and several small to medium European and Asian providers.

The more we study the differences between the cloud providers (both IaaS/PaaS and SaaS providers), the more we realize that not all cloud providers are built the same.

How can we select a mature cloud provider from all the alternatives?

Transparency

Mature cloud providers make sure you don’t have to search their website to locate their security compliance documents, and allow you to download their security controls documentation, such as SOC 2 Type II, CSA STAR, CSA Cloud Controls Matrix (CCM), etc.

What happens if we wish to evaluate the cloud provider by ourselves?

Will the cloud provider (no matter what cloud service model) allow me to conduct a security assessment (or even a penetration test) to check the effectiveness of its security controls?

Global presence

When evaluating cloud providers, ask yourself the following questions:

- Does the cloud provider have a local presence near my customers?

- Will I be able to deploy my application in multiple countries around the world?

- In case of an outage, will I be able to continue serving my customers from a different location with minimal effort?

Scale

Deploying an application for the first time, we might not think about it, but what happens in the peak scenario?

Will the cloud provider allow me to deploy hundreds or even thousands of VMs (or even better, containers) in a short amount of time, for a short period, from the same location?

Will the cloud provider allow me infinite scale to store my data in cloud storage, without having to guess or estimate the storage size?

Multi-tenancy

As customers, we expect our cloud providers to offer us a fully private environment.

We never want to hear about a “noisy neighbor” (where one customer uses a lot of resources, which eventually affects other customers), and we never want to hear a provider admit that some or all of the resources (VMs, databases, storage, etc.) are being shared among customers.

Will the cloud provider be able to offer me a commitment to tenant isolation in its multi-tenant environment?

Stability

One of the major reasons for migrating to the cloud is the ability to re-architect our services, whether we are still using VMs based on IaaS, databases based on PaaS, or fully managed CRM services based on SaaS.

In all scenarios, we would like to have a stable service with zero downtime.

Will the cloud provider allow me to deploy a service in a redundant architecture that will survive a data center outage or infrastructure availability issues (from authentication services to compute, storage, or even network infrastructure) and return to business with minimal customer impact?

APIs

In the modern cloud era, everything is based on APIs (application programming interfaces).

Will the cloud provider offer me various APIs?

From deploying an entire production environment in minutes using Infrastructure as Code, to monitoring the performance and cost of our services and auditing their security – everything should be possible through an API. Otherwise, the service is simply not scalable, mature, automated, or standard, and it is prone to human mistakes.

Data protection

Encrypting data in transit using TLS 1.2 is a common standard, but what about encryption at rest?

Will the cloud provider allow me to encrypt a database, object storage, or a simple NFS storage using my encryption keys, inside a secure key management service?

Will the cloud provider allow me to automatically rotate my encryption keys?

What happens if I need to store secrets (credentials, access keys, API keys, etc.)? Will the cloud provider allow me to store my secrets in a secured, managed, and audited location?

If I am about to store extremely sensitive data (PII, credit card details, healthcare data, or even military secrets), will the cloud provider offer me a solution for confidential computing, where sensitive data stays protected even in memory (in use)?

Well architected

A mature cloud provider has a vast amount of expertise to share with you about how to build an architecture that is secure, reliable, performance efficient, and cost-optimized, and how to continually improve the processes you have built.

Will the cloud provider offer me rich documentation on how to achieve all the above-mentioned goals, to provide my customers the best experience?

Will the cloud provider offer me an automated solution for deploying an entire application stack within minutes from a large marketplace?

Cost management

The more we broaden our use of the IaaS / PaaS service, the more we realize that almost every service has its price tag.

We might not prepare for this in advance, but once we begin to receive the monthly bill, we begin to see that we pay a lot of money, sometimes for services we don’t need, or for an expensive tier of a specific service.

Unlike on-premises, most cloud providers offer us a way to lower the monthly bill or pay only for what we consume.

Regarding cost management, ask yourself the following questions:

Will the cloud provider charge me for services when I am not consuming them?

Will the cloud provider offer me detailed reports that will allow me to find out what I am paying for?

Will the cloud provider offer me documents and best practices for saving costs?

Summary

Answering the above questions for your preferred cloud provider will allow you to differentiate a mature cloud provider from the rest of the alternatives, and assure you that you have made the right choice in selecting a cloud provider.

The answers will provide you with confidence, both when working with a single cloud provider, and when taking a step forward and working in a multi-cloud environment.

References

Security, Trust, Assurance, and Risk (STAR)

https://cloudsecurityalliance.org/star/

SOC 2 – SOC for Service Organizations: Trust Services Criteria

https://www.aicpa.org/interestareas/frc/assuranceadvisoryservices/aicpasoc2report.html

Confidential Computing and the Public Cloud

https://eyal-estrin.medium.com/confidential-computing-and-the-public-cloud-fa4de863df3

Confidential computing: an AWS perspective

https://aws.amazon.com/blogs/security/confidential-computing-an-aws-perspective/

AWS Well-Architected

https://aws.amazon.com/architecture/well-architected

Azure Well-Architected Framework

https://docs.microsoft.com/en-us/azure/architecture/framework/

Google Cloud’s Architecture Framework

https://cloud.google.com/architecture/framework

Oracle Architecture Center

https://docs.oracle.com/solutions/

Alibaba Cloud’s Well-Architected Framework

The Future of Data Security Lies in the Cloud

We have recently read a lot of posts about the SolarWinds hack, a compromise of popular monitoring software used by many organizations around the world.

This is a good example of a supply chain attack, which can happen to any organization.

We have seen other wide-reaching security events over the past decade, such as the Heartbleed bug, Meltdown and Spectre, Apache Struts, and more.

Organizations all around the world were affected by the SolarWinds hack, including the cybersecurity company FireEye, and Microsoft.

Events like these make organizations rethink their cybersecurity and data protection strategies and ask important questions.

Recent changes in the European data protection laws and regulations (such as Schrems II) are trying to limit data transfer between Europe and the US.

Should such security breaches occur? Absolutely not.

Should we live with the fact that such large organizations have been breached? Absolutely not!

Should organizations that have already invested a lot of resources in cloud migration move workloads back on-premises? I don’t think so.

But no organization, not even major financial organizations like banks or insurance companies, or even the largest multinational enterprises, has enough manpower, knowledge, and budget to invest in properly protecting its own data or its customers’ data the way hyperscale cloud providers do.

There are several reasons for this:

- Hyperscale cloud providers invest billions of dollars improving security controls, including dedicated and highly trained personnel.

- A breach of customers’ data that resides at a hyperscale cloud provider can drive that provider out of business, due to the loss of customers’ trust.

- Security is important to most organizations; however, it is not their main line of expertise.

Organizations need to focus on the core business that brings them value, like manufacturing, banking, healthcare, education, etc., and rethink how to obtain services that support their business goals but do not add direct value, such as IT services.

Recommendations for managing security

Security Monitoring

Security best practices often state: “document everything”.

There are two downsides to this recommendation: one, storage capacity is limited, and two, most organizations do not have enough trained manpower to review the logs and find the top incidents to handle.

Switching security monitoring to cloud-based managed systems such as Azure Sentinel or Amazon GuardDuty will assist in detecting important incidents while handling huge log volumes internally.

Encryption

Another security best practice states: “encrypt everything”.

A few years ago, encryption was quite a challenge. Will the service/application support the encryption? Where do we store the encryption key? How do we manage key rotation?

In the past, only banks could afford HSM (Hardware Security Module) for storing encryption keys, due to the high cost.

Today, encryption is standard for most cloud services, backed by key management services such as AWS KMS, Azure Key Vault, Google Cloud KMS and Oracle Key Management.

Most cloud providers not only support encryption at rest, but also support customer-managed keys, which allow customers to generate their own encryption key for each service instead of using the cloud provider’s generated encryption key.

Security Compliance

Most organizations struggle to handle security compliance over large environments on-premises, not to mention large IaaS environments.

This issue can be solved by using managed compliance services such as AWS Security Hub, Azure Security Center, Google Security Command Center or Oracle Cloud Access Security Broker (CASB).

DDoS Protection

Any organization exposing services to the Internet (from a public-facing website, through email or DNS services, to a VPN service) will eventually suffer a volumetric denial-of-service attack.

Only large ISPs have enough bandwidth to absorb such an attack before the border gateway (firewall, external router, etc.) crashes or stops handling incoming traffic.

The hyperscale cloud providers have infrastructure that can handle DDoS attacks against their customers, through services such as AWS Shield, Azure DDoS Protection, Google Cloud Armor, or Oracle Layer 7 DDoS Mitigation.

Using SaaS Applications

In the past, organizations had to maintain their entire infrastructure, from messaging systems to CRM, ERP, and more.

They had to think about scale, resilience, security, and more.

Most breaches of cloud environments originate from misconfigurations on the customer’s side in IaaS / PaaS services.

Today, the preferred way is to consume managed services in SaaS form.

Here are a few examples: Microsoft Office 365, Google Workspace (formerly G Suite), Salesforce Sales Cloud, Oracle ERP Cloud, SAP HANA, etc.

Limit the Blast Radius

To limit the “blast radius”, where an outage or security breach in one service affects other services, we need to re-architect our infrastructure.

Switching from applications deployed inside virtual servers to modern development approaches, such as microservices based on containers, or building new applications on serverless (function as a service) platforms, helps organizations limit the attack surface and the impact of possible future breaches.

Examples of these services: Amazon ECS, Amazon EKS, Azure Kubernetes Service, Google Kubernetes Engine, Google Anthos, Oracle Container Engine for Kubernetes, AWS Lambda, Azure Functions, Google Cloud Functions, Google Cloud Run, Oracle Cloud Functions, etc.

Summary

The bottom line: organizations can improve their security posture by using the public cloud to better protect their data, drawing on the expertise of cloud providers, and investing their time in their core business to maximize value.

Security breaches are inevitable. Shifting to cloud services does not remove an organization’s responsibility to secure its data. It simply lets the organization do it better.

Confidential Computing and the Public Cloud

What exactly is “confidential computing” and what are the reasons and benefits for using it in the public cloud environment?

Introduction to data encryption

To protect data stored in the cloud, we usually use one of the following methods:

· Encryption in transit – Data transferred over the public Internet can be encrypted using the TLS protocol. This prevents third parties from reading or tampering with the traffic.

· Encryption at rest – Data stored at rest, such as databases, object storage, etc., can be encrypted using symmetric encryption, which means using the same key to encrypt and decrypt the data. This commonly uses the AES-256 algorithm.
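To make the "same key encrypts and decrypts" property concrete, here is a minimal sketch using only the Python standard library. It is a toy stream cipher built from SHA-256, not AES, and it must not be used to protect real data; the function names and the sample record are hypothetical, chosen only for illustration. A real system would rely on AES-256 through a vetted library or the cloud provider's key management service.

```python
import hashlib
import secrets


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Derive `length` pseudo-random bytes from key and nonce (toy construction)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out.extend(hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(out[:length])


def xor_with_key(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt: XOR is its own inverse, so one function does both."""
    stream = keystream(key, nonce, len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


key = secrets.token_bytes(32)    # 256-bit key, the same key size AES-256 uses
nonce = secrets.token_bytes(16)  # must never be reused with the same key
plaintext = b"customer record #42"

ciphertext = xor_with_key(key, nonce, plaintext)   # encrypt with the key
recovered = xor_with_key(key, nonce, ciphertext)   # decrypt with the same key
```

Note that `recovered` exists in clear text in memory, which is exactly the exposure that the rest of this post is concerned with.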

When we wish to access encrypted data, we need to decrypt the data in the computer’s memory to access, read and update the data.

This is where confidential computing comes in: it tries to close the gap left between protecting data at rest and data in transit, namely data in use.

Confidential computing uses hardware to isolate data. Data in use is protected by processing it inside a trusted execution environment (TEE).

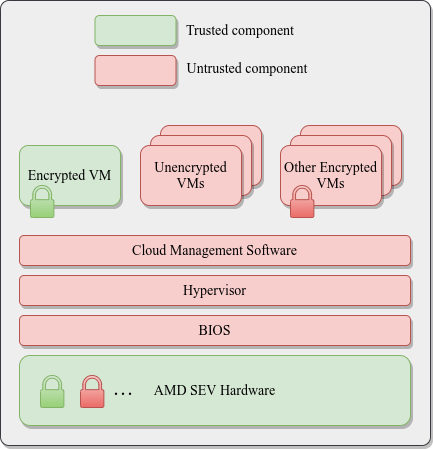

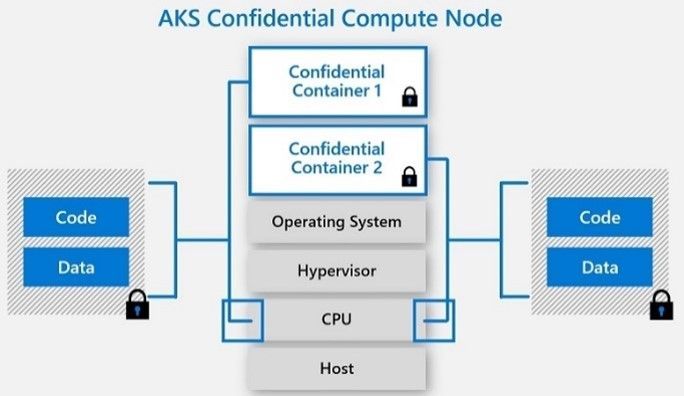

As of November 2020, confidential computing is supported by Intel Software Guard Extensions (SGX) and AMD Secure Encrypted Virtualization (SEV), based on AMD EPYC processors.

Comparison of the available options

| | Intel SGX | Intel SGX2 | AMD SEV 1 | AMD SEV 2 |
|---|---|---|---|---|
| Purpose | Microservices and small workloads | Machine Learning and AI | Cloud and IaaS workloads (above the hypervisor), suitable for legacy applications or large workloads | Cloud and IaaS workloads (above the hypervisor), suitable for legacy applications or large workloads |
| Cloud VM support (November 2020) | – | | | |
| Cloud containers support (November 2020) | – | – | | |
| Operating system supported | Windows, Linux | Linux | Linux | Linux |
| Memory limitation | Up to 128MB | Up to 1TB | Up to available RAM | Up to available RAM |
| Software changes | Require software rewrite | Require software rewrite | Not required | – |

Reference Architecture

AMD SEV Architecture:

Azure Kubernetes Service (AKS) Confidential Computing:

References

· Confidential Computing: Hardware-Based Trusted Execution for Applications and Data

· Google Cloud Confidential VMs vs Azure Confidential Computing

https://msandbu.org/google-cloud-confidential-vms-vs-azure-confidential-computing/

· A Comparison Study of Intel SGX and AMD Memory Encryption Technology

https://caslab.csl.yale.edu/workshops/hasp2018/HASP18_a9-mofrad_slides.pdf

· SGX-hardware list

https://github.com/ayeks/SGX-hardware

· Performance Analysis of Scientific Computing Workloads on Trusted Execution Environments

https://arxiv.org/pdf/2010.13216.pdf

· Helping Secure the Cloud with AMD EPYC Secure Encrypted Virtualization

https://developer.amd.com/wp-content/resources/HelpingSecuretheCloudwithAMDEPYCSEV.pdf

· Azure confidential computing

https://azure.microsoft.com/en-us/solutions/confidential-compute/

· Azure and Intel commit to delivering next generation confidential computing

· DCsv2-series VM now generally available from Azure confidential computing

· Confidential computing nodes on Azure Kubernetes Service (public preview)

https://docs.microsoft.com/en-us/azure/confidential-computing/confidential-nodes-aks-overview

· Expanding Google Cloud’s Confidential Computing portfolio

· A deeper dive into Confidential GKE Nodes — now available in preview

https://cloud.google.com/blog/products/identity-security/confidential-gke-nodes-now-available

· Using HashiCorp Vault with Google Confidential Computing

https://www.hashicorp.com/blog/using-hashicorp-vault-with-google-confidential-computing

· Confidential Computing is cool!

https://medium.com/google-cloud/confidential-computing-is-cool-1d715cf47683

· Data-in-use protection on IBM Cloud using Intel SGX

https://www.ibm.com/cloud/blog/data-use-protection-ibm-cloud-using-intel-sgx

· Why IBM believes Confidential Computing is the future of cloud security

· Alibaba Cloud Released Industry’s First Trusted and Virtualized Instance with Support for SGX 2.0 and TPM

Tips for Selecting a Public Cloud Provider

When an organization needs to select a public cloud service provider, there are several factors to take into consideration in order to choose the provider best suited to the organization’s needs.

In this post, we will review various considerations that will help organizations in the decision-making process.

Business goals

Before deciding to use a public cloud solution, or migrating existing environments to the cloud, it is important that organizations review their business goals. Explore what value the organization gets from maintaining existing systems on premise and what value the migration to the cloud promises. Based on what you discover, decide which systems will be deployed in the cloud first, and which systems your organization will consume as managed services.

Review the lists of services offered in the cloud

Public cloud providers publish a list of services in various areas.

Review the list of current services and see how they stand up to your organization’s needs. This will help you narrow down the most suitable options.

Here are some examples of public cloud service catalogs:

· AWS — https://aws.amazon.com/products/

· Azure — https://azure.microsoft.com/en-us/services/

· GCP — https://cloud.google.com/products

· Oracle Cloud — https://www.oracle.com/cloud/products.html

· IBM — https://www.ibm.com/cloud/products

· Salesforce — https://www.salesforce.com/eu/products/

· SAP — https://www.sap.com/products.html

Centrally authenticating users against Active Directory in IaaS / PaaS environments

Many organizations manage access rights to various systems based on an organizational Active Directory.

Although it is possible to deploy Domain Controllers on virtual servers in an IaaS environment, or to create a federation between the on-premise and cloud environments, some cloud providers offer a managed Active Directory service based on the Kerberos protocol (the most common authentication protocol in on-premise environments), which might ease the migration to the public cloud.

Examples of managed Active Directory services:

· Azure Active Directory Domain Services

· Google Managed Service for Microsoft Active Directory

Understanding IaaS / PaaS pricing models

Public cloud providers publish pricing calculators and documentation on their service pricing models.

Understanding pricing models might be complex for some services. For this reason, it is highly recommended to contact an account manager, a partner, or a reseller for assistance.

Comparing similar services among different cloud providers will enable an organization to identify and choose the most suitable cloud provider based on the organization’s needs and budget.

Examples of pricing calculators:

· AWS Simple Monthly Calculator

· Google Cloud Platform Pricing Calculator

Check if your country has a local region of one of the public cloud providers

Choosing one provider over a competitor may be easier if the provider has a local region in your country. This helps, for example, where there are restrictions on transferring data outside a country’s borders (or between continents), or where network latency is a concern when transferring large data sets between local data centers and cloud environments.

This is relevant for all cloud service models (IaaS / PaaS / SaaS).

Examples of regional mapping:

· AWS:

AWS Regions and Availability Zones

· Azure and Office 365:

Where your Microsoft 365 customer data is stored

· Google Cloud Platform:

· Oracle Cloud:

Oracle Data Regions for Platform and Infrastructure Services

· Salesforce:

Where is my Salesforce instance located?

· SAP:

SAP Cloud Platform Regions and Service Portfolio

Service status reporting and outage history

Mature cloud providers transparently publish their service availability status in various regions around the world, including outage history of their services.

Such providers also know how to build stable, highly available infrastructure over the long term and across multiple geographic locations, and how to minimize the “blast radius” of an outage so that it affects as few customers as possible.

A thorough review of an outage history report allows organizations to get a good picture over an extended period and help in the decision-making process.

Examples of cloud providers’ service status and outage history documentation:

· AWS:

· Azure:

· Google Cloud Platform:

Google Cloud Status Dashboard — Incidents Summary

· Oracle Cloud:

Oracle Cloud Infrastructure — Current Status

Oracle Cloud Infrastructure — Incident History

· Salesforce:

· SAP:

SAP Cloud Platform Status Page

Summary

As you can see, there are several important factors to take into consideration when selecting a specific cloud provider. We have covered some of the more common ones in this post.

For an organization to make an educated decision, it is recommended to check what brings value for the organization, in both the short and long-term. It is important to review cloud providers’ service catalogs, alongside a thorough review of global service availability, transparency, understanding pricing models and hybrid architecture that connects local data centers to the cloud.

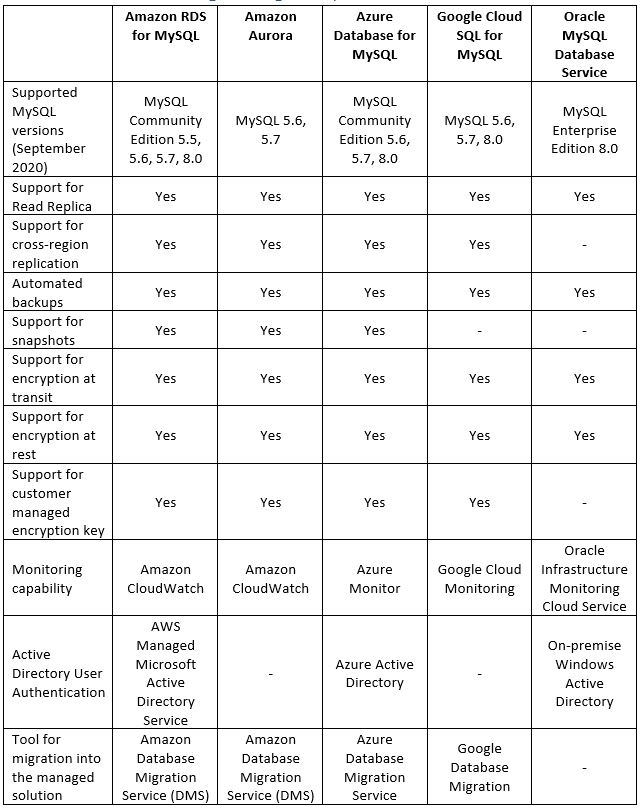

Running MySQL Managed Database in the Cloud

Today, more and more organizations are moving to the public cloud and choosing open source databases. They are choosing this for a variety of reasons, but license cost is one of the main ones.

In this post, we will review some of the common alternatives for running MySQL database inside a managed environment.

Legacy applications may be a reason for manually deploying and managing MySQL database.

Although it is possible to deploy a virtual machine and manually install a MySQL database (or even a MySQL cluster) on top of it, unless your organization has a dedicated and capable DBA, I recommend looking at what brings value to your organization. Unless databases directly influence your organization’s revenue, I recommend paying the extra money and choosing a managed solution based on a Platform as a Service model.

It is important to note that several cloud providers offer data migration services to assist in migrating existing MySQL (or even MS-SQL and Oracle) databases from on-premise to a managed service in the cloud.

Benefits of using managed database solutions

- Easy deployment – With a few clicks from within the web console, or using CLI tools, you can deploy fully managed MySQL databases (or a MySQL cluster)

- High availability and read replicas – Configurable both during deployment and after the database has been deployed, according to customer requirements

- Maintenance – The entire service maintenance (including database fine-tuning, operating system, and security patches, etc.) is done by the cloud provider

- Backup and recovery – Embedded inside the managed solution and as part of the pricing model

- Encryption in transit and at rest – Embedded inside the managed solution

- Monitoring – As with any managed solution, cloud providers monitor service stability and allow customers access to metrics for further investigation (if needed)

Alternatives for running managed MySQL database in the cloud

Summary

As you can read in this article, running a MySQL database in a managed environment in the cloud is a viable option, and there are various reasons for taking this step (license cost, less manpower spent maintaining the database and operating system, backups, security, availability, etc.).

References

How to run HPC in the cloud?

Is it feasible to run HPC in the cloud? How different is it from running a local HPC cluster? What are some of the common alternatives for running HPC in the cloud?

Introduction

Before beginning our discussion about HPC (High Performance Computing) in the cloud, let us clarify what HPC actually means.

“High Performance Computing most generally refers to the practice of aggregating computing power in a way that delivers much higher performance than one could get out of a typical desktop computer or workstation in order to solve large problems in science, engineering, or business.” (https://www.usgs.gov/core-science-systems/sas/arc/about/what-high-performance-computing)

In more technical terms – it refers to a cluster of machines composed of multiple cores (either physical or virtual cores), a lot of memory, fast parallel storage (for read/write) and fast network connectivity between cluster nodes.

HPC is useful when you need a lot of compute resources, from image or video rendering (in batch mode) to weather forecasting (which requires fast connectivity between the cluster nodes).

The world of HPC is divided into two categories:

- Loosely coupled – In this scenario you might need a lot of compute resources, however, each task can run in parallel and is not dependent on other tasks being completed.

Common examples of loosely coupled scenarios: Image processing, genomic analysis, etc.

- Tightly coupled – In this scenario you need fast connectivity between cluster resources (such as memory and CPU), and each cluster node depends on other nodes for the completion of the task. Common examples of tightly coupled scenarios: Computational fluid dynamics, weather prediction, etc.
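The loosely coupled case can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `process_image` is a hypothetical stand-in for one independent unit of work, and a local thread pool stands in for the cluster nodes that a real HPC scheduler would dispatch tasks to.

```python
from concurrent.futures import ThreadPoolExecutor


def process_image(image_id: int) -> int:
    """Hypothetical stand-in for one independent unit of work."""
    return sum(i * i for i in range(image_id * 1000)) % 255


image_ids = list(range(1, 9))

# Each task depends only on its own input, so tasks can run in any order
# and on any worker. pool.map returns the results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(process_image, image_ids))
```

Because no task waits on another, adding workers shortens the total run time without any change to the task code. A tightly coupled workload does not have this property: its nodes exchange data during the computation, which is why it needs fast interconnects.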

Pricing considerations

Deploying an HPC cluster on premise requires significant resources. This includes a large investment in hardware (multiple machines connected in the cluster, with many CPUs or GPUs, with parallel storage and sometimes even RDMA connectivity between the cluster nodes), manpower with the knowledge to support the platform, a lot of electric power, and more.

Deploying an HPC cluster in the cloud is also costly. The price of a virtual machine with multiple CPUs, GPUs, or a large amount of RAM can be very high compared to purchasing the same hardware on premise and using it 24×7 for 3-5 years.

The cost of parallel storage, as compared to other types of storage, is another consideration.

The magic formula for running HPC clusters in the cloud, while still enjoying the benefits of (virtually) unlimited compute/memory/storage resources, is to build dynamic clusters.

We do this by building the cluster for a specific job, according to the customer’s requirements (in terms of number of CPUs, amount of RAM, storage capacity, network connectivity between the cluster nodes, required software, etc.). Once the job is completed, we copy the job output data and take down the entire HPC cluster in order to avoid paying for idle hardware.
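The cost argument behind dynamic clusters can be sketched as simple arithmetic. All figures below (node count, hourly price, job length, number of jobs, setup overhead) are hypothetical values chosen for illustration, not real provider prices, and the model ignores storage and data transfer charges.

```python
HOURS_IN_MONTH = 730  # common approximation used for monthly estimates


def always_on_monthly_cost(nodes: int, price_per_node_hour: float) -> float:
    """Cost of keeping the whole cluster running 24x7 for a month."""
    return nodes * price_per_node_hour * HOURS_IN_MONTH


def dynamic_monthly_cost(nodes: int, price_per_node_hour: float,
                         job_hours: float, jobs_per_month: int,
                         setup_hours: float = 0.5) -> float:
    """Cost when the cluster exists only while a job is being set up or running."""
    billed_hours_per_job = job_hours + setup_hours
    return nodes * price_per_node_hour * billed_hours_per_job * jobs_per_month


# Hypothetical workload: 16 nodes at 3.00 per node-hour, ten 6-hour jobs a month.
static_cost = always_on_monthly_cost(nodes=16, price_per_node_hour=3.0)
dynamic_cost = dynamic_monthly_cost(nodes=16, price_per_node_hour=3.0,
                                    job_hours=6, jobs_per_month=10)
savings_ratio = 1 - dynamic_cost / static_cost
```

Under these assumptions the dynamic cluster is billed for 65 hours a month instead of 730. The saving shrinks as the cluster gets busier, so the comparison is worth repeating with your own utilization figures.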

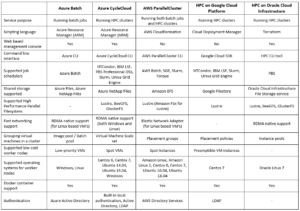

Alternatives for running HPC in the cloud

Summary

As you can see, running HPC in the public cloud is a viable option. But you need to carefully plan the specific solution, after gathering the customer’s exact requirements in terms of required compute resources, required software and of course budget estimation.

Product documentation

- Azure Batch

https://azure.microsoft.com/en-us/services/batch/

- Azure CycleCloud

https://azure.microsoft.com/en-us/features/azure-cyclecloud/

- AWS ParallelCluster

https://aws.amazon.com/hpc/parallelcluster/

- Slurm on Google Cloud Platform

https://github.com/SchedMD/slurm-gcp

- HPC on Oracle Cloud Infrastructure